Yay! Another "I am using 10Gb and my speed is slow!" post.

NAS

OS: TrueNAS-SCALE-22.02-RC.1-2

Motherboard: Supermicro X10SRL-F

CPU: Intel(R) Xeon(R) CPU E5-1650 v3 @ 3.50GHz

NIC: Intel X550-T2

RAM: 128GiB

Storage: 1 main pool. 7 HDD drives in Z2.

Cable: 10 ft Cat8

No switch. The two NICs are connected directly.

Workstation

OS: Windows 11

Motherboard: ROG STRIX Z590-I GAMING WIFI

CPU: i9 - 11900K

NIC: Sonnet Solo 10G (Thunderbolt 3 adapter in Thunderbolt 4 port.)

RAM: 32GiB

Storage: Samsung 980, Samsung 960, 2x Samsung 850 in RAID0

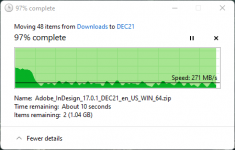

Every transfer starts out at 800-900 MB/s, then within 5-10 seconds falls to 250-350 MB/s and stays there for the rest of the transfer.

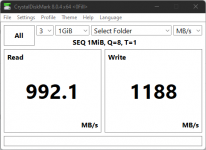

The CrystalDiskMark test always comes out about the same. I know this is misleading, though, as it reports the fastest speed reached at any point during the test.
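
Since a peak figure hides the drop-off, a per-second log of a long write might be more telling than the benchmark. Below is a minimal sketch of that idea, not a proper benchmarking tool. The function name `timed_write`, the chunk size, and the sampling interval are all made up for illustration. It writes random data to a path (which could be a mapped share) and records one MB/s sample per interval.

```python
import os
import time


def timed_write(path, total_bytes, chunk_bytes=4 * 1024 * 1024, interval_s=1.0):
    """Write total_bytes to path and return a list of MB/s samples, one per interval.

    Hypothetical helper for illustration; sizes and interval are arbitrary defaults.
    """
    # Random data is incompressible, so dataset compression cannot inflate the numbers.
    chunk = os.urandom(chunk_bytes)
    samples = []
    written = 0
    window_bytes = 0
    window_start = time.perf_counter()

    # buffering=0 avoids Python-level buffering; the OS and the NAS may still cache writes.
    with open(path, "wb", buffering=0) as f:
        while written < total_bytes:
            remaining = total_bytes - written
            n = f.write(chunk[: min(chunk_bytes, remaining)])
            written += n
            window_bytes += n

            now = time.perf_counter()
            if now - window_start >= interval_s:
                samples.append(window_bytes / (now - window_start) / 1e6)
                window_bytes = 0
                window_start = now
        # Ask the OS to flush its buffers before the final sample is taken.
        os.fsync(f.fileno())

    # Record whatever was written after the last full interval.
    if window_bytes:
        elapsed = max(time.perf_counter() - window_start, 1e-9)
        samples.append(window_bytes / elapsed / 1e6)
    return samples
```

Calling it with a file on the share and a size well beyond the first few seconds of transfer (for example 20 GB) should return a list where the early samples show the initial burst and the later ones the sustained rate, assuming the drop reproduces outside of Explorer copies.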

With limited knowledge in this field, I am at a loss as to why the speed drops off.

Code:

    Connecting to host 192.168.5.11, port 5201
    [  4] local 192.168.5.80 port 51982 connected to 192.168.5.11 port 5201
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec   752 MBytes  6.30 Gbits/sec
    [  4]   1.00-2.00   sec   723 MBytes  6.06 Gbits/sec
    [  4]   2.00-3.00   sec   727 MBytes  6.10 Gbits/sec
    [  4]   3.00-4.00   sec   720 MBytes  6.04 Gbits/sec
    [  4]   4.00-5.00   sec   728 MBytes  6.10 Gbits/sec
    [  4]   5.00-6.00   sec   756 MBytes  6.34 Gbits/sec
    [  4]   6.00-7.00   sec   810 MBytes  6.80 Gbits/sec
    [  4]   7.00-8.00   sec   735 MBytes  6.17 Gbits/sec
    [  4]   8.00-9.00   sec   734 MBytes  6.16 Gbits/sec
    [  4]   9.00-10.00  sec   730 MBytes  6.13 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec  7.24 GBytes  6.22 Gbits/sec                  sender
    [  4]   0.00-10.00  sec  7.24 GBytes  6.22 Gbits/sec                  receiver

    iperf Done.
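
To compare the iperf figures (Gbits/sec) with the file-copy figures (MB/s), it helps to put both in the same unit. A small sketch of the arithmetic, assuming decimal units (1 Gbit = 10^9 bits, 1 MB = 10^6 bytes); the function names are my own:

```python
def gbits_to_mbytes_per_s(gbits_per_s):
    """Convert Gbit/s to MB/s using decimal units (8 bits per byte)."""
    return gbits_per_s * 1e9 / 8 / 1e6


def mbytes_per_s_to_gbits(mbytes_per_s):
    """Convert MB/s to Gbit/s using decimal units."""
    return mbytes_per_s * 1e6 * 8 / 1e9


# Figures taken from the post: iperf average, line rate, and observed copy speeds.
print(round(gbits_to_mbytes_per_s(6.22), 1))   # iperf average      -> 777.5 MB/s
print(round(gbits_to_mbytes_per_s(10.0), 1))   # 10GbE line rate    -> 1250.0 MB/s
print(round(mbytes_per_s_to_gbits(250), 1))    # sustained low end  -> 2.0 Gbit/s
print(round(mbytes_per_s_to_gbits(350), 1))    # sustained high end -> 2.8 Gbit/s
```

By this arithmetic the sustained 250-350 MB/s is roughly 2.0-2.8 Gbit/s, well under the 6.22 Gbit/s that iperf measured. That would be consistent with the drop coming from somewhere other than the raw link, though it does not prove it.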
