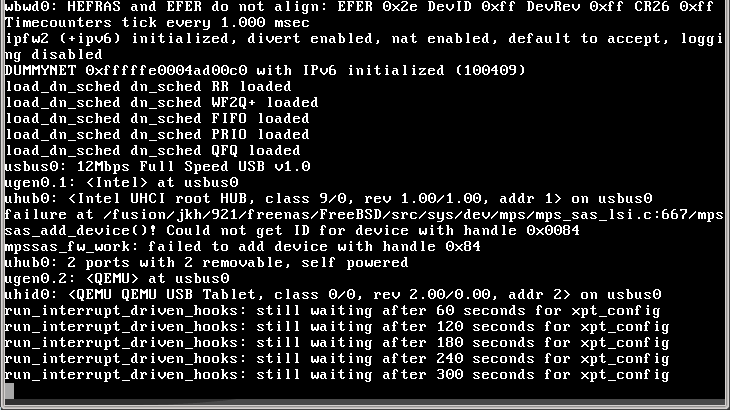

I'm getting this error when booting the FreeNAS 9.2.1.7 ISO. The machine is a VM, and PCI passthrough is configured properly.

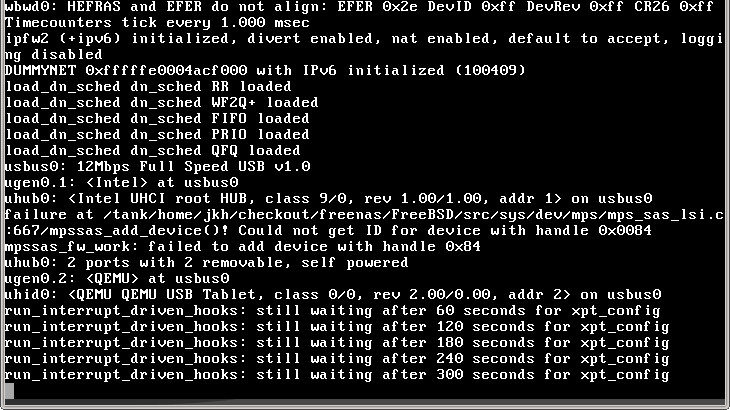

I get the same error with the 9.2.1 ISO:

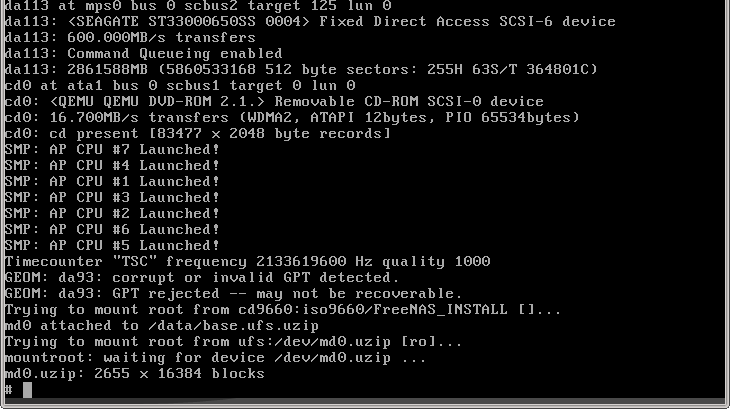

With the 9.2.0 ISO, however, the boot finishes. Curiously, one of the disks may have a corrupt or invalid GPT. Could this be why 9.2.1 and later fail to continue booting?
