cwagz (Dabbler)

- Joined: Jul 3, 2022
- Messages: 35

I am very new to TrueNAS and ZFS.

I recently converted my home Hyper-V / Windows file server over to TrueNAS Scale.

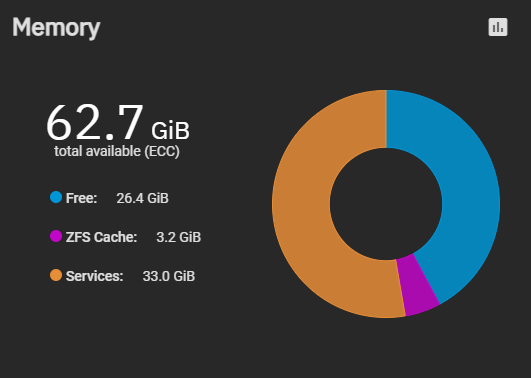

The system had 32GB of RAM when I first installed TrueNAS, but I quickly realized I needed more to support the VMs I wanted to run. I upgraded the RAM to 64GB and have now allocated 28GB of it to my 6 VMs.

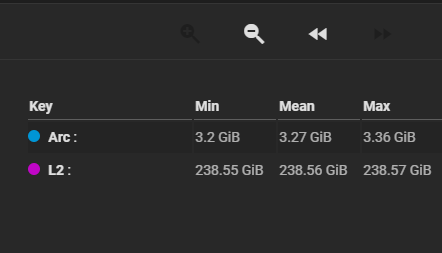

The main storage pool is a RAIDZ1 (4 x 8TB HDDs, plus 1 x 256GB NVMe for cache) that holds archived data (photos and such) along with the image backup repository for all the other computers in the house.

The VMs all run on a mirrored pool (2 x 1TB NVMe).

My question is about ARC size. I was under the impression that ZFS would consume pretty much all of my leftover RAM for ARC, but every time I check, the ARC size is only around 3.2GB. Is this correct? Is it possible the ARC settings are still based on my initial installation, which only had 32GB of RAM?
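For reference, one way to compare the current ARC size against its configured limits is to read the kstat file that OpenZFS exposes on Linux. This is only a minimal Python sketch, assuming the stats are available at `/proc/spl/kstat/zfs/arcstats` (the usual location on Linux, which TrueNAS Scale is built on). The helper names `parse_arcstats` and `summarize` are made up for illustration and are not part of any TrueNAS tooling.

```python
from pathlib import Path

# Assumed location of the ARC kstats on Linux-based OpenZFS systems.
ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text):
    """Parse 'name type data' rows into a {name: int} dict.

    Header rows (the kstat banner and the column titles) are skipped
    because they do not have exactly three fields ending in an integer.
    """
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def summarize(stats):
    """Return current size, target (c), and min/max limits in GiB."""
    gib = 1024 ** 3
    keys = ("size", "c", "c_min", "c_max")
    return {k: round(stats[k] / gib, 2) for k in keys if k in stats}


if __name__ == "__main__":
    if ARCSTATS_PATH.exists():
        summary = summarize(parse_arcstats(ARCSTATS_PATH.read_text()))
        for name, value in summary.items():
            print(f"{name}: {value} GiB")
    else:
        print(f"{ARCSTATS_PATH} not found; is the ZFS module loaded?")
```

If `size` sits well below `c_max`, the ARC has simply not grown to its ceiling; if `c_max` itself is small, the limit is what is holding it down.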
