Hi, I have an all-in-one (AIO) setup: ESXi 6.7 with FreeNAS 11.2 BETA 3 running as a VM. I use an LSI SAS 9300-8i flashed to IT mode with the V16 firmware from Broadcom (package 9300_8i_Package_P16_IR_IT_FW_BIOS_for_MSDOS_Windows), with 8 HGST 4TB drives in one pool.

ESXi, FreeNAS (FN) and one datastore are installed on a Samsung EVO 860 250GB. On ESXi I created one storage network and one VM network. For the VM I added a Samsung EVO PRO 280GB datastore, installed Windows 10 Pro, and am using the built-in iSCSI initiator. On FreeNAS I set up an iSCSI zvol in the pool and configured the share. Both the VM network and the storage network are communicating, and iSCSI is functional. For the pool I use an Intel 900p PCIe SSD, configured for swap/L2ARC/SLOG. I used Stux's guides as a reference for this setup; see my signature for all the hardware.

When I finally ran a speed test on the Windows VM to check iSCSI, the read speed was good, around 600MB/s, but the write speed was only 120-140MB/s. Is that write speed normal when using iSCSI on the pool? Is there any configuration I'm missing on FN or ESXi?

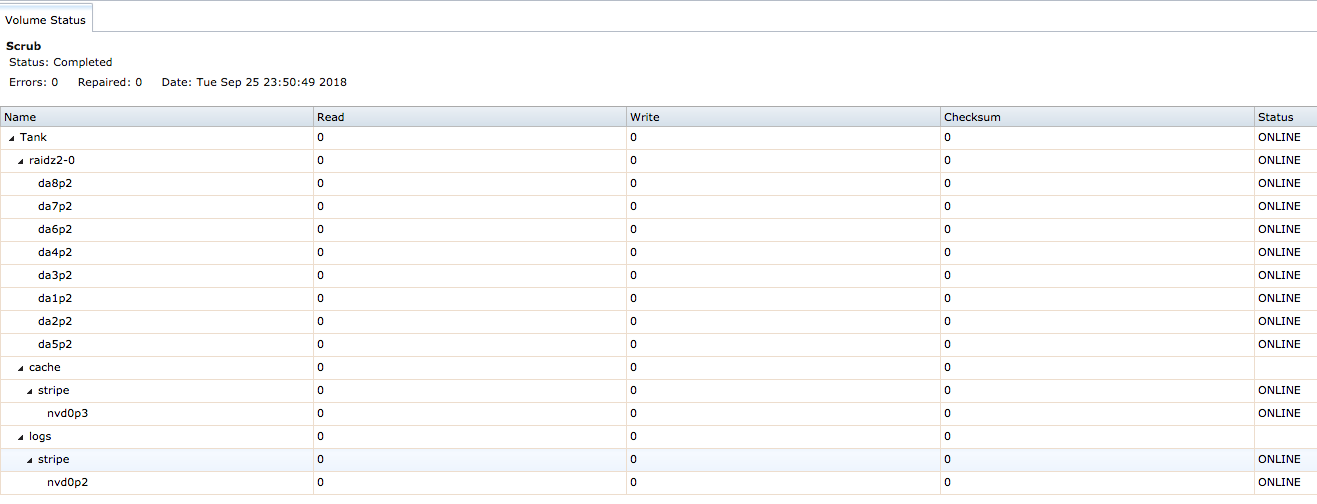

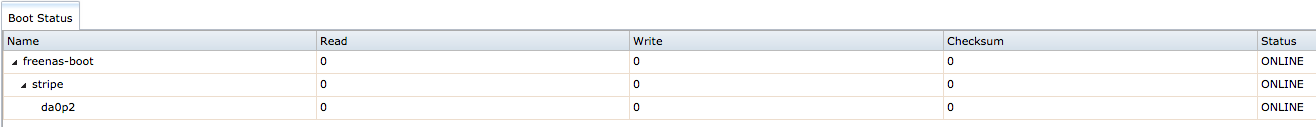

Note: I'm not sure if it's related, but I thought I would mention two things. First, during testing I installed FN and imported the pool a few times while switching between a bare-metal USB install and the ESXi SSD. One time FN didn't load the configuration, but when it did load, my pool device IDs had changed. Before, the pool disks were ada0-7 and the boot devices were da0-1 (i.e. separate); after the boot issue, the pool and boot devices all show up as da0-8. Does that mean the FN boot is in the FN pool? Second, when I flashed the HBA I used the Broadcom 9300 firmware, but in other threads I see everyone using 9211 IT V20. Did I flash the right firmware?

Please find more info below, and let me know if you need anything else.

Thanks in advance for your help.

Code:

    root@freenas[~]# camcontrol devlist
    <VMware Virtual disk 2.0>          at scbus2 target 0 lun 0 (pass0,da0)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 0 lun 0 (pass1,da1)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 1 lun 0 (pass2,da2)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 2 lun 0 (pass3,da3)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 3 lun 0 (pass4,da4)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 4 lun 0 (pass5,da5)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 5 lun 0 (pass6,da6)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 6 lun 0 (pass7,da7)
    <ATA HGST HDN724040AL A5E0>        at scbus3 target 7 lun 0 (pass8,da8)
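To make the listing above easier to check against the boot/pool question, here is a small Python sketch that pulls the model and `daN` name out of each `camcontrol devlist` entry. The function name `parse_devlist` and the trimmed `SAMPLE` string are just for illustration, and the regex only assumes the entry layout seen in this post.

```python
import re

# Three entries copied from the camcontrol devlist output in this post.
SAMPLE = """\
<VMware Virtual disk 2.0>          at scbus2 target 0 lun 0 (pass0,da0)
<ATA HGST HDN724040AL A5E0>        at scbus3 target 0 lun 0 (pass1,da1)
<ATA HGST HDN724040AL A5E0>        at scbus3 target 7 lun 0 (pass8,da8)
"""

# One entry: <model> at scbusN target T lun L (passX,daY)
ENTRY = re.compile(
    r"<(?P<model>[^>]+)>\s+at\s+(?P<bus>scbus\d+)\s+target\s+(?P<target>\d+)"
    r"\s+lun\s+(?P<lun>\d+)\s+\((?P<devs>[^)]+)\)"
)


def parse_devlist(text):
    """Return one dict per device found in camcontrol devlist output."""
    devices = []
    for match in ENTRY.finditer(text):
        names = match.group("devs").split(",")
        # The non-pass name (e.g. da0) is the disk device node.
        disk = next((n for n in names if not n.startswith("pass")), None)
        devices.append(
            {
                "model": match.group("model").strip(),
                "bus": match.group("bus"),
                "target": int(match.group("target")),
                "disk": disk,
            }
        )
    return devices


if __name__ == "__main__":
    for dev in parse_devlist(SAMPLE):
        print(dev["disk"], dev["bus"], dev["model"])
```

Because it uses `finditer`, it also works if the output was pasted as one long line. In the listing above, da0 is the VMware virtual disk on scbus2, while da1-da8 are the HGST drives on scbus3.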

Code:

    root@freenas[~]# zpool status -v
      pool: Tank
     state: ONLINE
    status: Some supported features are not enabled on the pool. The pool can
            still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(7) for details.
      scan: scrub repaired 0 in 0 days 01:44:29 with 0 errors on Tue Sep 25 23:50:49 2018
    config:

            NAME                                            STATE     READ WRITE CKSUM
            Tank                                            ONLINE       0     0     0
              raidz2-0                                      ONLINE       0     0     0
                gptid/b5b40bb4-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/b69faf01-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/b792e7b8-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/b87cfa92-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/b969428a-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/ba5cf8b5-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/bb4ae668-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
                gptid/bc3d4265-4e99-11e7-9cba-0cc47adb3218  ONLINE       0     0     0
            logs
              gptid/6ff84a55-c0a1-11e8-9f75-0cc47adb3218    ONLINE       0     0     0
            cache
              gptid/76d01ebd-c0a1-11e8-9f75-0cc47adb3218    ONLINE       0     0     0

    errors: No known data errors

      pool: freenas-boot
     state: ONLINE
      scan: none requested
    config:

            NAME          STATE     READ WRITE CKSUM
            freenas-boot  ONLINE       0     0     0
              da0p2       ONLINE       0     0     0

    errors: No known data errors

Code:

    root@freenas[~]# swapinfo
    Device                           1K-blocks     Used    Avail Capacity
    /dev/gptid/6762c737-c0a1-11e8-9   16777216   475784 16301432     3%

Code:

    root@freenas[~]# cat /etc/fstab
    fdescfs /dev/fd fdescfs rw 0 0
    /dev/gptid/6762c737-c0a1-11e8-9f75-0cc47adb3218.eli none swap sw 0 0

Code:

    root@freenas[~]# glabel status | grep nvd0
    gptid/6ff84a55-c0a1-11e8-9f75-0cc47adb3218     N/A  nvd0p2
    gptid/76d01ebd-c0a1-11e8-9f75-0cc47adb3218     N/A  nvd0p3
    gptid/6762c737-c0a1-11e8-9f75-0cc47adb3218     N/A  nvd0p1
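For anyone cross-checking how the 900p is split up, this is a rough Python sketch that joins the `glabel status` lines above with the roles those same gptids have in my `zpool status` and `/etc/fstab` output. The `ROLES` table is typed in by hand from those outputs, and `partition_roles` is just an illustrative helper name.

```python
# glabel status | grep nvd0, copied from this post (one entry per line).
GLABEL = """\
gptid/6ff84a55-c0a1-11e8-9f75-0cc47adb3218     N/A  nvd0p2
gptid/76d01ebd-c0a1-11e8-9f75-0cc47adb3218     N/A  nvd0p3
gptid/6762c737-c0a1-11e8-9f75-0cc47adb3218     N/A  nvd0p1
"""

# Roles copied by hand from zpool status (logs, cache) and /etc/fstab (swap).
ROLES = {
    "gptid/6ff84a55-c0a1-11e8-9f75-0cc47adb3218": "log (SLOG)",
    "gptid/76d01ebd-c0a1-11e8-9f75-0cc47adb3218": "cache (L2ARC)",
    "gptid/6762c737-c0a1-11e8-9f75-0cc47adb3218": "swap",
}


def partition_roles(glabel_text, roles):
    """Map each partition name (e.g. nvd0p2) to its role, or 'unknown'."""
    result = {}
    for line in glabel_text.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue  # skip blank or unexpected lines
        label, _status, component = fields
        result[component] = roles.get(label, "unknown")
    return result


if __name__ == "__main__":
    for part, role in sorted(partition_roles(GLABEL, ROLES).items()):
        print(part, "->", role)
```

With the values above this gives nvd0p1 as swap, nvd0p2 as the log device and nvd0p3 as the cache device.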

top

Code:

    load averages:  0.07,  0.17,  0.17    up 0+15:29:32  13:15:19
    57 processes:  1 running, 56 sleeping
    CPU:  0.1% user,  0.0% nice,  0.3% system,  0.1% interrupt, 99.5% idle
    Mem: 57M Active, 1103M Inact, 31M Laundry, 5325M Wired, 9395M Free
    ARC: 2705M Total, 334M MFU, 2224M MRU, 12M Anon, 28M Header, 104M Other
         2005M Compressed, 6299M Uncompressed, 3.14:1 Ratio
    Swap: 16G Total, 454M Used, 16G Free, 2% Inuse