pro lamer (Guru · Joined Feb 16, 2018 · 626 messages)

Long story short: is it possible to run a Supermicro X9DRL-IF motherboard with only one DIMM slot populated?

The story:

Is it possible to run a Supermicro X9DRL-IF dual-socket motherboard (bulk or retail) with only one DIMM slot populated? (With only one CPU installed, of course.)

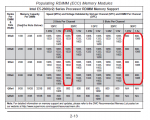

On one hand, the manual says "For memory to work properly, follow the tables", and the tables list only configurations with 2, 4, 6, or 8 slots populated. I also remember finding posts somewhere on the Internet claiming that a Supermicro dual-CPU motherboard needs at least two modules, but I can't find them anymore.

On the other hand, the same manual says (in the "Troubleshooting" chapter) "Turn on the system with only one DIMM module installed." I've also found a post by an author who tested modules one by one and who uses an X9DRL-3F motherboard (a mobo similar to my candidate, the X9DRL-IF), but the author didn't say whether the tests were run on the X9DRL-3F or on some other board.

The reason I'm asking is that I'm initially planning on 32GB of RAM, ideally as a single DDR3-1600 LRDIMM module.