Hi everyone,

we have a TrueNAS 12 installation with a 5 TB ZVOL that is shared via iSCSI to a production server, which stores its data on it. So far, so good.

Now, for incremental file backups, we have a second server running Bacula (backup software). At backup time, our plan is to take a snapshot of the ZVOL in TrueNAS, share this snapshot via iSCSI, mount it on the backup server, and run the incremental backup from it.

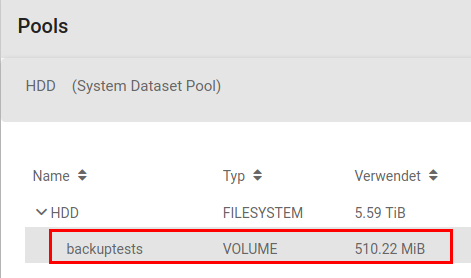

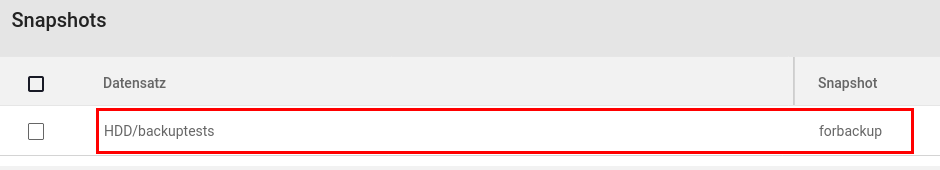
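The snapshot step of this plan could be scripted so that each backup run gets a uniquely named, timestamped snapshot. Below is a minimal sketch, assuming the standard OpenZFS `zfs snapshot dataset@name` command on the TrueNAS host. The dataset path `tank/backuptests`, the `bacula` prefix, and the helper name `snapshot_command` are hypothetical placeholders, and the sketch only builds and prints the command rather than running it.

```python
import shlex
from datetime import datetime, timezone


def snapshot_command(dataset: str, when: datetime, prefix: str = "bacula") -> list[str]:
    """Build (but do not run) the argv for a timestamped `zfs snapshot`.

    `dataset` is the ZVOL path; "tank/backuptests" below is a hypothetical example.
    `prefix` is a hypothetical tag marking snapshots taken for the backup job.
    """
    stamp = when.strftime("%Y%m%d-%H%M%S")  # sortable timestamp, e.g. 20210301-220000
    return ["zfs", "snapshot", f"{dataset}@{prefix}-{stamp}"]


if __name__ == "__main__":
    cmd = snapshot_command(
        "tank/backuptests", datetime(2021, 3, 1, 22, 0, tzinfo=timezone.utc)
    )
    # Print a shell-safe command line; to actually execute it on the TrueNAS host,
    # pass `cmd` to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Building an argument list instead of a shell string avoids quoting problems if dataset names ever contain unusual characters.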

To test this scenario, we created another small ZVOL (backuptests), mounted it on the production server, created some files on it, and took a snapshot in the TrueNAS GUI:

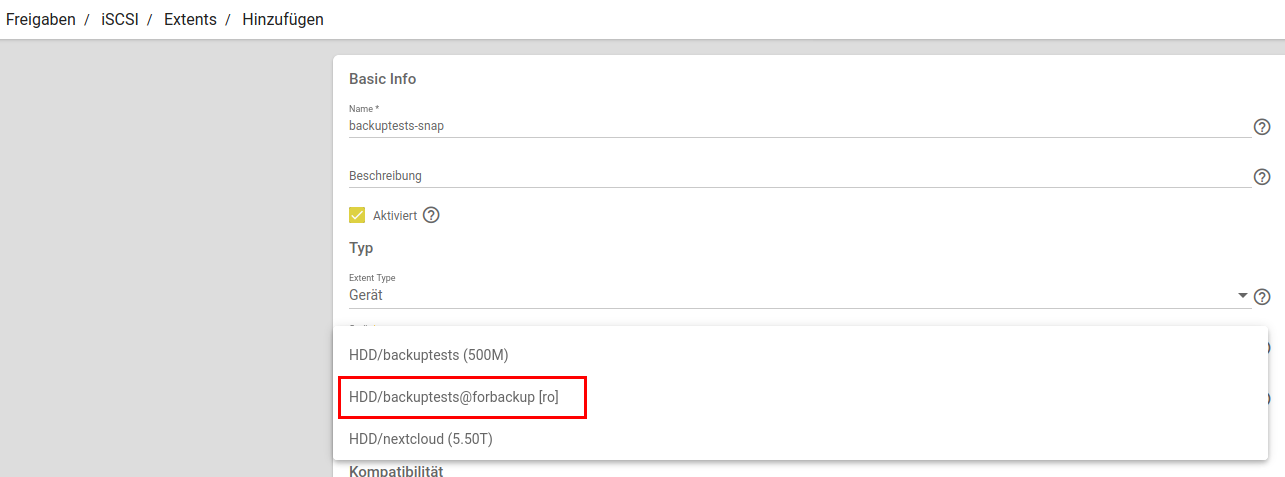

Now we are at the point of sharing this snapshot via iSCSI in the TrueNAS GUI, which doesn't work. In the extent dialog, TrueNAS shows the snapshot volume (read-only, of course, which is fine):

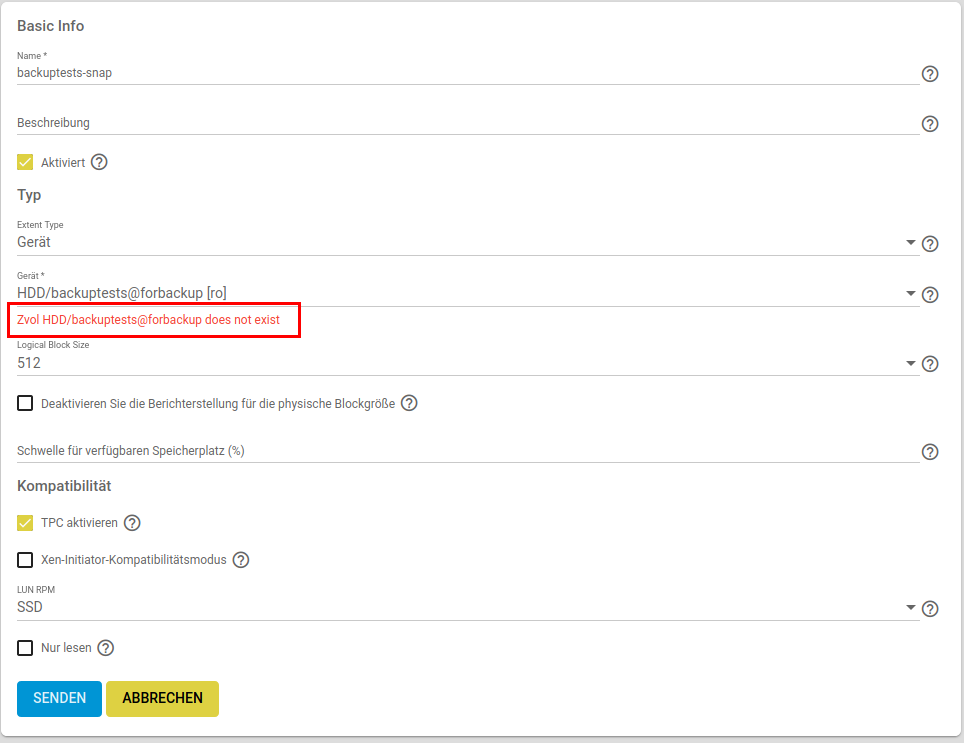

But when we click "send", an error appears saying that the ZVOL doesn't exist.

What are we doing wrong? Can't ZVOL snapshots be shared via iSCSI, even though they are shown in the dialog? Or is this scenario complete nonsense?

Since an iSCSI volume should (or can) only be attached to a single server, we thought sharing a snapshot as a "new" volume would be a way around that.

Thx for any hint!

T0m@
