Hi,

I have one SSD pool ("/mnt/SSDpool") and one HDD pool ("/mnt/HDDpool") and want to duplicate the whole SSD pool to the HDD pool as a backup.

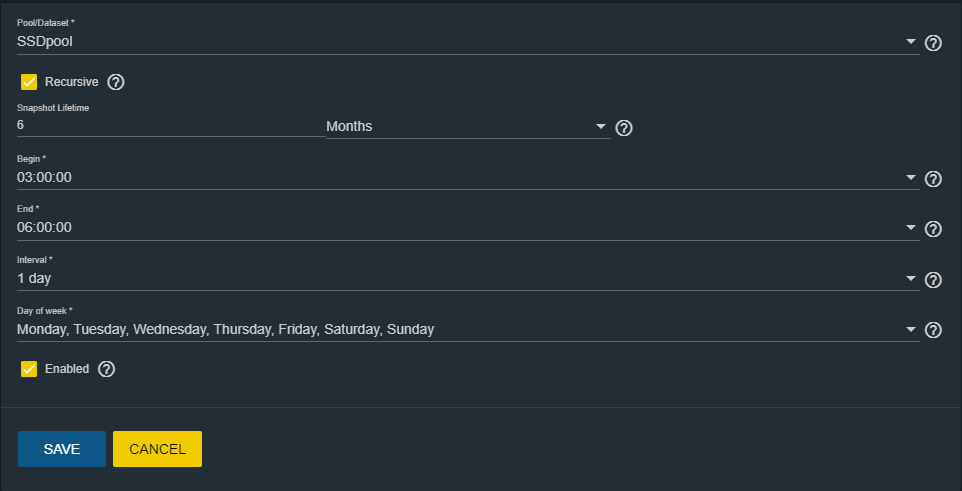

I created a snapshot task with "recursive" enabled...

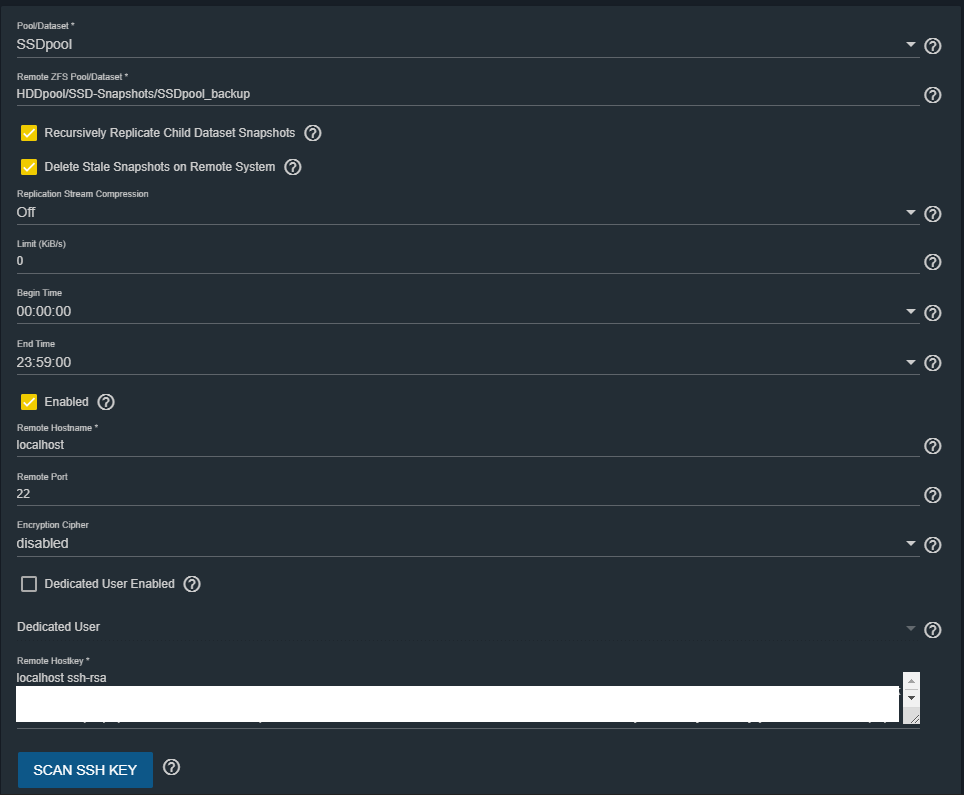

...and a replication task, also with "recursive" enabled and with localhost as the remote host...

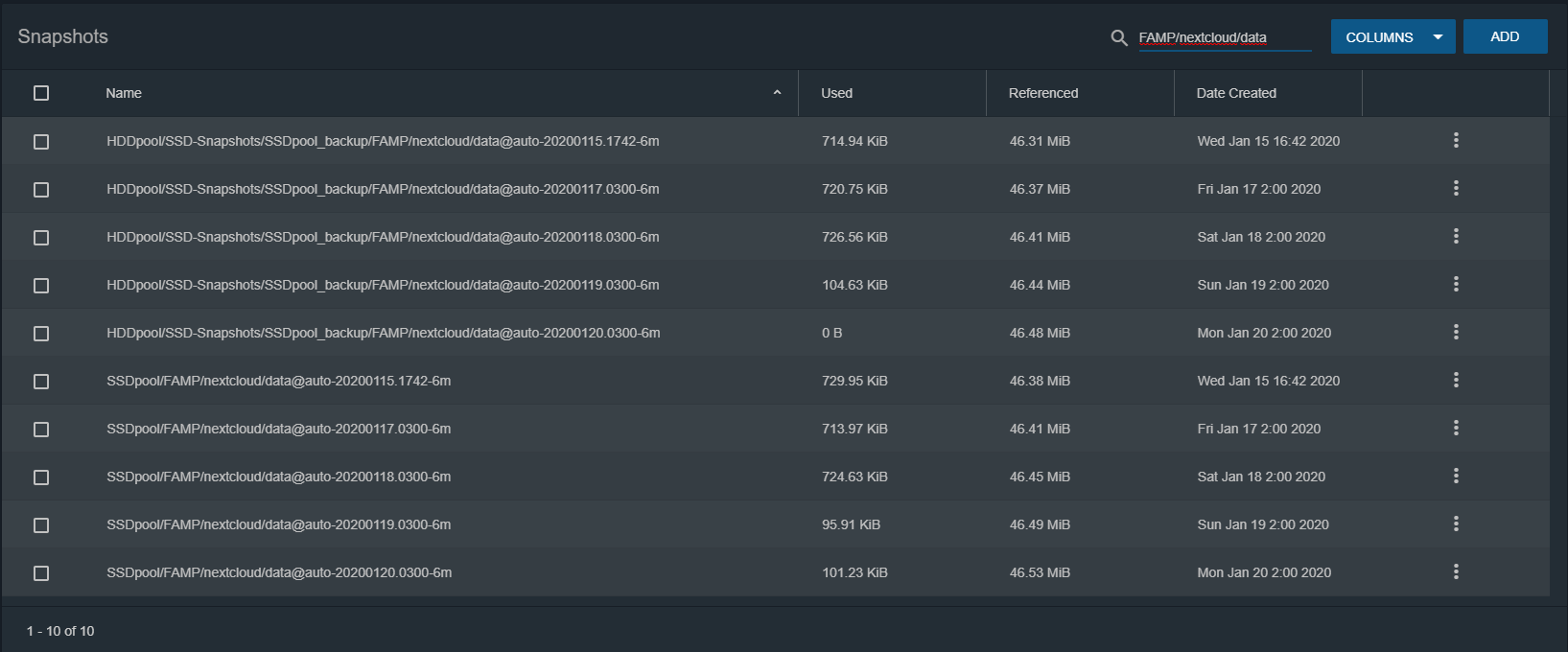

...and snapshots are created...

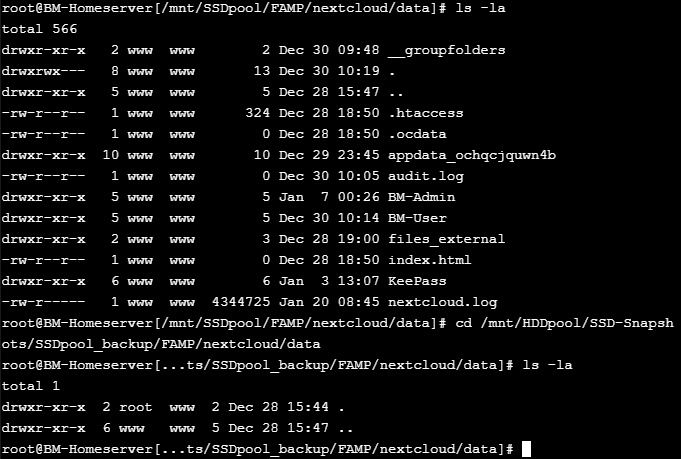

...and the replication runs and finishes with "up to date", but some datasets on the HDD pool are empty (like "HDDpool/SSD-Snapshots/SSDpool_backup/FAMP/nextcloud/data") even though the corresponding datasets on the SSD pool contain files (like "SSDpool/FAMP/nextcloud/data").

In the console I see these messages:

Jan 19 03:00:08 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/Backup/root) failed: No such file or directory

Jan 19 03:00:08 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/ComiXed/root) failed: No such file or directory

Jan 19 03:00:08 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/Emby/root) failed: No such file or directory

Jan 19 03:00:08 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/FAMP/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/FAMP/nextcloud/config) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/FAMP/nextcloud/data) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/SSD-Share/HighSec/Documents) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/SSD-Share/HighSec/StuffX_SSD) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/Torrent/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/digiKam/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/emby/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/jDownloader/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/jails/mineos/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/releases/11.2-RELEASE/root) failed: No such file or directory

Jan 19 03:00:18 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/releases/11.3-RELEASE/root) failed: No such file or directory

Jan 19 03:00:28 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/SSD-Share/LowSec/Roms_SSD) failed: No such file or directory

Jan 19 03:00:28 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/iocage/download/11.2-RELEASE) failed: No such file or directory

Jan 19 03:00:38 BM-Homeserver collectd[4229]: statfs(/mnt/HDDpool/SSD-Snapshots/SSDpool_backup/Emby/cache) failed: No such file or directory

All those folders are empty on the HDD pool, but they shouldn't be.

1.) Why are those folders empty?

I found an old thread where a disabled "recursive" option on one side resulted in empty folders, but I enabled recursion on both sides.

2.) Is there a way to validate that the replication is complete and that both datasets are identical?
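
To make the question concrete, here is a rough sketch of the kind of check I have in mind. It compares snapshot names on both sides and reports which source snapshots have no counterpart under the backup root. The function names are my own (hypothetical), and I am assuming that `zfs list -H -r -t snapshot -o name` is the right way to get the names. Matching names only show that a snapshot arrived, not that the data is byte-identical, so this would be a first sanity check at best.

```python
import subprocess


def list_snapshots(dataset):
    """Return all snapshot names below `dataset`, one per entry.

    Assumes the `zfs` command is on PATH; -H drops the header line,
    -r recurses into child datasets.
    """
    result = subprocess.run(
        ["zfs", "list", "-H", "-r", "-t", "snapshot", "-o", "name", dataset],
        check=True,
        stdout=subprocess.PIPE,
        universal_newlines=True,
    )
    return [line for line in result.stdout.splitlines() if line]


def missing_on_target(source_snaps, target_snaps, source_root, target_root):
    """Return source snapshots (relative to the root) absent on the target."""

    def relative(names, root):
        # "SSDpool/FAMP@auto-1" with root "SSDpool" becomes "/FAMP@auto-1",
        # so names from both pools can be compared directly.
        prefixes = (root + "/", root + "@")
        return {name[len(root):] for name in names if name.startswith(prefixes)}

    source_rel = relative(source_snaps, source_root)
    target_rel = relative(target_snaps, target_root)
    return sorted(source_rel - target_rel)
```

On my system I would call it as `missing_on_target(list_snapshots("SSDpool"), list_snapshots("HDDpool/SSD-Snapshots/SSDpool_backup"), "SSDpool", "HDDpool/SSD-Snapshots/SSDpool_backup")`; an empty list would mean every source snapshot name also exists under the backup root.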

3.) Is it normal that the replication creates snapshots of the "target" datasets?

I only created a task to snapshot "SSDpool", but that job also creates snapshots of the replicated backup datasets on the HDD pool.

I am using FreeNAS-11.2-U7.