kaz.williams (Cadet, joined Apr 15, 2020, 3 messages)

Hello there :)

First off specs, let me know what other info would be useful and I'll include it!

Build: FreeNAS-9.2.1.9-RELEASE-x64

Platform: AMD Sempron(tm) 145 Processor

RAM: 20 GB

4x 4TB drives in a ZFS pool, I think it is a Z1, but I'm not sure how to confirm this, is there a command line way to tell?

One of the drives has apparently gone bad. First I tested the SATA connections and those appear to be fine, as when I swap cables between the bad drive and one of the good drives, the good drive still shows up correctly and the bad drive does not.

Here is the zpool status output:

And when I attempt to zpool import:

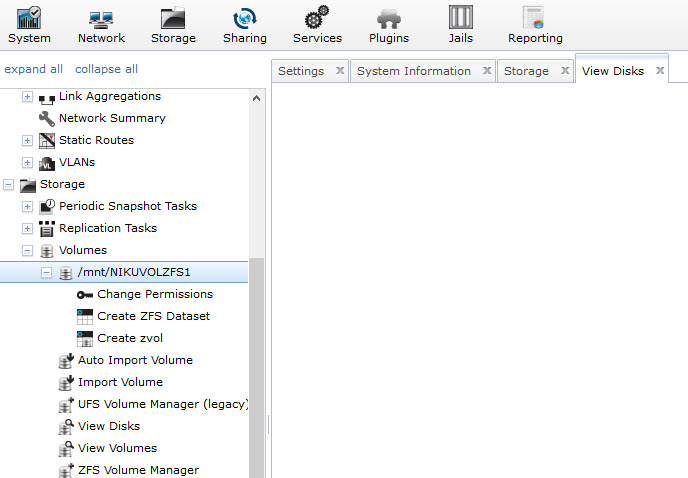

So after looking at the user manual and searching these forums, it looks like I need to use the GUI and replace the drive. However in my case, since the drive went bad, it appears that I can't even view the disks at all right now. My volume is still listed in the UI, but when I navigate to "View Disks" nothing shows up:

Is there a command line way to replace a failed drive instead? Or, do I have to do something first to get the pool back "online" for the disks to show back up in the UI so that I can select the bad one and replace it?

Thanks in advance!

First off, the specs. Let me know what other info would be useful and I'll include it!

Build: FreeNAS-9.2.1.9-RELEASE-x64

Platform: AMD Sempron(tm) 145 Processor

RAM: 20 GB

4x 4TB drives in a ZFS pool. I think it is a RAIDZ1, but I'm not sure how to confirm this. Is there a command line way to tell?

One of the drives has apparently gone bad. I first tested the SATA connections and those appear to be fine: when I swap cables between the bad drive and one of the good drives, the good drive still shows up correctly and the bad drive does not.

Here is the zpool status output:

Code:

    [root@freenas ~]# zpool status -v
    no pools available

And when I attempt to zpool import:

Code:

    [root@freenas ~]# zpool import
       pool: NIKUVOLZFS1
         id: 7711231493565674790
      state: UNAVAIL
     status: One or more devices are missing from the system.
     action: The pool cannot be imported. Attach the missing
            devices and try again.
       see: http://illumos.org/msg/ZFS-8000-6X
     config:

            NIKUVOLZFS1                                     UNAVAIL  missing device
              gptid/e5d8a4d0-0269-11e5-a419-bc5ff4ea1a9e    ONLINE
              gptid/e6749646-0269-11e5-a419-bc5ff4ea1a9e    ONLINE
              gptid/e70f2752-0269-11e5-a419-bc5ff4ea1a9e    ONLINE

            Additional devices are known to be part of this pool, though their
            exact configuration cannot be determined.

So after looking at the user manual and searching these forums, it looks like I need to use the GUI and replace the drive. However in my case, since the drive went bad, it appears that I can't even view the disks at all right now. My volume is still listed in the UI, but when I navigate to "View Disks" nothing shows up:

Is there a command line way to replace a failed drive instead? Or, do I have to do something first to get the pool back "online" for the disks to show back up in the UI so that I can select the bad one and replace it?
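On the earlier question of how to tell the layout from the command line: in `zpool status` and `zpool import` listings, redundancy groups normally appear as their own line (for example `raidz1-0` or `mirror-0`) with the member disks listed beneath them. Below is a minimal sketch, in Python, that scans the config section pasted above for such lines. The names `CONFIG`, `GROUP_RE`, and `summarize_config` are made up for illustration, and the indentation in `CONFIG` is a reconstruction. Since the output itself says the exact configuration cannot be determined, a missing group line is only a hint, not proof of the layout.

```python
import re

# Config section copied from the `zpool import` output in this post.
# The whitespace is reconstructed; the parser below does not depend on it.
CONFIG = """\
NIKUVOLZFS1                                     UNAVAIL  missing device
  gptid/e5d8a4d0-0269-11e5-a419-bc5ff4ea1a9e    ONLINE
  gptid/e6749646-0269-11e5-a419-bc5ff4ea1a9e    ONLINE
  gptid/e70f2752-0269-11e5-a419-bc5ff4ea1a9e    ONLINE
"""

# Redundancy groups show up with names such as "raidz1-0" or "mirror-0".
GROUP_RE = re.compile(r"^(raidz[123]?|mirror)-\d+$")


def summarize_config(text):
    """Return (group_names, device_names) found below the pool line."""
    groups, devices = [], []
    lines = [ln for ln in text.splitlines() if ln.strip()]
    for line in lines[1:]:  # the first non-empty line is the pool itself
        name = line.split()[0]
        if GROUP_RE.match(name):
            groups.append(name)
        else:
            devices.append(name)
    return groups, devices


groups, devices = summarize_config(CONFIG)
print("redundancy groups:", groups or "none listed")
print("devices listed:", len(devices))
```

If a `raidz1-0` line were present, it would show up in `groups`; here only the individual `gptid/...` entries are found.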

Thanks in advance!