Fei (Explorer, joined Jan 13, 2014, 80 messages)

Hi

I use FreeNAS 9.3.1 and another ZFS system (Nexenta), and I found a big problem: with the same 4TB hard drives, their available space is different. The difference is about 2TB (1.6T as reported by `zfs list`). Why?

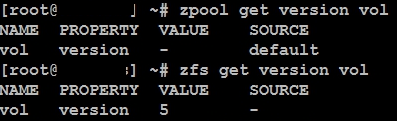

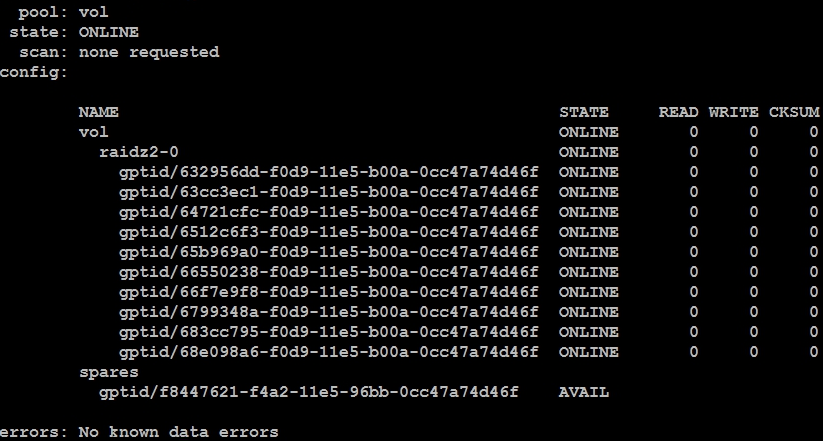

FreeNAS:

ZFS version: 5

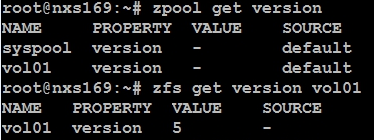

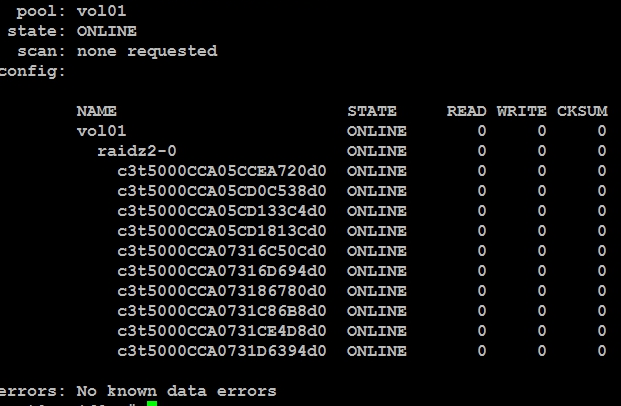

Other system (Nexenta):

ZFS version: 5

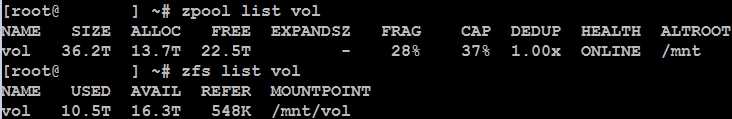

FreeNAS:

`zpool list` (raw capacity): 36.2T

`zfs list` (available space): 26.8T

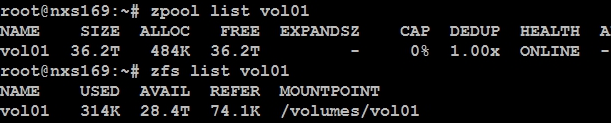

Other system (Nexenta):

`zpool list` (raw capacity): 36.2T

`zfs list` (available space): 28.4T
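Working the figures above through as a quick check: the numbers are copied from the post, and the conversion assumes the "T" printed by `zpool list` and `zfs list` is a binary unit (2^40 bytes), which is how the ZFS tools format sizes.

```python
RAW_T = 36.2                                  # zpool list SIZE, same on both systems
AVAIL_T = {"FreeNAS": 26.8, "Nexenta": 28.4}  # zfs list AVAIL from the post
TIB_TO_TB = 1024**4 / 1000**4                 # ZFS "T" is 2**40 bytes

for name, avail in AVAIL_T.items():
    # Share of the raw pool size that is reported as usable space.
    print(f"{name}: {avail}T available, {avail / RAW_T:.1%} of raw")

diff = AVAIL_T["Nexenta"] - AVAIL_T["FreeNAS"]
print(f"difference: {diff:.1f}T = {diff * TIB_TO_TB:.2f} TB (decimal)")
```

FreeNAS exposes about 74.0% of the raw size and Nexenta about 78.5%. The 1.6T gap is about 1.76 TB in decimal units, which matches the "about 2TB" estimate.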

FreeNAS:

10 × 4TB hard drives in a single raidz2 group

Other system (Nexenta):

10 × 4TB hard drives in a single raidz2 group
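One factor that can make identical raidz2 layouts report different usable space is the sector size (ashift) each pool was created with, because raidz pads every allocation. The post does not report ashift, so the values 9 and 12 below are assumptions for illustration, not readings from these pools. `raidz_asize` is a hypothetical Python re-creation of my understanding of the arithmetic in ZFS's `vdev_raidz_asize()`. ZFS estimates usable space by applying the resulting ratio for a 128 KiB block. This sketch ignores the kernel's integer rounding and any reserved slop space, so it will not match `zfs list` exactly.

```python
RAW_T = 36.2             # zpool list SIZE from the post, in T
NDISKS, NPARITY = 10, 2  # 10 disks, raidz2
BLOCK = 128 * 1024       # block size assumed for the space estimate


def raidz_asize(psize: int, ashift: int, ndisks: int, nparity: int) -> int:
    """Return bytes allocated on a raidz vdev for one psize-byte block."""
    sector = 1 << ashift
    # Data sectors needed for the block (ceiling division).
    data = (psize + sector - 1) // sector
    # nparity parity sectors for every row of (ndisks - nparity) data sectors.
    rows = (data + ndisks - nparity - 1) // (ndisks - nparity)
    total = data + nparity * rows
    # Round up to a multiple of (nparity + 1) sectors; this is the padding.
    pad_unit = nparity + 1
    total = (total + pad_unit - 1) // pad_unit * pad_unit
    return total * sector


print(f"naive (8 of 10 disks): {RAW_T * (NDISKS - NPARITY) / NDISKS:.1f}T")
for ashift in (9, 12):
    eff = BLOCK / raidz_asize(BLOCK, ashift, NDISKS, NPARITY)
    print(f"ashift={ashift}: efficiency {eff:.3f}, about {RAW_T * eff:.1f}T before reservations")
```

Under these assumptions, the model gives about 28.9T for ashift=9 and 27.6T for ashift=12 before reservations. That is a gap of roughly 1.3T, in the same direction and of the same order as the 1.6T difference above. It is a plausible explanation, not a confirmed one.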
