Hi, I'm new to TrueNAS and have just set it up. So far I'm pretty disappointed with the performance, but there's a good chance it's a misconfiguration on my side (hopefully).

I did trawl the forum and other websites for information quite a bit and found similar threads, but the solutions haven't helped me so far. At this point it feels like I'm just wasting time because I'm not exactly sure what I'm looking for. Maybe there's something obviously wrong to an experienced eye...

I think my setup is fairly straightforward:

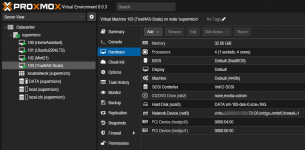

- Supermicro X10SDV-TP8F (XEON D-1518)

- Proxmox 8.0.3 Host (on SSD)

- VM for TrueNAS Scale (latest version) with 4 cores and 32GB RAM

- Broadcom SAS3008 Controller HBA (IT mode), pass-through to VM

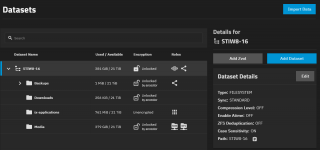

- 4x 8TB Seagate IronWolf in a VDEV: 1 x RAIDZ1 | 4 wide | 7.28 TiB

- Main Dataset with Media sub dataset and SMB share

- Compression turned off

- Dedupe off

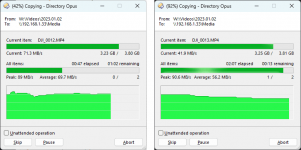

I'm not running apps or VMs within TrueNAS, it's just storage. My issue is read and write speed over SMB (I haven't tried any other protocol):

a) TrueNAS SMB share mounted on Synology DS214+ (DSM7)

Copying large video files (3+ GB) to TrueNAS starts at the expected 70-100 MB/s, but drops to about a third of that after approx. 2-3 GB and stays low, while the CPU shoots up to 100%.

b) Copying the same file back from TrueNAS to Synology only does 10-15 MB/s from the beginning!

c) Copying PC > Synology via SMB: steady 80-100 MB/s

d) Copying Synology > PC via SMB: steady 80-100 MB/s

So I would say it's not the PC and not the Synology; it's something on the TrueNAS system. Even though the Xeon D-1518 is a bit older, it should easily match my old DS214+ Synology, which doesn't have any speed issues.
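To put numbers on where the drop in a) happens, the copy could be timed in fixed-size windows instead of reading the speed off a file manager. Below is a minimal Python sketch, assuming the TrueNAS share is mounted as a normal path on the machine running the script. The function name `copy_with_throughput` and the example paths are hypothetical, and this is not something that was run on the setup above.

```python
import time


def copy_with_throughput(src, dst, window_bytes=256 * 1024 * 1024,
                         chunk_bytes=4 * 1024 * 1024):
    """Copy src to dst and return a list of MB/s values, one per window."""
    rates = []
    window_done = 0
    window_start = time.perf_counter()
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(chunk_bytes)
            if not chunk:
                break
            fout.write(chunk)
            window_done += len(chunk)
            if window_done >= window_bytes:
                # Close the current window and record its average rate.
                elapsed = time.perf_counter() - window_start
                rates.append(window_done / 1e6 / max(elapsed, 1e-9))
                window_done = 0
                window_start = time.perf_counter()
    if window_done:
        # Record the final, partially filled window.
        elapsed = time.perf_counter() - window_start
        rates.append(window_done / 1e6 / max(elapsed, 1e-9))
    return rates


# Hypothetical usage (paths are placeholders):
# for i, rate in enumerate(copy_with_throughput("/data/video.mkv",
#                                               "/mnt/truenas/media/video.mkv")):
#     print(f"window {i}: {rate:.1f} MB/s")
```

Note that the client OS may buffer writes, so the first windows can look faster than what actually reaches the disks. The per-window numbers are still useful for seeing roughly at which point the rate falls off.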

I've attached some screenshots. Help would be much appreciated.
