vii (Dabbler, joined Aug 17, 2023, 13 messages)

Hello, I purchased two used SSD drives and configured them as a RAID mirror. However, I have noticed several times that one of the drives shows a faulted state.

SAMSUNG_MZ7WD960HAGP-00003

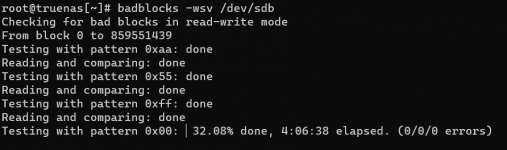

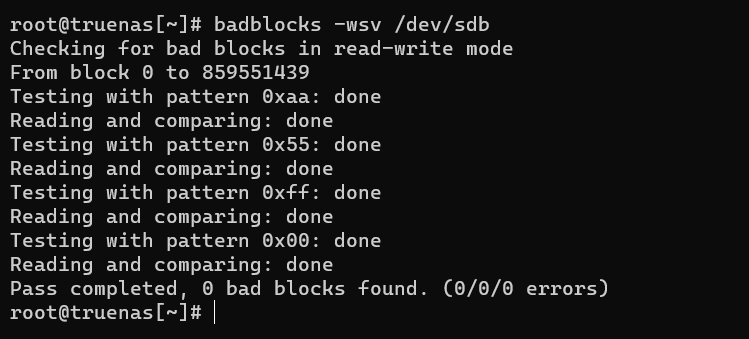

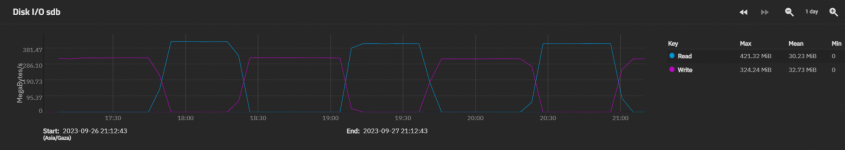

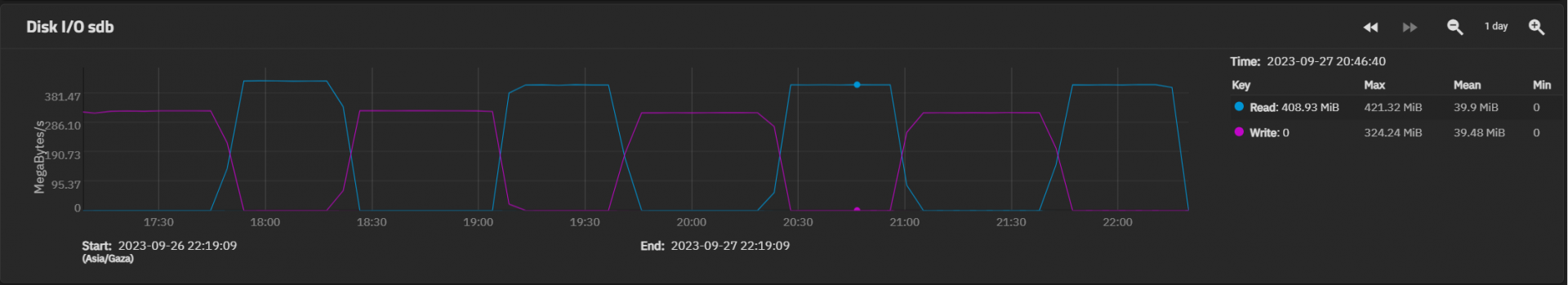

I detached the drive and ran the following command:

badblocks -wsv /dev/sdb

The SMART test does not report any problems either. The drives have about 50k hours of use, but badblocks did not find a single bad block. :/

I have changed the SATA cable several times, but the problem persists. Sometimes, during a transfer, the speed drops to zero and hangs for about a minute. When I check the log, it shows read and write errors on this one device only, sdb.
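To put a number on "errors on this device only", the log lines can be counted per device. The following is a minimal sketch, not a definitive diagnostic: the helper name `count_io_errors` is hypothetical, and it assumes the kernel log uses the common Linux wording `I/O error, dev sdb, ...`, which may differ on other systems.

```shell
#!/bin/sh
# Hypothetical helper: count log lines on stdin that report an I/O error
# for one block device. Assumes the Linux kernel wording
# "I/O error, dev <name>, ..."; adjust the pattern if your log differs.
count_io_errors() {
    dev="$1"
    # grep -c prints the number of matching lines; it exits non-zero when
    # there are no matches, so "|| true" keeps the function from failing.
    # The trailing [, ] avoids matching partitions such as sdb1.
    grep -c "I/O error, dev ${dev}[, ]" || true
}
```

For example, `dmesg | count_io_errors sdb` followed by `dmesg | count_io_errors sda` would show whether the errors really are confined to sdb.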