Minxster (Dabbler, joined Sep 27, 2013, 36 messages)

Specs (already purchased and built):

Supermicro Motherboard: X11SPM-TF for single Socket LGA3647 CPU

CPU: Intel Xeon Silver 4108, 1.8GHz, socket LGA3647 (Scalable), 8 cores, 16 threads, DDR4-2400

64GB RAM: 2x SAMSUNG 32GB DDR4-2400 LRDIMM ECC Registered CL17 Dual Rank

NIC: onboard dual 10GbE

HBA card: Broadcom (Avago, LSI) 9305-16i, 16 ports, latest firmware

Hard drives: 16 x HGST He10 10TB SATA, 4Kn, 220MB/s

Boot device: Samsung 960 EVO NVMe M.2 250GB, PCIe 3.0

FreeNAS: 11.1-U5

Array layout: planning to run either 2 x 8 RAIDZ2 or 8 x 2 mirrors (RAID 10). For now I'm focusing on the mirror setup.
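As a rough yardstick for these disks, here is a rule-of-thumb sketch of idealised sequential ceilings for the two candidate layouts. It assumes every disk sustains its rated 220MB/s and ignores ZFS overhead, fragmentation and HBA/PCIe limits; the mirror model also assumes reads are balanced perfectly across both sides of each mirror, which real pools only approximate. The function names are hypothetical.

```python
from __future__ import annotations


def mirror_ceiling(vdevs: int, width: int, disk_mbps: float) -> tuple[float, float]:
    """Idealised sequential (write, read) MB/s for `vdevs` mirror vdevs of `width` disks.

    Every write lands on all members of a mirror, so each vdev writes at one
    disk's speed; reads can be spread across all members.
    """
    write = vdevs * disk_mbps
    read = vdevs * width * disk_mbps
    return write, read


def raidz_ceiling(vdevs: int, width: int, parity: int, disk_mbps: float) -> float:
    """Idealised streaming MB/s for RAIDZ: only the data disks carry payload."""
    return vdevs * (width - parity) * disk_mbps
```

With the numbers from this build, `mirror_ceiling(8, 2, 220)` gives (1760, 3520) MB/s and `raidz_ceiling(2, 8, 2, 220)` gives 2640 MB/s. These are upper bounds for large sequential transfers, not predictions.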

This is not my first build, but it is the first one where I want to push performance as far as I can.

So, to the problem: the server has come together well, but I'm seeing serious performance problems. Before I start looking into NIC performance, I've been running `dd` tests to benchmark the pool itself in the RAID 10 layout.
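For scripting repeat runs, the `dd` write/read pair can be approximated in Python. This is a rough sketch, not the exact test used here; the function names and the `random_data` option are hypothetical additions. FreeNAS enables lz4 compression on new pools by default, and zero-filled blocks compress to almost nothing, so a `/dev/zero` write can overstate disk throughput; `random_data=True` reuses one random block instead, which lz4 cannot shrink. For the read pass, the file should be much larger than RAM (200 GiB against 64GB here) so it is not served from the ARC.

```python
from __future__ import annotations

import os
import time


def write_test(path: str, bs: int, count: int, random_data: bool = False) -> tuple[int, float]:
    """Roughly `dd if=/dev/zero of=<path> bs=<bs> count=<count>`.

    Returns (bytes_written, seconds). The file is fsync'd before the clock
    stops, so data still sitting in write buffers is counted.
    """
    # One block reused for every write: zeros by default (like /dev/zero),
    # or a single random block, which compression cannot shrink.
    block = os.urandom(bs) if random_data else bytes(bs)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())
    return bs * count, time.perf_counter() - start


def read_test(path: str, bs: int) -> tuple[int, float]:
    """Roughly `dd if=<path> of=/dev/null bs=<bs>`: sequential read to EOF."""
    total = 0
    start = time.perf_counter()
    # Unbuffered, so each read() is a single read syscall of up to `bs` bytes.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(bs)
            if not chunk:
                break
            total += len(chunk)
    return total, time.perf_counter() - start
```

For example, `n, secs = write_test("/mnt/tank/ddtest.bin", 2 * 1024**2, 100 * 1024)` mirrors the `bs=2048k count=100k` (200 GiB) run, where `/mnt/tank/ddtest.bin` is a placeholder path; throughput in MiB/s is `n / secs / 1024**2`.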

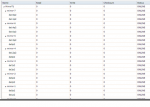

Here are the results with the 8 x 2 mirrored setup:

| File type | Block size | Count | Total size (GiB) | Operation | Time (s) | Bytes/s | MiB/s | GiB/s |
|---|---|---|---|---|---|---|---|---|
| zero | 2048k | 100k | 200 | write | 133.3 | 1610142624 | 1535.6 | 1.5 |
| zero | 2048k | 100k | 200 | read | 283.2 | 758075548 | 723.0 | 0.7 |
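The derived columns above can be re-checked from the raw `dd` figures. A minimal sketch, assuming FreeBSD `dd` semantics where the `k` suffix means 1024, and treating the "mbytes"/"gbytes" columns as binary MiB/GiB, which is what the numbers work out to. The helper names are hypothetical.

```python
KIB = 1024
MIB = 1024 ** 2
GIB = 1024 ** 3


def total_bytes(bs_kib: int, count: int) -> int:
    """Bytes moved by `dd bs=<bs_kib>k count=<count>` (k = 1024)."""
    return bs_kib * KIB * count


def to_mib_s(bytes_per_sec: float) -> float:
    return bytes_per_sec / MIB


def to_gib_s(bytes_per_sec: float) -> float:
    return bytes_per_sec / GIB


def elapsed_seconds(nbytes: int, bytes_per_sec: float) -> float:
    """Elapsed time implied by dd's byte count and its bytes/sec figure."""
    return nbytes / bytes_per_sec


size = total_bytes(2048, 100 * 1024)  # bs=2048k count=100k
for op, bps in (("write", 1610142624), ("read", 758075548)):
    print(f"{op}: {size / GIB:.0f} GiB, {elapsed_seconds(size, bps):.2f} s, "
          f"{to_mib_s(bps):.1f} MiB/s, {to_gib_s(bps):.1f} GiB/s")
```

The implied times come out at about 133.37 s and 283.28 s, consistent with the truncated 133.3 and 283.2 in the table.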

I've checked the CPU and it's barely working, and even the busy % during reads only sits at around 20%.

For the life of me I can't see why this is so slow, or what the bottleneck is.

Does anyone with experience of this hardware have any idea what's happening?
