jyavenard


Introduction

I found myself in need of a NAS solution: something to store all my files and provide a high level of safety and redundancy.

ZFS was always going to be my choice of filesystem; it works well, provides lots of useful features (especially snapshots) and is very reliable.

I looked at the existing professional solutions, but none of them provided the flexibility I was after.

FreeNAS' backer iXsystems has an interesting FreeNAS Mini, but it only allows a maximum of four disks, and their professional solutions were just outside my budget.

It's been a while since I last ran ZFS benchmarks (check the zfs-raidz-benchmarks I wrote in 2009).

So I'm at it again.

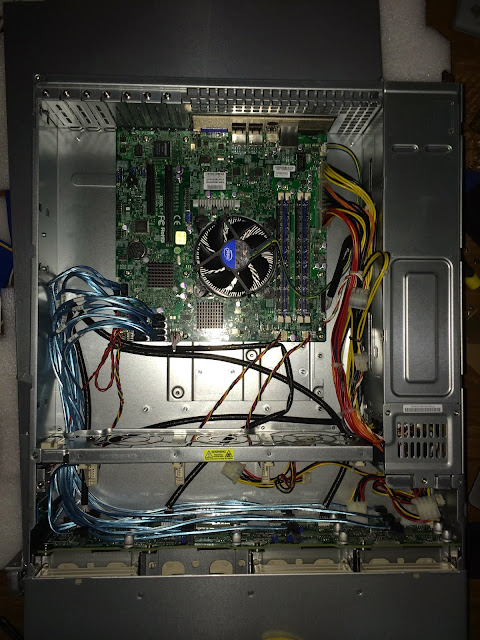

Hardware Setup

- Supermicro SC826TQ-500LPB 12-bay chassis

- Supermicro X10SL7-F motherboard

- Intel E3-1220v3 processor

- 32GB RAM (4 x 8GB Kingston DDR3 1600MHz ECC KVR16E11/8EF)

- 6 x WD RED 4TB

Description

The chassis comes with 500W platinum-rated redundant power supplies; they are rated at 94% efficiency at 20% load and 88% at 10% load. Even with 12 disks, it won't ever go over 25% load, so this power supply is overkill, but it's the smallest Supermicro has.

The X10SL7-F has 6 onboard SATA connectors plus an LSI 2308 SAS2 controller with 8 SAS/SATA ports.

ZFS shouldn't run on top of a hardware RAID controller; it defeats the purpose of ZFS. The LSI was flashed with IT firmware, making the 2308 a plain HBA.

The plan was to use RAIDZ2 (the ZFS equivalent of RAID6), which provides redundancy for two simultaneous disk failures. RAIDZ2 with six 4TB disks would give me 16TB (14TiB) of available space.
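The capacity figure can be checked with a few lines of Python. This is only a sketch of the nominal arithmetic (data disks times disk size); it ignores ZFS metadata, padding and reserved space, so the space actually reported by the pool will be somewhat lower. The function name is mine, not something from ZFS.

```python
def raidz_usable_bytes(disks: int, disk_bytes: int, parity: int) -> int:
    """Nominal usable capacity of one RAIDZ vdev, ignoring filesystem overhead."""
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_bytes


TB = 10**12   # decimal terabyte, as used on drive labels
TIB = 2**40   # binary tebibyte, as reported by most tools

# Six 4TB drives in RAIDZ2 (two parity devices)
usable = raidz_usable_bytes(disks=6, disk_bytes=4 * TB, parity=2)
print(f"{usable / TB:.1f} TB = {usable / TIB:.2f} TiB")  # 16.0 TB = 14.55 TiB
```

Calling the same function with `disks=12` gives the nominal figure for a single 12-disk RAIDZ2 vdev, although extending later with six more disks would more likely mean adding a second six-disk vdev.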

The system could later be extended with six more disks. As this is going to be used as a MythTV storage centre, I estimate that it will reach capacity in just one year (though MythTV perfectly handles auto-deleting low-priority recordings).

The choice came down to the new Seagate NAS drives and the WD Red. My primary concerns were power consumption and noise: the WD, being 5400rpm drives, win power-wise, but the Seagate are a tiny bit quieter. The AnandTech review also found that IOPS on the WD Red were slightly better. That and the lower power consumption made me go for the WD, the noise difference being minimal.

While I like to fiddle with computers, I'm not as young as I used to be, and as such I wanted something that would be easy to use and configure: so my plan was to use FreeNAS.

FreeNAS is a FreeBSD-based distribution that makes everything web configurable. It's still not for the absolute noob, and requires that you have a good understanding of the underlying file system: ZFS.

FreeNAS runs off a USB flash drive in read-only mode, and lets you install all of the FreeBSD ports in a jail residing in the ZFS partitions.

Hiccups

My plans became slightly compromised once I put everything together and realised how noisy that setup was. The Supermicro chassis being enterprise-grade, its only concern is to keep everything cool. But damn, that thing is noisy: no way I could ever have this in any room or office.

There's nothing in the motherboard BIOS allowing you to change the fan speed. The IPMI web access lets you choose the fan speed mode, but the choice ends up being between "Normal", which wouldn't let anyone sleep, and "Heavy", which would for sure wake up the neighbours.

The fans on this motherboard are controlled by a Nuvoton NCT6776D. On Linux, the w83627ehf kernel module lets you control most of the PWM outputs, including the fan speed; unfortunately I found no equivalent on FreeBSD. So if I were to run FreeBSD I would have to use an external voltage regulator to slow the fans down: something that doesn't appeal to me.
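For illustration, here is roughly what fan control looks like on Linux once the sensor driver is loaded. It is a minimal sketch that relies on the standard hwmon sysfs files (`pwmN_enable` set to 1 for manual mode, `pwmN` holding a 0-255 duty cycle); the hwmon directory and the channel number in the usage comment are assumptions and will differ from board to board, and writing to them requires root.

```python
from pathlib import Path


def set_fan_duty(hwmon_dir: Path, channel: int, percent: float) -> int:
    """Put one PWM channel in manual mode and set its duty cycle.

    Returns the raw 0-255 value that was written.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    raw = round(percent * 255 / 100)
    # In the hwmon sysfs interface, pwmN_enable = 1 selects manual control
    (hwmon_dir / f"pwm{channel}_enable").write_text("1\n")
    (hwmon_dir / f"pwm{channel}").write_text(f"{raw}\n")
    return raw


# Hypothetical usage (path and channel are examples only):
# set_fan_duty(Path("/sys/class/hwmon/hwmon1"), channel=2, percent=40)
```

Taking the directory as a parameter keeps the function testable against a scratch directory before pointing it at real hardware.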

Also, this motherboard and chassis provide an SGPIO interface to control the SAS/SATA backplane and indicate the status of the drives. This is great for identifying which disk is faulty, as you can't always rely on the device name provided by the OS.

However, I connected all my drives to the LSI 2308 controller.

Despite my attempts, I couldn't get the front LEDs to show the status of the disks in FreeBSD, something I could easily do under Linux using the sas2ircu utility.
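As a rough idea of what that looks like, the sketch below wraps the `LOCATE` command of sas2ircu to blink the LED of a given bay. It assumes sas2ircu is installed and on the PATH; the controller, enclosure and bay numbers are placeholders that would normally be read from the output of `sas2ircu 0 DISPLAY`. The helper names are mine.

```python
import subprocess


def locate_command(controller: int, enclosure: int, bay: int, on: bool) -> list[str]:
    """Build the sas2ircu command line that turns a bay's locate LED on or off."""
    return [
        "sas2ircu",
        str(controller),
        "LOCATE",
        f"{enclosure}:{bay}",
        "ON" if on else "OFF",
    ]


def set_locate_led(controller: int, enclosure: int, bay: int, on: bool) -> None:
    """Run the command; raises CalledProcessError if sas2ircu reports a failure."""
    subprocess.run(locate_command(controller, enclosure, bay, on), check=True)


# Hypothetical usage (numbers are examples only):
# set_locate_led(controller=0, enclosure=2, bay=4, on=True)
```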

I like FreeBSD, and have always used it for servers, but its lack of hardware gimmicks like this started to annoy me. As part of my involvement in the MythTV project (www.mythtv.org), I have switched to Linux for all my home servers, and I've grown familiar with it over the years.

A few months ago, I would never have considered anything but FreeBSD, as I wanted to use ZFS; however, the ZFS On Linux (ZOL) project recently declared their drivers "ready for production". So could Linux be the solution?

So FreeBSD or Linux?

I ran various benchmarks; here are the results.



Benchmarks

lz4 compression was enabled on all ZFS partitions.

Intel NAS Performance Toolkit (via Windows and samba: gigabit link)

| Test Name | FreeNAS throughput (MB/s) | Ubuntu RAIDZ2 throughput (MB/s) | Difference | Ubuntu md+ext4 throughput (MB/s) |
|---|---|---|---|---|
| HDVideo_1Play | 93.402 | 102.626 | 9.88% | 101.585 |
| HDVideo_2Play | 74.331 | 95.031 | 27.85% | 101.153 |
| HDVideo_4Play | 66.395 | 95.931 | 44.49% | 99.255 |
| HDVideo_1Record | 104.369 | 87.922 | -15.76% | 208.868 |
| HDVideo_1Play_1Record | 63.991 | 97.156 | 51.83% | 96.807 |
| ContentCreation | 10.361 | 10.433 | 0.69% | 10.734 |
| OfficeProductivity | 51.627 | 56.867 | 10.15% | 11.405 |
| FileCopyToNAS | 56.42 | 50.427 | -10.62% | 55.226 |
| FileCopyFromNAS | 66.868 | 85.651 | 28.09% | 8.367 |
| DirectoryCopyToNAS | 5.692 | 16.812 | 195.36% | 13.356 |
| DirectoryCopyFromNAS | 19.127 | 27.29 | 42.68% | 0.638 |
| PhotoAlbum | 10.403 | 10.88 | 4.59% | 12.413 |
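The Difference figures are the relative change of the Ubuntu RAIDZ2 result against the FreeNAS result. A short sketch of that calculation, using two rows from the results above (the function name is mine):

```python
def percent_difference(baseline: float, candidate: float) -> float:
    """Relative change of candidate versus baseline, in percent."""
    return (candidate - baseline) / baseline * 100


# FreeNAS is the baseline, Ubuntu RAIDZ2 the candidate
print(f"{percent_difference(93.402, 102.626):.2f}%")  # HDVideo_1Play: 9.88%
print(f"{percent_difference(5.692, 16.812):.2f}%")    # DirectoryCopyToNAS: 195.36%
```

A positive value means Ubuntu with ZOL was faster than FreeNAS for that test; a negative one means it was slower.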

Bonnie++

FreeNAS 9.1

Bonnie++ version 1.97, machine "ports", size 64G

| Test | Result | % CPU | Latency |
|---|---|---|---|
| Sequential Output, Per Char | 197 K/sec | 99 | 55233us |
| Sequential Output, Block | 741314 K/sec | 74 | 117ms |
| Sequential Output, Rewrite | 522658 K/sec | 63 | 4771ms |
| Sequential Input, Per Char | 469 K/sec | 95 | 129ms |
| Sequential Input, Block | 1531762 K/sec | 68 | 760ms |
| Random Seeks | 612.6 /sec | 3 | 817ms |

Num Files: 16

| Test | Result (/sec) | % CPU | Latency |
|---|---|---|---|
| Sequential Create, Create | +++++ | +++ | 16741us |
| Sequential Create, Read | +++++ | +++ | 78us |
| Sequential Create, Delete | +++++ | +++ | 126us |
| Random Create, Create | 11537 | 36 | 145ms |
| Random Create, Read | +++++ | +++ | 23us |
| Random Create, Delete | 22031 | 71 | 92942us |

Ubuntu - ZOL 0.6.2

Bonnie++ version 1.97, machine "ubuntu", size 63G

| Test | Result | % CPU | Latency |
|---|---|---|---|
| Sequential Output, Per Char | 199 K/sec | 99 | 50051us |
| Sequential Output, Block | 1102305 K/sec | 91 | 60474us |
| Sequential Output, Rewrite | 686584 K/sec | 77 | 326ms |
| Sequential Input, Per Char | 498 K/sec | 98 | 79062us |
| Sequential Input, Block | 1539862 K/sec | 66 | 93147us |
| Random Seeks | 445.4 /sec | 10 | 133ms |

Num Files: 16

| Test | Result (/sec) | % CPU | Latency |
|---|---|---|---|
| Sequential Create, Create | +++++ | +++ | 20511us |
| Sequential Create, Read | +++++ | +++ | 236us |
| Sequential Create, Delete | +++++ | +++ | 252us |
| Random Create, Create | 30173 | 96 | 41664us |
| Random Create, Read | +++++ | +++ | 10us |
| Random Create, Delete | +++++ | +++ | 356us |

Ubuntu - MD - EXT4

Bonnie++ version 1.97, machine "ubuntu", size 63G

| Test | Result | % CPU | Latency |
|---|---|---|---|
| Sequential Output, Per Char | 1086 K/sec | 95 | 18937us |
| Sequential Output, Block | 137492 K/sec | 11 | 146ms |
| Sequential Output, Rewrite | 133601 K/sec | 7 | 634ms |
| Sequential Input, Per Char | 5758 K/sec | 85 | 23816us |
| Sequential Input, Block | 575418 K/sec | 15 | 86087us |
| Random Seeks | 571.8 /sec | 7 | 141ms |

Num Files: 16

| Test | Result (/sec) | % CPU | Latency |
|---|---|---|---|
| Sequential Create, Create | 29852 | 0 | 47us |
| Sequential Create, Read | +++++ | +++ | 223us |
| Sequential Create, Delete | +++++ | +++ | 227us |
| Random Create, Create | +++++ | +++ | 39us |
| Random Create, Read | +++++ | +++ | 17us |
| Random Create, Delete | +++++ | +++ | 35us |

iozone

This NAS will mostly deal with very big files, so let's specifically test those (ext4 performs especially poorly here).

Started with: iozone -o -c -t 8 -r 128k -s 4G
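For reference, in that command line -o makes writes synchronous, -c includes close() in the timings, -t 8 runs the throughput test with eight workers, -r 128k sets the record size and -s 4G the size of each worker's file. The sketch below only does the arithmetic on those options: it compares the total amount of data touched with the installed memory, which may help explain the read figures further down. It assumes the 32GB of RAM from the hardware list counts as 32GiB; the function name is mine.

```python
GIB = 2**30


def working_set_ratio(workers: int, file_bytes: int, ram_bytes: int) -> float:
    """Total data touched by all workers, as a fraction of installed RAM."""
    return workers * file_bytes / ram_bytes


# -t 8 and -s 4G from the iozone command line, 32GB of RAM from the hardware list
ratio = working_set_ratio(workers=8, file_bytes=4 * GIB, ram_bytes=32 * GIB)
print(f"working set is {ratio:.2f}x the installed RAM")  # working set is 1.00x the installed RAM
```

With a working set no larger than the RAM, a good share of the reads can be served from memory rather than from the disks, so a larger -s or more workers would probably be needed to measure the disks themselves.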

| Title | FreeNAS (KB/s) | Ubuntu ZOL 0.6.2 (KB/s) | Ubuntu md+ext4 (KB/s) |
|---|---|---|---|
| Children see throughput for 8 initial writers | 38100.27 | 38065.71 | 6141.23 |
| Parent sees throughput for 8 initial writers | 38096.5 | 37892.05 | 6140.56 |
| Min throughput per process | 4762.32 | 4749.14 | 767.58 |
| Max throughput per process | 4763.04 | 4769.23 | 767.74 |
| Avg throughput per process | 4762.53 | 4758.21 | 767.65 |
| Min xfer | 4193664.00 KB | 4176640.00 KB | 4193536.00 KB |
| Children see throughput for 8 rewriters | 36189.47 | 36842.59 | 5938.99 |
| Parent sees throughput for 8 rewriters | 36189.12 | 36842.26 | 5938.93 |
| Min throughput per process | 4523.49 | 4602.7 | 742.25 |
| Max throughput per process | 4524.1 | 4609 | 742.52 |
| Avg throughput per process | 4523.68 | 4605.32 | 742.37 |
| Min xfer | 4193792.00 KB | 4188672.00 KB | 4192896.00 KB |
| Children see throughput for 8 readers | 4755369.06 | 4219519.44 | 541271.02 |
| Parent sees throughput for 8 readers | 4743778.25 | 4219187.22 | 540861.93 |
| Min throughput per process | 593043.56 | 527155.69 | 58198.66 |
| Max throughput per process | 596547.44 | 527774.56 | 71080.23 |
| Avg throughput per process | 594421.13 | 527439.93 | 67658.88 |
| Min xfer | 4169728.00 KB | 4189696.00 KB | 3438592.00 KB |
| Children see throughput for 8 re-readers | 4421317.25 | 4648511.62 | 4961596.72 |
| Parent sees throughput for 8 re-readers | 4416015.2 | 4648083.46 | 4593093.04 |
| Min throughput per process | 539363.12 | 580726.5 | 31874.36 |
| Max throughput per process | 558968.81 | 581585 | 4619527.5 |
| Avg throughput per process | 552664.66 | 581063.95 | 620199.59 |
| Min xfer | 4048000.00 KB | 4188288.00 KB | 29184.00 KB |
| Children see throughput for 8 reverse readers | 5082555.84 | 1929863.77 | 9773067.07 |
| Parent sees throughput for 8 reverse readers | 4955348.81 | 1898050.51 | 9441166.11 |
| Min throughput per process | 426778 | 183929.8 | 7041.22 |
| Max throughput per process | 991416.19 | 381972.75 | 9710407 |
| Avg throughput per process | 635319.48 | 241232.97 | 1221633.38 |
| Min xfer | 1879040.00 KB | 2075008.00 KB | 3072 |
| Children see throughput for 8 stride readers | 561014.62 | 179665.19 | 11963150.31 |
| Parent sees throughput for 8 stride readers | 559886.81 | 179420.54 | 11030888.26 |
| Min throughput per process | 57737.73 | 19092.56 | 11788.88 |
| Max throughput per process | 107340.9 | 36176.44 | 9238066 |
| Avg throughput per process | 70126.83 | 22458.15 | 1495393.79 |
| Min xfer | 2268288.00 KB | 2221312.00 KB | 5376 |
| Children see throughput for 8 random readers | 209240.16 | 93627.64 | 13201790.94 |
| Parent sees throughput for 8 random readers | 209234.74 | 93625.12 | 12408594.53 |
| Min throughput per process | 25897.38 | 11702.19 | 72349.58 |
| Max throughput per process | 27949.81 | 11704.4 | 9059793 |
| Avg throughput per process | 26155.02 | 11703.45 | 1650223.87 |
| Min xfer | 3886464.00 KB | 4193536.00 KB | 34688 |
| Children see throughput for 8 mixed workload | 91072.29 | 24038.27 | Too Slow, Cancelled |
| Parent sees throughput for 8 mixed workload | 17608.63 | 23877.52 | |
| Min throughput per process | 2305.94 | 2990.74 | |
| Max throughput per process | 20461.23 | 3021.75 | |
| Avg throughput per process | 11384.04 | 3004.78 | |
| Min xfer | 472704.00 KB | 4151296.00 KB | |
| Children see throughput for 8 random writers | 36929.77 | 37391.58 | |
| Parent sees throughput for 8 random writers | 36893.05 | 36944.62 | |
| Min throughput per process | 4615.37 | 4643.6 | |
| Max throughput per process | 4617.82 | 4704.09 | |
| Avg throughput per process | 4616.22 | 4673.95 | |
| Min xfer | 4192128.00 KB | 4140416.00 KB | |
| Children see throughput for 8 pwrite writers | 37133.23 | 36726.14 | |
| Parent sees throughput for 8 pwrite writers | 37131.31 | 36549.34 | |
| Min throughput per process | 4641.49 | 4575.27 | |
| Max throughput per process | 4641.97 | 4600.88 | |
| Avg throughput per process | 4641.65 | 4590.77 | |
| Min xfer | 4193920.00 KB | 4171008.00 KB | |
| Children see throughput for 8 pread readers | 4943880.5 | 4806370.25 | |
| Parent sees throughput for 8 pread readers | 4942716.33 | 4805915.05 | |
| Min throughput per process | 617373.75 | 595302.62 | |
| Max throughput per process | 619556.38 | 603041.88 | |
| Avg throughput per process | 617985.06 | 600796.28 | |
| Min xfer | 4179968.00 KB | 4140544.00 KB | |

Phoronix Test Suite

Results are available here (xml result file here)

Comparison including md+ext4 raid6 here

Conclusions

Ignoring some of the nonsensical figures reported by the benchmarks above, which indicate pure cache effects, the ZFS On Linux drivers are doing extremely well, and Ubuntu manages on average to surpass FreeBSD. That was a surprise.
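One crude way to spot those cache-dominated figures is to compare a reported throughput with what the disks could plausibly stream. The sketch below does that for two of the iozone results; the 150 MB/s per-disk figure is an assumption of mine rather than a measurement, and four data disks is simply six disks minus the two RAIDZ2 parity devices.

```python
def exceeds_disk_ceiling(throughput_kb_s: float, data_disks: int, per_disk_mb_s: float) -> bool:
    """True when a reported throughput is higher than the disks could plausibly stream."""
    ceiling_kb_s = data_disks * per_disk_mb_s * 1024
    return throughput_kb_s > ceiling_kb_s


# Assumed, not measured: 150 MB/s sequential per disk, 4 data disks in a 6-disk RAIDZ2
print(exceeds_disk_ceiling(4755369.06, data_disks=4, per_disk_mb_s=150))  # FreeNAS, 8 readers
print(exceeds_disk_ceiling(38100.27, data_disks=4, per_disk_mb_s=150))    # FreeNAS, 8 initial writers
```

Any result flagged this way says more about RAM and caching than about the disks or the filesystem.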

Maybe it's time to port FreeNAS to use Linux as its kernel? That would be a worthy project.

Sorry, the tables don't render very well here. I created a blog entry there:

http://jyavariousideas.blogspot.com.au/2013/11/zfs-raidz-benchmarks-part-2.html