Hello all,

Let me start off by saying that this community has been incredibly helpful in my FreeNAS journey, and I can't thank y'all enough.

Background:

- I have a FreeNAS SAN box set up right now with 6 mirrored vdevs.

- The FreeNAS box has 6 Ethernet ports.

- It is sharing out one 8 TB zvol via iSCSI (currently over just one port, as a test).

- I have 2 SAN switches

- 3 ESXi Hosts

Network setup:

- I plan to have 3 Ethernet connections going from the FreeNAS SAN to each SAN switch.

- Each SAN switch will have 2 connections to each ESXi host. Ex: SAN switch 1 will have 2 connections each to esxi-1, esxi-2, and esxi-3.
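
To make the planned cabling concrete, here is a rough sketch that only enumerates the links described above. The switch and host names are placeholders I made up for illustration, and it says nothing about how the portals or initiators should be configured.

```python
# Placeholder names for illustration only; they are not real hostnames.
SWITCHES = ["san-switch-1", "san-switch-2"]
HOSTS = ["esxi-1", "esxi-2", "esxi-3"]

NAS_LINKS_PER_SWITCH = 3   # FreeNAS -> each SAN switch
HOST_LINKS_PER_SWITCH = 2  # each ESXi host -> each SAN switch


def planned_links():
    """Return (endpoint, switch, link_number) tuples for the planned cabling."""
    links = []
    for switch in SWITCHES:
        for n in range(1, NAS_LINKS_PER_SWITCH + 1):
            links.append(("freenas", switch, n))
        for host in HOSTS:
            for n in range(1, HOST_LINKS_PER_SWITCH + 1):
                links.append((host, switch, n))
    return links


def links_for(endpoint):
    """Count how many cables terminate on a given endpoint."""
    return sum(1 for link in planned_links() if link[0] == endpoint)


if __name__ == "__main__":
    print("FreeNAS ports used:", links_for("freenas"))
    for host in HOSTS:
        print(host, "ports used:", links_for(host))
    print("Total cables:", len(planned_links()))
```

So the plan uses all 6 FreeNAS ports, and each ESXi host would need 4 ports dedicated to storage traffic.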

Questions:

I need advice on how to properly configure FreeNAS to handle this setup. I understand how to share an iSCSI zvol over one port to multiple ESXi hosts in VMware. But how do I set up the interfaces in FreeNAS to accommodate the network setup above?

I know I have to add all the interfaces to FreeNAS first. But do I need to add all those interfaces as portals? Do I need to create specific initiators? Right now I just have the wildcards and ALL set up. I'm just a bit confused. Please see the pics below for reference...

Also... I know LACP is not recommended for iSCSI, but would Failover or Load Balancing improve performance?

Thank you all so much in advance!!

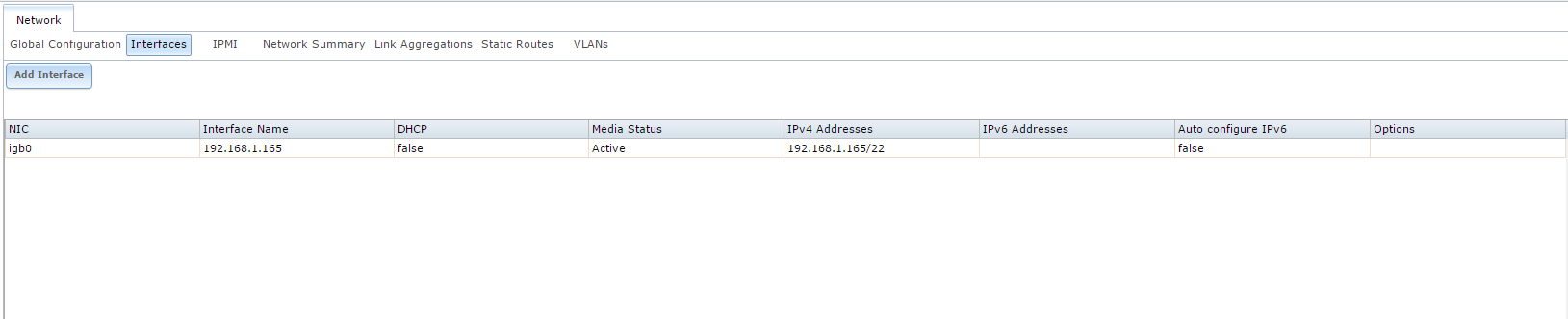

Interfaces:

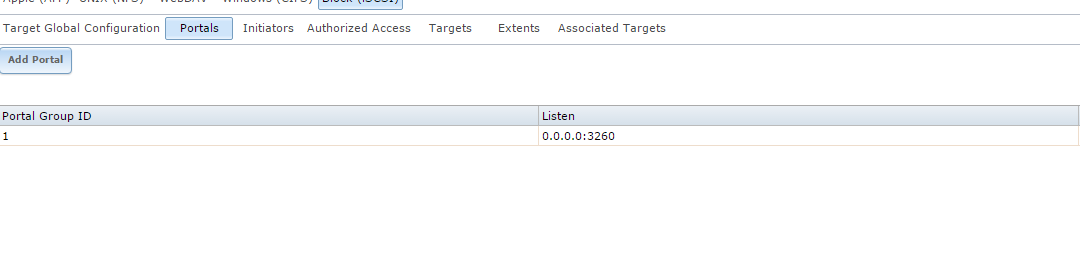

Portals:

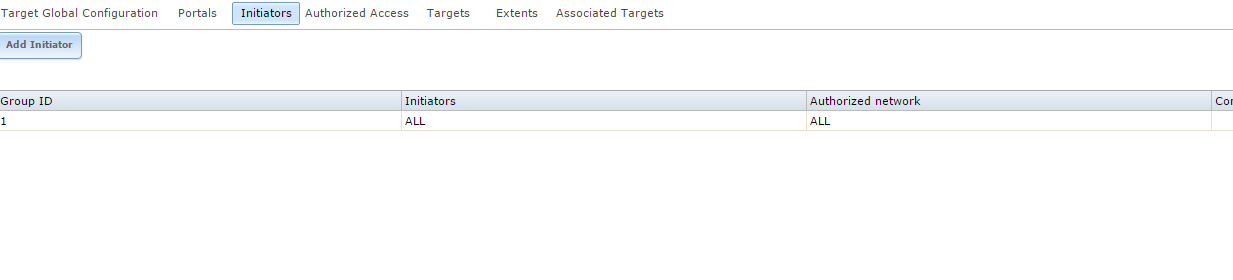

Initiators:

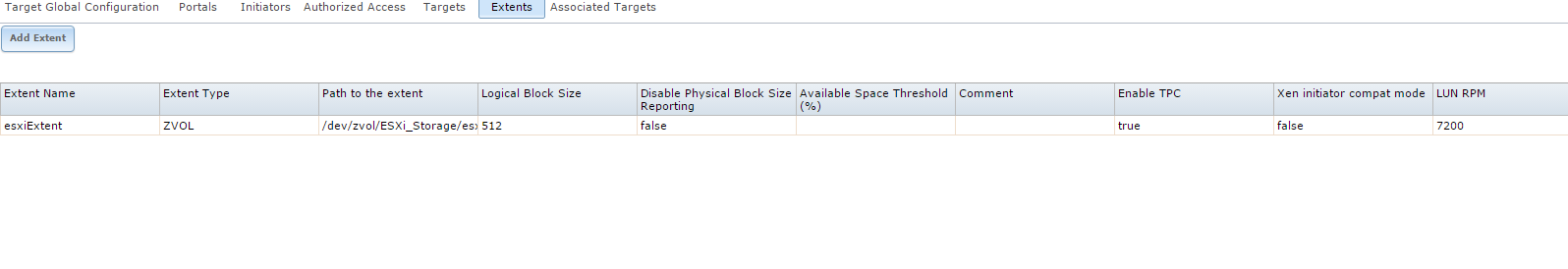

Extents:
