Todd Nine

Dabbler

- Joined: Nov 16, 2013

- Messages: 37

Hey all,

I recently migrated from an old volume made up of USB drives to a new volume I created on internal storage drives. My goal is to keep my system exactly the same and just move it to the new volume. I performed the following steps:

- Set up the new drives as a volume with the "internal" mount point

- Stop all sharing services and jails

- Rsync the data with this command:

/usr/local/bin/rsync -avzh --delete /mnt/origdisk/ /mnt/internal/

- Go to System -> System Dataset and change the system dataset pool from "origdisk" to the new "internal" volume

- Go to sharing, and change all paths from /mnt/origdisk to /mnt/internal

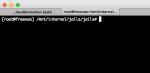

- Go to jails, and change all mount points from /mnt/origdisk to /mnt/internal

- Detach origdisk volume.

- Reboot to verify the system functions as expected