Hi,

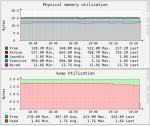

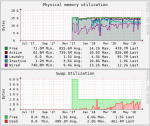

FreeNAS is using quite a lot of swap even though there is free memory. I want to understand what is going on. Where should I start?

Update: I should add that I am running FreeNAS 11.1-U5.

