Jason Keller

Explorer

- Joined

- Apr 2, 2015

- Messages

- 61

Sad as this is to say, compressed ARC is indeed my culprit. Upgrading to 11-RC on the box originally noted above, and ensuring that

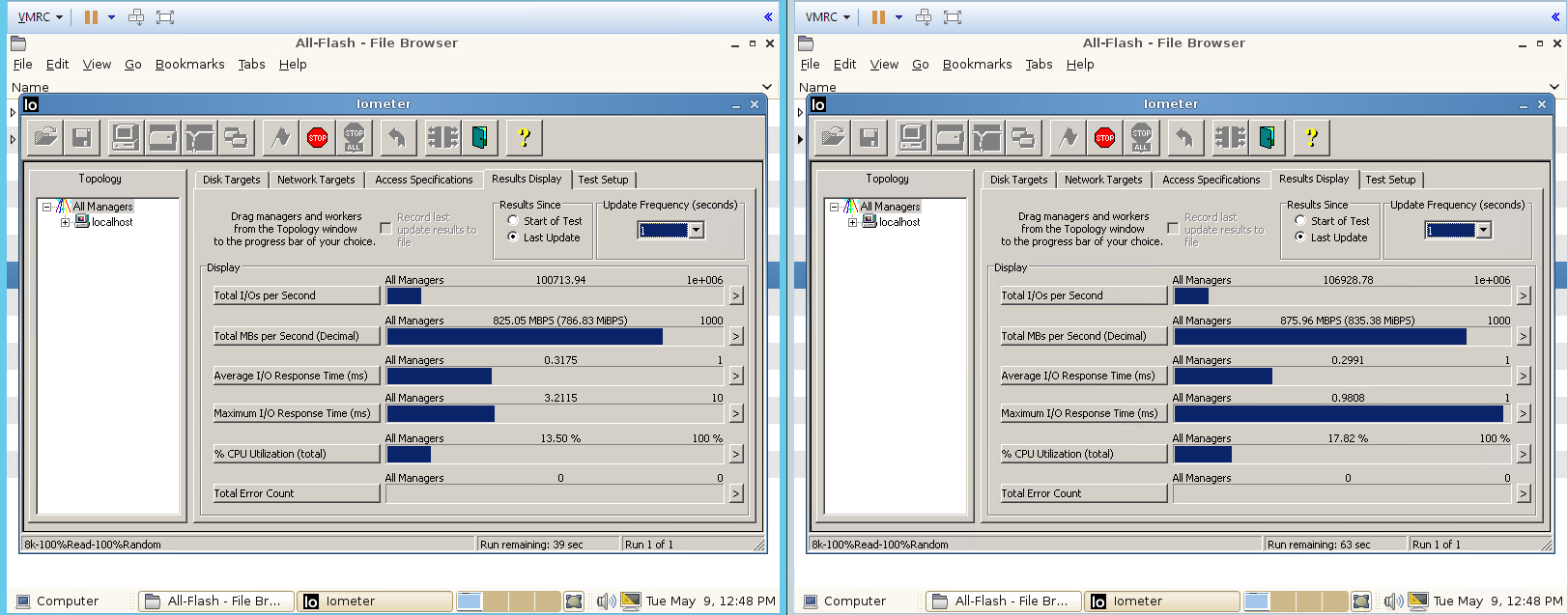

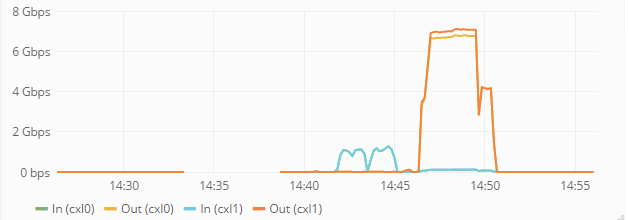

I'm now up well over 200k 8k RIOPS (hovered around 212k) with only about 40% CPU usage via software iSCSI initiators in VMware. Latency looks much better as well (a full 200us lower), as can be seen in the screenshot. I'm getting much better throughput loading on the links as well.

vfs.zfs.compressed_arc_enabled: 0 shows thus...Code:

last pid: 4735; load averages: 8.54, 3.62, 1.80 up 0+00:11:32 14:48:05

1523 processes:40 running, 1422 sleeping, 61 waiting

CPU: 0.0% user, 0.0% nice, 37.5% system, 3.3% interrupt, 59.2% idle

Mem: 381M Active, 188M Inact, 21G Wired, 72G Free

ARC: 19G Total, 935M MFU, 18G MRU, 64K Anon, 261M Header, 8452K Other

Swap:

PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND

5 root -16 - 0K 128K CPU22 22 1:01 64.59% ctl{work0}

0 root 8 - 0K 20080K CPU22 22 0:40 44.33% kernel{cblk0 taskq_0}

0 root 8 - 0K 20080K CPU9 9 0:40 44.31% kernel{cblk0 taskq_1}

0 root 8 - 0K 20080K - 4 0:40 44.08% kernel{cblk0 taskq_12}

0 root 8 - 0K 20080K CPU5 5 0:40 44.08% kernel{cblk0 taskq_3}

0 root 8 - 0K 20080K - 8 0:40 44.06% kernel{cblk0 taskq_13}

0 root 8 - 0K 20080K CPU19 19 0:40 44.06% kernel{cblk0 taskq_2}

0 root 8 - 0K 20080K CPU14 14 0:40 44.01% kernel{cblk0 taskq_10}

0 root 8 - 0K 20080K - 21 0:40 43.98% kernel{cblk0 taskq_4}

0 root 8 - 0K 20080K CPU16 16 0:40 43.96% kernel{cblk0 taskq_8}

0 root 8 - 0K 20080K - 4 0:40 43.94% kernel{cblk0 taskq_11}

0 root 8 - 0K 20080K CPU20 20 0:40 43.93% kernel{cblk0 taskq_7}

0 root 8 - 0K 20080K - 16 0:40 43.86% kernel{cblk0 taskq_9}

0 root 8 - 0K 20080K - 13 0:40 43.84% kernel{cblk0 taskq_6}

0 root 8 - 0K 20080K CPU12 12 0:40 43.60% kernel{cblk0 taskq_5}

12 root -92 - 0K 992K WAIT 8 0:38 33.30% intr{irq263: t5nex0:0a4}

0 root -16 - 0K 20080K CPU7 7 0:24 31.09% kernel{cfiscsitx}

0 root -16 - 0K 20080K CPU6 6 0:29 29.44% kernel{cfiscsitx}

0 root -16 - 0K 20080K CPU18 18 0:22 29.27% kernel{cfiscsitx}

0 root -16 - 0K 20080K icl_tx 6 0:27 26.63% kernel{cfiscsitx}

12 root -92 - 0K 992K WAIT 20 0:27 24.02% intr{irq273: t5nex0:1a4}

0 root -16 - 0K 20080K icl_rx 6 0:19 23.68% kernel{cfiscsirx}

6 root -8 - 0K 192K dbuf_e 15 0:19 23.31% zfskern{dbuf_evict_thread}

12 root -92 - 0K 992K CPU0 0 0:20 22.23% intr{irq274: t5nex0:1a5}

0 root -16 - 0K 20080K icl_rx 17 0:21 21.93% kernel{cfiscsirx}

0 root -16 - 0K 20080K CPU13 13 0:17 20.71% kernel{cfiscsirx}

0 root -16 - 0K 20080K icl_rx 11 0:19 17.37% kernel{cfiscsirx}

5 root -16 - 0K 128K - 8 0:12 15.75% ctl{work3}

5 root -16 - 0K 128K - 2 0:11 14.82% ctl{work4}

5 root -16 - 0K 128K CPU4 4 0:13 14.54% ctl{work5}

4682 root 20 0 29212K 11512K kqread 21 0:01 1.01% pmcstat

4417 root 20 0 32464K 10356K CPU10 10 0:01 0.26% top

7 root -16 - 0K 16K - 1 0:01 0.22% rand_harvestq

0 root -16 - 0K 20080K - 3 0:09 0.07% kernel{zio_write_intr_3}

6 root -8 - 0K 192K tx->tx 1 0:11 0.07% zfskern{txg_thread_enter}

0 root -16 - 0K 20080K - 21 0:09 0.07% kernel{zio_write_intr_1}

0 root -16 - 0K 20080K - 22 0:09 0.07% kernel{zio_write_intr_5}

0 root -16 - 0K 20080K - 16 0:09 0.06% kernel{zio_write_intr_2}

4683 root -16 - 0K 16K pmcloo 17 0:00 0.06% hwpmc: proc(4682)

0 root -16 - 0K 20080K - 12 0:09 0.06% kernel{zio_write_intr_4}

0 root -16 - 0K 20080K - 22 0:09 0.06% kernel{zio_write_intr_6}

0 root -16 - 0K 20080K - 12 0:09 0.06% kernel{zio_write_intr_7}

0 root -16 - 0K 20080K - 5 0:01 0.05% kernel{zio_null_issue}

0 root -16 - 0K 20080K - 21 0:09 0.05% kernel{zio_write_intr_0}

4 root -16 - 0K 96K - 8 0:01 0.04% cam{doneq3}

4 root -16 - 0K 96K - 22 0:01 0.04% cam{doneq2}

12 root -60 - 0K 992K WAIT 0 0:02 0.04% intr{swi4: clock (0)}

3338 ladvd 20 0 19624K 2820K kqread 17 0:00 0.04% ladvd

12 root -88 - 0K 992K WAIT 10 0:01 0.03% intr{irq279: mps1}

4 root -16 - 0K 96K - 10 0:01 0.03% cam{doneq1}

0 root -12 - 0K 20080K - 17 0:08 0.03% kernel{zio_write_issue_15}

4 root -16 - 0K 96K - 8 0:01 0.02% cam{doneq0}

0 root -12 - 0K 20080K - 13 0:08 0.02% kernel{zio_write_issue_10}

0 root -12 - 0K 20080K - 4 0:08 0.02% kernel{zio_write_issue_2}

0 root -12 - 0K 20080K - 19 0:08 0.02% kernel{zio_write_issue_16}

0 root -12 - 0K 20080K - 1 0:08 0.02% kernel{zio_write_issue_12}

0 root -12 - 0K 20080K - 20 0:08 0.02% kernel{zio_write_issue_6}

6 root -8 - 0K 192K spa->s 9 0:00 0.02% zfskern{trim flash}

12 root -88 - 0K 992K WAIT 12 0:01 0.02% intr{irq256: mps0}

0 root -12 - 0K 20080K - 19 0:08 0.02% kernel{zio_write_issue_13}

3337 root 20 0 21672K 2868K kqread 8 0:00 0.02% ladvd

PMC: [CPU_CLK_UNHALTED_CORE] Samples: 14806 (100.0%) , 0 unresolved

%SAMP IMAGE FUNCTION CALLERS

13.9 kernel lock_delay _sx_xlock_hard:4.9 __mtx_lock_sleep:4.3 __rw_wlock_hard:4.1 ...

8.0 kernel lz4_decompress zio_decompress

4.3 kernel cpu_search_highest cpu_search_highest:3.7 sched_idletd:0.6

3.2 kernel memcpy icl_soft_conn_pdu_append_data

2.4 kernel uma_zalloc_arg

2.1 kernel cpu_idle sched_idletd

1.7 kernel bcopy uiomove_faultflag

1.7 kernel ctl_work_thread fork_exit

1.6 kernel bzero

1.4 kernel ctl_check_ooa ctl_work_thread

1.3 kernel uma_zfree_arg

1.2 kernel tcp_output tcp_usr_send

1.1 kernel sched_switch mi_switch

1.0 kernel cpu_search_lowest cpu_search_lowest

1.0 kernel thread_lock_flags_

0.9 kernel dbuf_destroy dmu_read_uio_dnode

0.9 kernel dbuf_find dbuf_hold_impl

0.9 kernel dbuf_create dbuf_hold_impl

0.9 kernel _sx_xlock_hard

0.8 kernel cfiscsi_datamove ctl_datamove

0.8 kernel avl_remove dbuf_destroy

0.8 kernel cpu_switch mi_switch

0.7 kernel sched_idletd fork_exit

0.7 kernel ctl_be_block_worker taskqueue_run_locked

0.7 kernel avl_add dbuf_create

0.7 kernel dbuf_compare avl_add

0.7 kernel zrl_remove

0.7 kernel critical_exit

0.7 kernel __mtx_lock_sleep

0.7 kernel zrl_add_impl

0.7 kernel sleepq_lock

0.7 kernel sched_pickcpu sched_add

0.6 kernel atomic_add_64_nv

0.6 kernel dmu_zfetch dmu_buf_hold_array_by_dnode

0.6 kernel sched_add

0.6 kernel eth_tx drain_ring

0.5 kernel zio_wait_for_childre

0.5 kernel m_freem

I'm now up well over 200k 8k RIOPS (hovered around 212k) with only about 40% CPU usage via software iSCSI initiators in VMware. Latency looks much better as well (a full 200us lower), as can be seen in the screenshot. I'm getting much better throughput loading on the links as well.