FreeNAS 11.2 - FreeBSD 11.2-STABLE

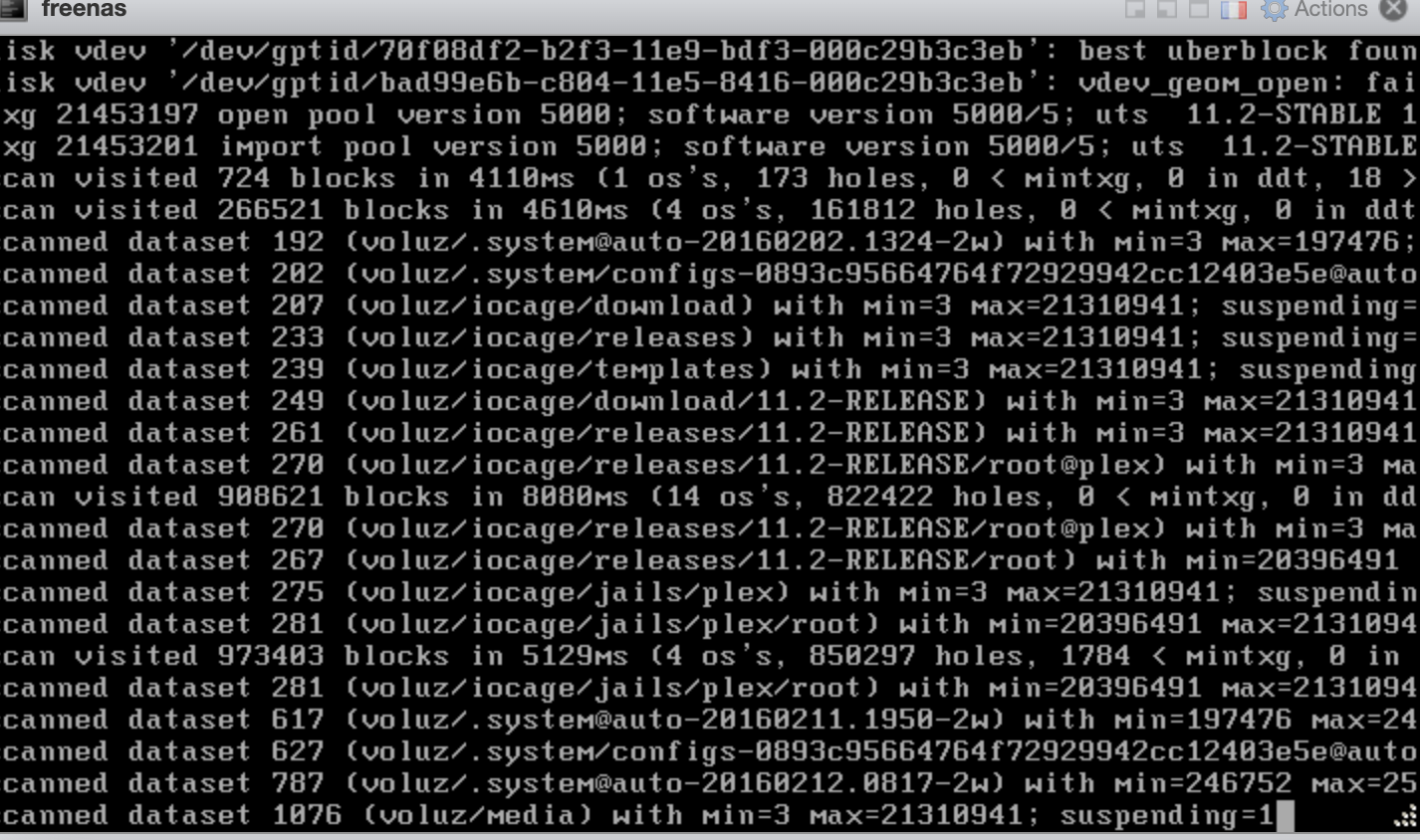

Over the last few weeks I had problems with some disks that I had to replace. The replacements went fine, except that now the controller (and the disks behind it) periodically becomes unavailable.

The LSI card is an M5016 flashed with the latest LSI firmware.

The problem seems to happen under heavy load (a resilver plus something else). I see it with the mrsas driver, and also with mfi (on different FreeNAS 11.x versions).

FreeNAS runs as a VM under ESXi 6.7 with PCI passthrough; this setup worked properly for years.

The drives are all HGST (6 TB; three of them are now 10 TB).

dmesg:

```
AVAGO MegaRAID SAS FreeBSD mrsas driver version: 06.712.04.00-fbsd
mrsas0: <AVAGO Thunderbolt SAS Controller> port 0x4000-0x40ff mem 0xfd4fc000-0xfd4fffff,0xfd480000-0xfd4bffff irq 18 at device 0.0 on pci3
mrsas0: Using MSI-X with 4 number of vectors
mrsas0: FW supports <16> MSIX vector,Online CPU 4 Current MSIX <4>
mrsas0: MSI-x interrupts setup success
...
mrsas0: Initiaiting OCR because of FW fault!
```

When everything is OK, I get:

```
root@freenas:~ # camcontrol devlist
<NECVMWar VMware IDE CDR10 1.00> at scbus1 target 0 lun 0 (cd0,pass0)
<VMware Virtual SATA Hard Drive 00000001> at scbus3 target 0 lun 0 (pass1,ada0)
<VMware Virtual SATA Hard Drive 00000001> at scbus4 target 0 lun 0 (pass2,ada1)
<IBM ServeRAID M5016 3.46> at scbus33 target 0 lun 0 (pass3,da0)
<IBM ServeRAID M5016 3.46> at scbus33 target 1 lun 0 (pass4,da1)
<IBM ServeRAID M5016 3.46> at scbus33 target 2 lun 0 (pass5,da2)
<IBM ServeRAID M5016 3.46> at scbus33 target 3 lun 0 (pass6,da3)
<IBM ServeRAID M5016 3.46> at scbus33 target 4 lun 0 (pass7,da4)
<IBM ServeRAID M5016 3.46> at scbus33 target 5 lun 0 (pass8,da5)
```

When the issue happens:

```
mrsas0: Initiaiting OCR because of FW fault!
mrsas0: Reset failed, killing adapter.
(da2:mrsas0:0:2:0): Invalidating pack
(da3:mrsas0:0:3:0): Invalidating pack
(da4:mrsas0:0:4:0): Invalidating pack
(da0:mrsas0:0:0:0): Invalidating pack
da2 at mrsas0 bus 0 scbus33 target 2 lun 0
da2: <IBM ServeRAID M5016 3.46> s/n 00b60db33f2a0400ff40f83f04b00506 detached
da3 at mrsas0 bus 0 scbus33 target 3 lun 0
da3: <IBM ServeRAID M5016 3.46> s/n 000e21c530a17800ff40f83f04b00506 detached
da4 at mrsas0 bus 0 scbus33 target 4 lun 0
da4: <IBM ServeRAID M5016 3.46> s/n 0002ef5b597a65892440f83f04b00506 detached
da0 at mrsas0 bus 0 scbus33 target 0 lun 0
da0: <IBM ServeRAID M5016 3.46> s/n 00c6f493401b41d32440f83f04b00506 detached
xptioctl: pass driver is not in the kernel
xptioctl: put "device pass" in your kernel config file
xptioctl: pass driver is not in the kernel
xptioctl: put "device pass" in your kernel config file
GEOM_MIRROR: Device swap0: provider da3p1 disconnected.
(da1:mrsas0:0:1:0): Invalidating pack
da1 at mrsas0 bus 0 scbus33 target 1 lun 0
da1: <IBM ServeRAID M5016 3.46> s/n 00095d1603310000ff40f83f04b00506 detached
GEOM_MIRROR: Device swap1: provider da2p1 disconnected.
GEOM_MIRROR: Device swap1: provider da4p1 disconnected.
GEOM_MIRROR: Device swap1: provider destroyed.
GEOM_MIRROR: Device swap1 destroyed.
xptioctl: pass driver is not in the kernel
xptioctl: put "device pass" in your kernel config file
GEOM_ELI: Device mirror/swap1.eli destroyed.
(da0:mrsas0:0:0:0): Periph destroyed
(da1:mrsas0:0:1:0): Periph destroyed
(da2:mrsas0:0:2:0): Periph destroyed
(da3:mrsas0:0:3:0): Periph destroyed
(da4:mrsas0:0:4:0): Periph destroyed
```

and camcontrol then reports only da5 left on scbus33:

```
root@freenas:~ # camcontrol devlist
<NECVMWar VMware IDE CDR10 1.00> at scbus1 target 0 lun 0 (cd0,pass0)
<VMware Virtual SATA Hard Drive 00000001> at scbus3 target 0 lun 0 (pass1,ada0)
<VMware Virtual SATA Hard Drive 00000001> at scbus4 target 0 lun 0 (pass2,ada1)
<IBM ServeRAID M5016 3.46> at scbus33 target 5 lun 0 (pass8,da5)
```

The pool still reports that it is resilvering:

```
errors: No known data errors

  pool: voluz
 state: DEGRADED
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Sun Aug 18 19:01:46 2019
        3.66G scanned at 3.66G/s, 0 issued at 0/s, 23.2T total
        0 resilvered, 0.00% done, no estimated completion time
config:

        NAME                                              STATE     READ WRITE CKSUM
        voluz                                             DEGRADED     0     0     0
          raidz2-0                                        DEGRADED     0     0     0
            replacing-0                                   DEGRADED     0     0     0
              18047640745310007856                        UNAVAIL      0     0     0  was /dev/gptid/bad99e6b-c804-11e5-8416-000c29b3c3eb
              gptid/70f08df2-b2f3-11e9-bdf3-000c29b3c3eb  ONLINE       0     0     0
            gptid/bb6ea102-c804-11e5-8416-000c29b3c3eb    ONLINE       0     0     0
            gptid/89a4c1fc-69bd-11e9-8b8e-000c29b3c3eb    ONLINE       0     0     0
            gptid/bc8a0c7d-c804-11e5-8416-000c29b3c3eb    ONLINE       0     0     0
            gptid/035b4da6-86ee-11e9-b16d-000c29b3c3eb    ONLINE       0     0     0

errors: Permanent errors have been detected in the following files:

        <0x312>:<0x1>
        voluz/vm-iscsi/osxboot:<0x1>
```

I also get an error message at boot (see attachment), and I am not sure whether it indicates a real problem. Look at the vdev_geom_open message: it seems truncated, but I don't know where the full message is supposed to be stored.

When the issue happens, commands such as the one below do not bring the drives back:

```
camcontrol reset all
```

and storcli /c0 show... reports that the controller is not found.

If I restart the VM, everything works fine again (until the problem comes back).

On another forum I read that the temperature of the LSI card could be the cause, but then it is surprising that everything works like a charm again as soon as I reboot.

To me this looks like a bug in the firmware or the mrsas driver when the controller reset (OCR) happens under heavy load. There seem to be some bug reports about it, but my dmesg output looks different.

I have a debug file available, but I am not sure how to upload it.

Do you have any recommendations?