I recently began doing snapshot replication to a secondary local pool and it broke my jails.

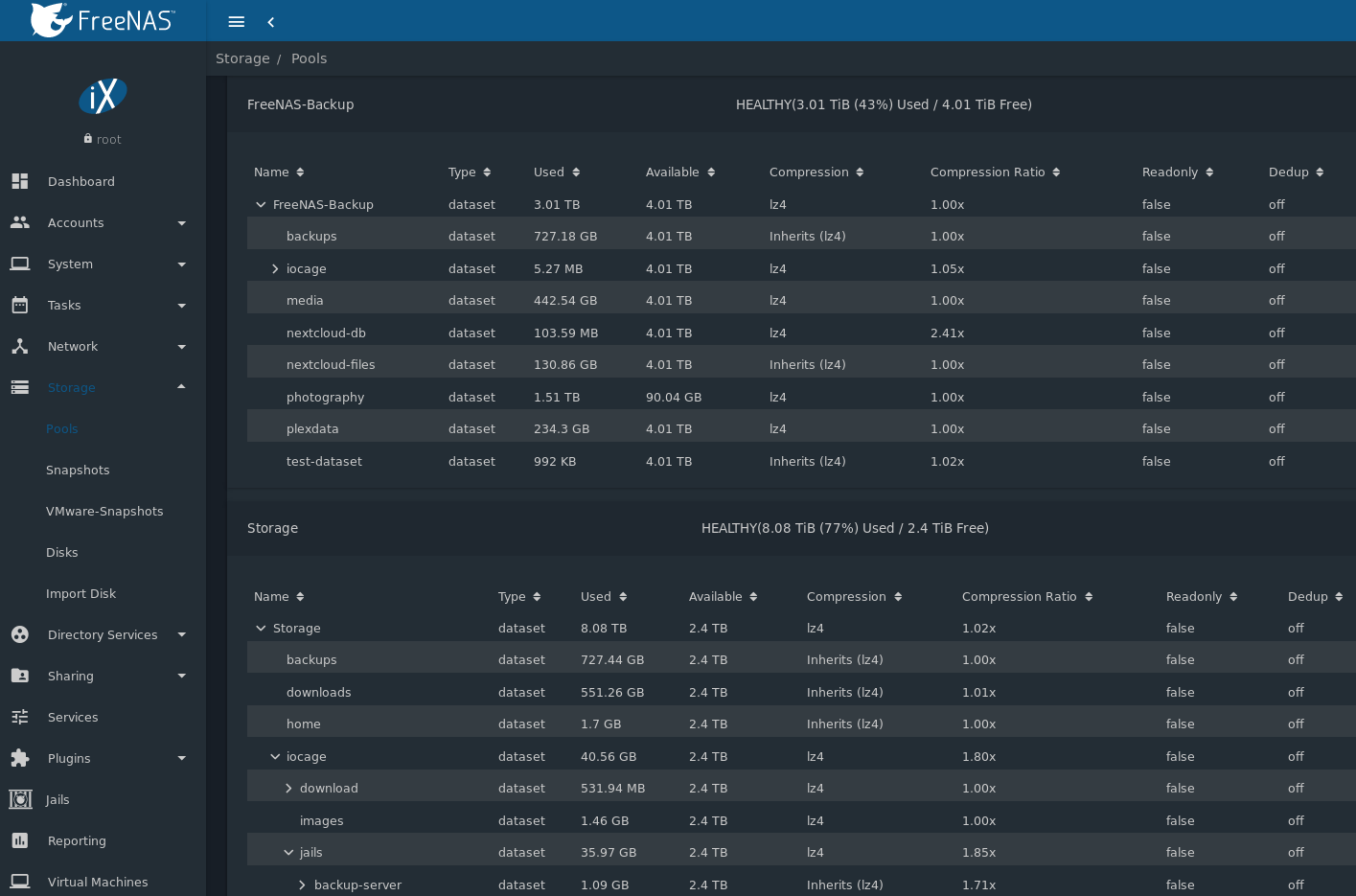

Code:

    [@freenas ~]$ ls /mnt
    FreeNAS-Backup  iocage  md_size  Storage
    [@freenas ~]$ ls /mnt/iocage/jails
    [@freenas ~]$ mount | grep Storage/iocage
    Storage/iocage on /mnt/iocage (zfs, local, nfsv4acls)
    Storage/iocage/download on /mnt/iocage/download (zfs, local, nfsv4acls)
    Storage/iocage/download/11.1-RELEASE on /mnt/iocage/download/11.1-RELEASE (zfs, local, nfsv4acls)
    Storage/iocage/download/11.2-RELEASE on /mnt/iocage/download/11.2-RELEASE (zfs, local, nfsv4acls)
    Storage/iocage/images on /mnt/iocage/images (zfs, local, nfsv4acls)
    Storage/iocage/jails on /mnt/iocage/jails (zfs, local, nfsv4acls)
    Storage/iocage/jails/backup-server on /mnt/iocage/jails/backup-server (zfs, local, nfsv4acls)
    Storage/iocage/jails/backup-server/root on /mnt/iocage/jails/backup-server/root (zfs, local, nfsv4acls)
    ............

This is correct and how it's supposed to look. But this morning, seemingly at random, the jail pool had switched by itself to my secondary pool, so all the lines above were showing FreeNAS-Backup/iocage, etc.
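To tell at a glance which pool is behind /mnt/iocage at any given moment, something like the following might help. It is only a sketch: the function name mounted_on is made up for illustration, and it assumes the `dataset on /path (options)` line format shown in the mount output above, with no spaces in the paths.

```shell
#!/bin/sh
# Hypothetical helper: print every dataset that `mount` reports on a
# given mount point. Reads the output of `mount` on stdin.
# Assumes lines look like "dataset on /path (options)" and that
# neither the dataset name nor the path contains spaces.
mounted_on() {
    awk -v target="$1" '$2 == "on" && $3 == target { print $1 }'
}

# Intended use on the live system:
#   mount | mounted_on /mnt/iocage
```

If this prints more than one dataset for the same path, the mounts are stacked on top of each other.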

I rebooted and it did nothing. I went to "Jails" in the GUI and activated FreeNAS-Backup as the jail pool, then activated Storage again. My jails reappeared, but they all say "Corrupt". Like this:

Code:

    iocage list
    unifi_controller_1 is missing it's configuration, please destroy this jail and recreate it.
    wiki is missing it's configuration, please destroy this jail and recreate it.
    backup-server is missing it's configuration, please destroy this jail and recreate it.
    nextcloud is missing it's configuration, please destroy this jail and recreate it.
    dns_1 is missing it's configuration, please destroy this jail and recreate it.
    pms is missing it's configuration, please destroy this jail and recreate it.
    +-----+--------------------+---------+---------+-----+
    | JID | NAME               | STATE   | RELEASE | IP4 |
    +=====+====================+=========+=========+=====+
    | -   | backup-server      | CORRUPT | N/A     | N/A |
    +-----+--------------------+---------+---------+-----+
    | -   | dns_1              | CORRUPT | N/A     | N/A |
    +-----+--------------------+---------+---------+-----+
    | -   | nextcloud          | CORRUPT | N/A     | N/A |
    +-----+--------------------+---------+---------+-----+
    | -   | pms                | CORRUPT | N/A     | N/A |
    +-----+--------------------+---------+---------+-----+
    | -   | unifi_controller_1 | CORRUPT | N/A     | N/A |
    +-----+--------------------+---------+---------+-----+
    | -   | wiki               | CORRUPT | N/A     | N/A |
    +-----+--------------------+---------+---------+-----+

If I use the GUI to browse to jails and look in the iocage dataset, I see the data there:

I have no idea how iocage is mounted at the low level or how to even see if my jails are still there. Not sure how this happened either. Any guidance in troubleshooting...?
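One way to check whether the jails are still there might be to look for each jail's configuration file directly. The sketch below rests on an assumption: that iocage keeps a config.json at the top of each jail's directory, which is what the "missing it's configuration" message seems to refer to. The function name check_jail_configs is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: for every subdirectory of the given jails
# directory, report whether a config.json file exists in it.
# Assumption: iocage stores each jail's configuration as
# <jails dir>/<jail name>/config.json.
check_jail_configs() {
    jails_dir=$1
    for jail in "$jails_dir"/*/; do
        # Skip the unexpanded pattern when the directory is empty.
        [ -d "$jail" ] || continue
        name=$(basename "$jail")
        if [ -f "${jail}config.json" ]; then
            echo "$name: config.json present"
        else
            echo "$name: config.json MISSING"
        fi
    done
}

# Intended use on the live system:
#   check_jail_configs /mnt/iocage/jails
```

An empty result would match the empty `ls /mnt/iocage/jails` output: nothing is visible at that path, even though the datasets themselves may still hold data.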

EDIT:

With zfs list I can even see the old data. Something is funky about the mounting process, and I have no idea what it is or how to troubleshoot it, since the iocage jails seem to be mysteriously mounted on /mnt/iocage instead of where the dataset shows inside of the GUI: Storage/iocage

Code:

    [steve@freenas /mnt/iocage]$ zfs list | grep jails
    FreeNAS-Backup/iocage/jails                    304K  3.43T    88K  /mnt/iocage/jails
    FreeNAS-Backup/iocage/jails/pms                216K  3.43T    96K  /mnt/iocage/jails/pms
    Storage/iocage/jails                          36.0G  2.40T   208K  /mnt/iocage/jails
    Storage/iocage/jails/backup-server            1.09G  2.40T   208K  /mnt/iocage/jails/backup-server
    Storage/iocage/jails/backup-server/root       1.09G  2.40T  2.42G  /mnt/iocage/jails/backup-server/root
    Storage/iocage/jails/dns_1                     204M  2.40T   184K  /mnt/iocage/jails/dns_1
    Storage/iocage/jails/dns_1/root                203M  2.40T  1.52G  /mnt/iocage/jails/dns_1/root
    Storage/iocage/jails/nextcloud                1.70G  2.40T   192K  /mnt/iocage/jails/nextcloud
    Storage/iocage/jails/nextcloud/root           1.70G  2.40T  2.92G  /mnt/iocage/jails/nextcloud/root
    Storage/iocage/jails/pms                      3.82G  2.40T   192K  /mnt/iocage/jails/pms
    Storage/iocage/jails/pms/root                 3.82G  2.40T  4.94G  /mnt/iocage/jails/pms/root
    Storage/iocage/jails/transmission_1           5.59G  2.40T   192K  /mnt/iocage/jails/transmission_1
    Storage/iocage/jails/unifi_controller_1       7.96G  2.40T   184K  /mnt/iocage/jails/unifi_controller_1
    Storage/iocage/jails/unifi_controller_1/root  7.96G  2.40T  7.48G  /mnt/iocage/jails/unifi_controller_1/root
    Storage/iocage/jails/wiki                     5.13G  2.40T   184K  /mnt/iocage/jails/wiki
    Storage/iocage/jails/wiki/root                5.13G  2.40T  4.70G  /mnt/iocage/jails/wiki/root
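One thing that stands out in this listing is that FreeNAS-Backup/iocage/jails and Storage/iocage/jails both report /mnt/iocage/jails as their mountpoint (and the same goes for the two pms datasets), which may be related to the mounting confusion. Below is a minimal sketch for flagging such collisions. It assumes the input is the tab-separated output of `zfs list -H -o name,mountpoint`; the function name find_duplicate_mountpoints is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: report mountpoints claimed by more than one
# ZFS dataset. Reads "dataset<TAB>mountpoint" lines on stdin.
# Datasets without a real path (none, legacy, -) are ignored.
find_duplicate_mountpoints() {
    awk -F '\t' '
        $2 != "none" && $2 != "legacy" && $2 != "-" {
            count[$2]++
            owners[$2] = owners[$2] " " $1
        }
        END {
            for (mp in count)
                if (count[mp] > 1)
                    print mp ":" owners[mp]
        }
    ' | sort
}

# Intended use on the live system:
#   zfs list -H -o name,mountpoint | find_duplicate_mountpoints
```

Each output line names one contested path followed by the datasets that claim it, so the replicated copy on the backup pool should show up next to the original.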
