Hey guys, it's been almost a year since my FreeNAS build and I'd like your feedback on this issue. I have a feeling the server is running out of steam: since I added the 10TB helium drive, read/write performance from clients over the CIFS Windows share has become sluggish, whereas in the past I could sustain 120 MB/s without fluctuations.

In addition, did I make a newbie mistake by creating TWO iSCSI targets pointing to a zpool with just one drive (10TB He10 SAS)? What I tried to do was create two targets: one for the security camera VMS server and the second for my Windows workstation. When I did that, the drive's performance tanked completely…

Below are my server specs and storage configuration:

Intel XEON E3 1265L V3 | Supermicro X10SAE | 16GB DDR3 ECC RAM | LSI SAS 9211-8i

ARC Size 12.2G

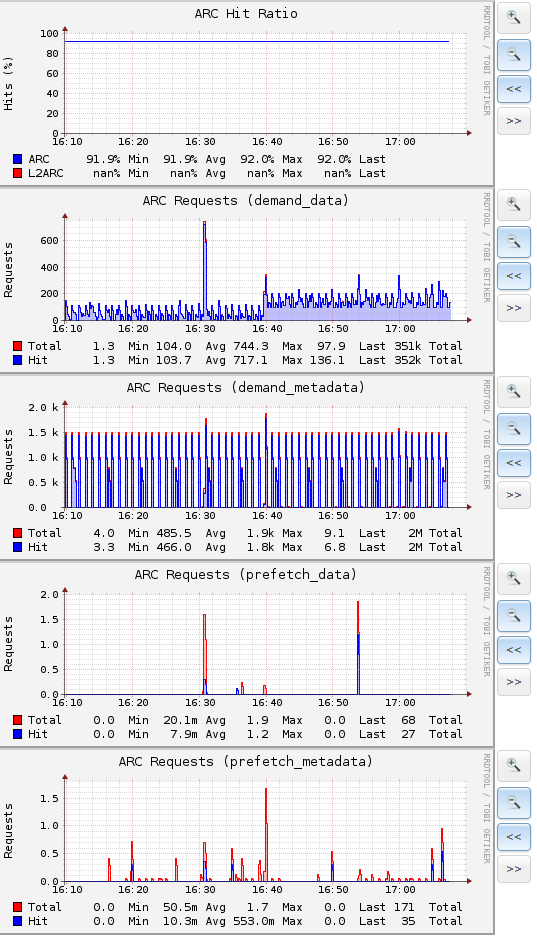

ARC Hit Ratio 91.8%

Below is the storage breakdown (TOTAL STORAGE – 29.7TB):

ZPOOL 1

6 X 3TB HGST 7K4000 Ultrastar RAIDZ2 (Connected to motherboard SATA Controller)

Total Used 5.2TB out of 11.1TB

Volume 1 – 2.5TB

Volume 2 – 1 TB

Volume 3 – 2TB

Volume 4 – 1TB

Volume 5 – 1 TB

ZPOOL 2

3 X 3TB HGST 7K4000 Ultrastar RAIDZ1 (Connected to LSI SAS 9211-8i)

Total Used 914GB out of 7.2TB

Volume 1 – 1.5 TB

Volume 2 – 2.5 TB

ZPOOL 3 (Single Drive)

1 X Seagate LP 2TB HDD

Total Used 86GB out of 1.7TB

Volume 1 – 1.7TB

ZPOOL 4 (Single Drive)

1 X HGST He10 10TB SAS Drive

Total Used 1.1TB out of 9.7TB

iSCSI Volume 1 – 2.5TB

Volume 2 – 4.5TB
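As a quick sanity check on the numbers above, here is a small Python sketch that takes the used/capacity figures exactly as listed, works out how full each pool is, and confirms that the four pools add up to the 29.7TB total. It is only arithmetic on the figures in this post. It assumes decimal units, so 914GB and 86GB are written as 0.914 and 0.086 TB, and it makes no attempt to convert between TB and TiB.

```python
# Pool figures copied from the breakdown above: (label, used_tb, capacity_tb).
# Assumption: decimal units as reported; 914GB -> 0.914 TB, 86GB -> 0.086 TB.
pools = [
    ("ZPOOL 1 (6 x 3TB RAIDZ2)", 5.2, 11.1),
    ("ZPOOL 2 (3 x 3TB RAIDZ1)", 0.914, 7.2),
    ("ZPOOL 3 (2TB single drive)", 0.086, 1.7),
    ("ZPOOL 4 (10TB He10 single drive)", 1.1, 9.7),
]


def summarize(pools):
    """Return (label, percent used) per pool and the summed reported capacity."""
    rows = [(label, round(100 * used / cap, 1)) for label, used, cap in pools]
    total = round(sum(cap for _, _, cap in pools), 1)
    return rows, total


rows, total = summarize(pools)
for label, pct in rows:
    print(f"{label}: {pct}% used")
print(f"Total capacity: {total} TB")
```

None of the pools is close to full by these figures, so raw fullness alone does not appear to explain the slowdown.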