The machine's specs are in my sig (FS01 in this case). I can't post debug output or run commands because the system is mid-import at bootup.

OK, here is the situation.

Last night I updated to the latest FreeNAS build after testing it successfully on two other servers. Everything came up great and I went to bed.

Today I did a large delete of probably 70TB of data. I then noticed FreeNAS was stuck trying to delete a snapshot that was weeks stale. I tried to kill the process but it wouldn't let me, nor would it let me release the snapshot.

I turned off replication and rebooted with the intention of trying to release/destroy that snapshot when it came back up.

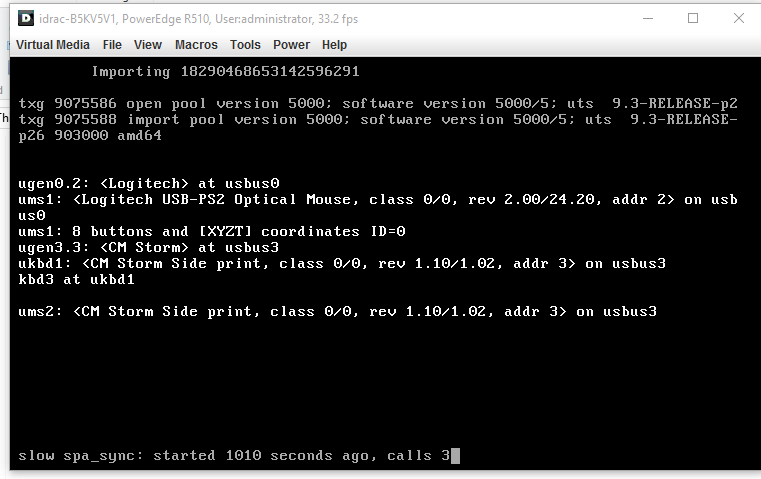

Instead, the OS kernel panicked on reboot. On the second reboot I got to "importing pool", but it's been a solid 45 minutes and nothing yet. I went down to the server and I can see the disks blinking, so I know it's doing something.

After some research, it sounds like this could be caused by that large delete. Is the zpool import doing some sort of remediation right now?

Thanks

Edit:

Here is a screenshot
