Ambassador Dave

Cadet

- Joined: Jul 10, 2018

- Messages: 6

Hi all,

So I've been running a single-disk FreeNAS 9.x server for years and decided to build out a new NAS and migrate the data. After a lot of reading, I decided - for better or worse - that I'd pull the drive from the 9.x system, plug it into the new 11.1 system, run Import Disk from the GUI to a specific dataset, and then move the folders I want to keep. The old drive is a 2TB drive using UFS.

Here's the new system build:

FreeNAS 11.1 RELEASE 64bit [11.1-U5]

MOBO: Supermicro X11SSH-LN4F

CPU: Intel Xeon E3-1240 V5

RAM: 2x 16GB Crucial Technology CT16G4WFD824A 288-Pin EUDIMM DDR4

SSD: 2x PNY CS900 120GB SSD (boot, mirrored)

HDD: 6x Toshiba N300 8TB NAS (RAIDZ2 setup)

CASE: Fractal Design Node 804 Black MINI-ITX

PSU: Seasonic G-650 80 Plus Gold

UPS: CyberPower CP 1500C

Burn-in tests went pretty well. An old SSD failed so I changed it out for the 2 new PNY drives. Badblocks & SMART tests are all clear.

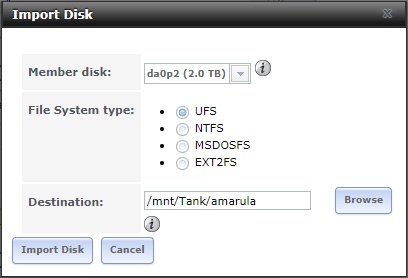

Last night, I started the disk import via the GUI, like this:

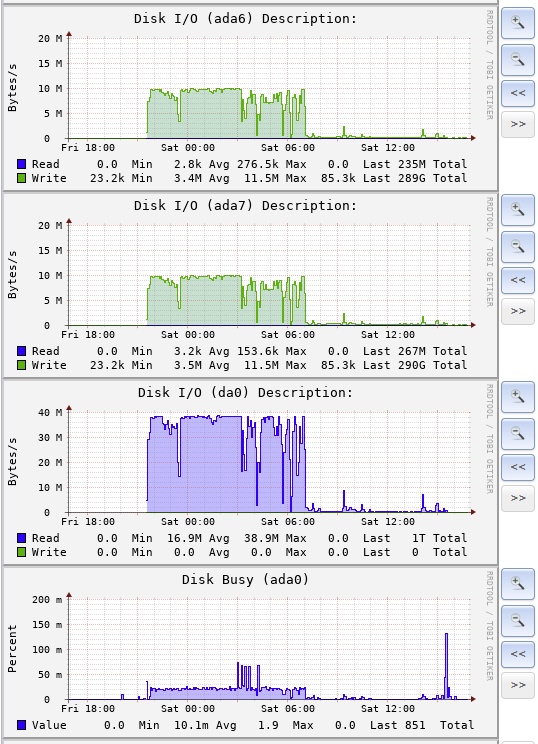

This morning I checked on it and the status was still updating, so everything seemed OK. Later I checked again and noticed that the current filename hadn't changed in a while. About 1 TB has copied over, with another ~0.75 TB to go. The disks weren't busy anymore, system load was above 1.0, the GUI was sluggish, and a python3.6 process was using 100% WCPU.

The console was flooded with alert messages, repeating every 80 seconds:

Code:

Aug 18 15:18:16 lillet /alert.py: [system.alert:393] Alert module '<samba4.Samba4Alert object at 0x814b4e470>' failed: timed out
Aug 18 15:18:27 lillet /alert.py: [system.alert:393] Alert module '<update_check.UpdateCheckAlert object at 0x81405c550>' failed: timed out
Aug 18 15:19:37 lillet /alert.py: [system.alert:393] Alert module '<samba4.Samba4Alert object at 0x814b4e470>' failed: timed out
Aug 18 15:19:47 lillet /alert.py: [system.alert:393] Alert module '<update_check.UpdateCheckAlert object at 0x81405c550>' failed: timed out
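For anyone who wants to check the repeat interval rather than eyeball it, here is a minimal sketch that measures the gap between consecutive occurrences of each alert module in the four lines above. The function name `repeat_intervals` is made up for illustration, and the parsing assumes the exact line layout shown in this post (timestamp as the third whitespace-separated field, no midnight rollover).

```python
from datetime import datetime

# Console lines copied verbatim from the post.
LOG = """\
Aug 18 15:18:16 lillet /alert.py: [system.alert:393] Alert module '<samba4.Samba4Alert object at 0x814b4e470>' failed: timed out
Aug 18 15:18:27 lillet /alert.py: [system.alert:393] Alert module '<update_check.UpdateCheckAlert object at 0x81405c550>' failed: timed out
Aug 18 15:19:37 lillet /alert.py: [system.alert:393] Alert module '<samba4.Samba4Alert object at 0x814b4e470>' failed: timed out
Aug 18 15:19:47 lillet /alert.py: [system.alert:393] Alert module '<update_check.UpdateCheckAlert object at 0x81405c550>' failed: timed out
"""


def repeat_intervals(log_text):
    """Map each alert module name to the seconds between its consecutive log entries."""
    last_seen = {}
    intervals = {}
    for line in log_text.splitlines():
        if "Alert module" not in line:
            continue
        # Third field is HH:MM:SS; the date is ignored (same-day entries assumed).
        stamp = datetime.strptime(line.split()[2], "%H:%M:%S")
        # The module name sits between "'<" and " object at".
        module = line.split("'<", 1)[1].split(" object at", 1)[0]
        if module in last_seen:
            delta = (stamp - last_seen[module]).total_seconds()
            intervals.setdefault(module, []).append(delta)
        last_seen[module] = stamp
    return intervals


print(repeat_intervals(LOG))
```

On these four lines the gaps come out at 81 and 80 seconds, which matches the "every 80 seconds" estimate.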

I made a poor decision and killed the python process that was using all the CPU. That appears to have killed the disk import as well: shortly afterwards, the two rsync processes I'd seen in top were also gone. I decided to reboot. After rebooting, I checked top again and I think the same python process and two rsync processes are back. However, the disk import doesn't appear to be continuing, or at least it isn't making any progress. Used disk space hasn't changed in over an hour:

Code:

[root@lillet ~]# zfs list Tank
NAME   USED  AVAIL  REFER  MOUNTPOINT
Tank  1.07T  27.0T   176K  /mnt/Tank
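The "hasn't changed" check above can be made more precise than the rounded 1.07T figure by sampling the exact byte count a few times. This is only a sketch under assumptions: the helper names (`used_bytes`, `watch`, `is_growing`) and the sampling interval and count are invented for illustration, and it relies on `zfs list -H -p -o used` printing a single raw byte value for the dataset.

```python
import subprocess
import time


def used_bytes(dataset):
    """Read the dataset's USED property in exact bytes via `zfs list -H -p -o used`."""
    out = subprocess.run(
        ["zfs", "list", "-H", "-p", "-o", "used", dataset],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return int(out.strip())


def watch(dataset, interval=60, count=5, read=used_bytes, sleep=time.sleep):
    """Collect `count` readings of used space, `interval` seconds apart."""
    samples = []
    for i in range(count):
        samples.append(read(dataset))
        if i < count - 1:
            sleep(interval)
    return samples


def is_growing(samples):
    """True if any reading is larger than the one before it."""
    return any(later > earlier for earlier, later in zip(samples, samples[1:]))
```

Run as root on the NAS, `is_growing(watch("Tank"))` returning False over several minutes would suggest the copy really is stalled rather than just slow. The `read` and `sleep` parameters exist only so the logic can be exercised without a ZFS pool.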

I tried setting up the disk import through the GUI again, based on what I read in another thread, but I'm getting a device busy error instead of the import status reappearing:

Code:

Import of Volume /dev/da0p2 Failed. Reason: [Errno 16] Device busy: '/var/run/importcopy/tmpdir/dev/da0p2'

I could use some help here. Any thoughts?

Thanks,

Dave