long time listener, first time caller.

looking to get some input on existing choices and remaining components.

use case: homelab/storage

Raid type: raidz2

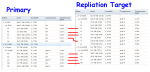

Newegg Shopping List

general idea is creating a raidz2 pool consisting of 12x3TB drives. if the calc is accurate, we're looking at ~24TB usable. thinking about splitting that as 12TB presented to vmware as iscsi (currently only 1 physical host, expanding to 2-3 in the future) and the other 10TB as probably NFS (or something more appropriate) for backup/storage/plex.
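to sanity check the calc, here's a rough sketch of the raidz2 math. it only accounts for parity and the TB vs TiB difference, not zfs metadata/padding overhead, and the 80% fill figure is just the commonly quoted rule of thumb (my assumption, not necessarily what the calculator used). function names are made up for illustration.

```python
def raidz_usable_tb(drives, drive_tb, parity=2):
    """Capacity left after parity, in decimal TB (ignores ZFS overhead)."""
    return (drives - parity) * drive_tb


def tb_to_tib(tb):
    """Convert decimal terabytes to binary tebibytes."""
    return tb * 1e12 / 2**40


after_parity_tb = raidz_usable_tb(12, 3)   # 10 data drives x 3TB
after_parity_tib = tb_to_tib(after_parity_tb)
at_80_percent_tb = 0.8 * after_parity_tb   # assumed rule-of-thumb fill limit

print(f"after parity: {after_parity_tb} TB ({after_parity_tib:.2f} TiB)")
print(f"at 80% fill:  {at_80_percent_tb:.1f} TB")
```

so 12x3TB in raidz2 is 30TB after parity, and staying under 80% full lands at about 24TB, which lines up with the number above.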

hardware notes:

- FreeNAS install on mirrored 32gb usb3.0 thumb drives

- current config has 32GB ECC. could bump to 64GB ECC if performance improvement would justify the cost.

- use LSI HBA for 8/12 drives. other 4/12 to be connected via motherboard.

- is the processor sufficient for the intended workload?

- PSU calculators recommend PSUs in the 650W range. does that sound right for 12x7200RPM drives + proc + ram? currently looking at 750W just in case.

- should i add a SLOG/L2ARC m.2/SSD? if so, what sizes/formats?

- do both L2ARC & SLOG require power loss protection capabilities? (documentation appears to indicate yes for SLOG, not sure about L2ARC)

- ...how bout this? and based on IO performance and capacity, can it be used for both SLOG & L2ARC, or is the golden rule to keep all roles separate?

- documentation appears to indicate that if you have sufficient ram, L2ARC will not significantly improve performance. but what is a sufficient ram-to-storage ratio for this kind of workload?

- anything blatantly missing?
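for the PSU question, this is roughly how i'd cross-check the calculators: size for the worst case where all drives spin up at once, then add headroom. every wattage below is a placeholder assumption, not a measured or datasheet value for the parts on the list, so swap in real numbers.

```python
def psu_budget_watts(drives, spinup_w_per_drive, cpu_w, other_w, headroom=0.2):
    """Peak draw with all drives spinning up together, plus a safety margin."""
    peak = drives * spinup_w_per_drive + cpu_w + other_w
    return peak * (1 + headroom)


# placeholder figures (assumptions): ~30W per drive at spin-up,
# ~80W for the cpu, ~50W for board/ram/hba/fans
estimate = psu_budget_watts(drives=12, spinup_w_per_drive=30, cpu_w=80, other_w=50)
print(f"suggested PSU size: {estimate:.0f} W")
```

if the HBA/board supports staggered spin-up, the peak drive term drops a lot, which is why the headroom is a parameter rather than baked in.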
