winnielinnie (MVP, joined Oct 22, 2019, 3,641 messages)

Apparently, an upcoming feature for ZFS is a complete re-imagining of how inline compression will be used.

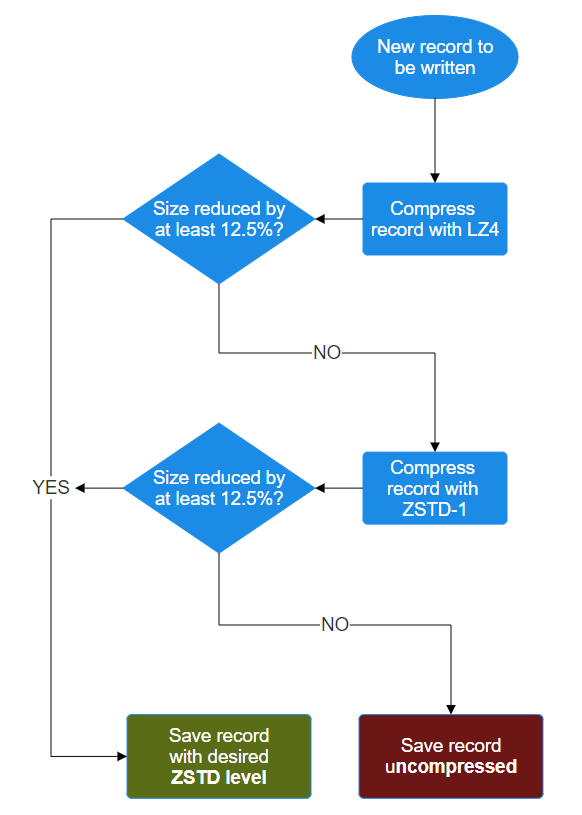

Here is an example to summarize the gist of it:

- You configure a dataset to use ZSTD-9 compression.
- Upon writing a new record, ZFS first attempts to compress the entire record with LZ4 (ultra fast).
  - If the LZ4 result is at least 12.5% smaller than the original, ZFS compresses and saves the record with your chosen level (i.e., ZSTD-9).
  - If it is not at least 12.5% smaller, ZFS then attempts to compress the entire record with ZSTD-1 (very fast).
    - If the ZSTD-1 result is at least 12.5% smaller, ZFS compresses and saves the record with your chosen level (i.e., ZSTD-9).
    - If it is not at least 12.5% smaller, ZFS discards compression and saves the record uncompressed.
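The decision chain above can be sketched in Python. This is a minimal illustration under assumptions, not the OpenZFS code: the standard library has no LZ4 or ZSTD, so zlib at different levels stands in for LZ4, ZSTD-1 and ZSTD-9 (real LZ4/ZSTD bindings could be passed in as the callables instead). The 12.5% rule is modeled as "the compressed size must be at most 7/8 of the original", and the names `early_abort_compress`, `saves_enough` and `zlib_at` are hypothetical.

```python
import os
import zlib
from typing import Callable, Sequence, Tuple

Compressor = Callable[[bytes], bytes]


def saves_enough(original: bytes, compressed: bytes) -> bool:
    # Modeled 12.5% rule: the compressed copy must be at most 7/8 of the original size.
    return len(compressed) <= len(original) - len(original) // 8


def early_abort_compress(record: bytes,
                         probes: Sequence[Compressor],
                         final: Compressor) -> Tuple[bool, bytes]:
    """Return (stored_compressed, payload), trying cheap probes before the slow compressor."""
    for probe in probes:
        # Cheap trial compression of the whole record; the result is only used as a hint.
        if saves_enough(record, probe(record)):
            compressed = final(record)  # the expensive pass, e.g. ZSTD-9
            if saves_enough(record, compressed):
                return True, compressed
            return False, record
    # Every probe failed: skip the expensive pass and store the record as-is.
    return False, record


def zlib_at(level: int) -> Compressor:
    # Stand-in compressor: zlib at a given level (NOT real LZ4 or ZSTD).
    return lambda data: zlib.compress(data, level)


PROBES = [zlib_at(1), zlib_at(2)]  # hypothetical stand-ins for LZ4, then ZSTD-1
FINAL = zlib_at(9)                 # hypothetical stand-in for the desired ZSTD-9

text_record = b"compressible log line\n" * 6000  # roughly 128 KiB of repetitive text
random_record = os.urandom(128 * 1024)           # 128 KiB of incompressible noise

print(early_abort_compress(text_record, PROBES, FINAL)[0])    # True
print(early_abort_compress(random_record, PROBES, FINAL)[0])  # False
```

For a 128 KiB record, the modeled threshold means the compressed copy must be no larger than 131072 - 16384 = 114688 bytes. Note that for the random record only the two cheap probes run; the expensive `FINAL` pass is never attempted.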

Essentially, the idea is:

"If the record shows compressibility with LZ4, then let's go ahead and compress it with the desired ZSTD level, since we know we'll get space savings and won't be wasting CPU for nothing. LZ4 is so ultra fast that it works as a heuristic when run on an entire record."

"If the record supposedly lacks compressibility based on our first attempt with LZ4, could it be a false negative? Let's try again with ZSTD-1. It's still very fast, and it might reveal that the entire record is compressible after all! If ZSTD-1 reveals this, then let's go ahead and compress it with the desired ZSTD level."

"If the record lacks compressibility with LZ4 and even with ZSTD-1? Forget it! It's not worth wasting more time and CPU trying to squeeze it with the desired compression level. Let's just write it as an uncompressed record."

Flowchart illustration of this process

"Desired ZSTD level" can be ZSTD-3, ZSTD-9, ZSTD-19, etc.

While all of this sounds great, I'm still unable to figure out if this is planned for ZFS 2.2. (I can't find any reference to it except for a thread with a Google employee who explains the logic behind this new feature.)

If this feature makes it into ZFS, it means you can set ZSTD compression levels of 3 and higher (e.g., ZSTD-3, -9, -19) without worrying about wasting CPU cycles trying to compress incompressible data with slower compression methods. The only "wasted" CPU will be spent testing the record with LZ4 (and ZSTD-1 to rule out false negatives), which shouldn't be a huge cost since both are very fast.
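To put rough numbers on the "only wasted CPU is the probes" point, here is a back-of-the-envelope cost model. It is a sketch under assumptions: the per-pass costs are hypothetical relative units, not benchmarks, and it assumes the chain works exactly as described above (every record gets the LZ4 probe, only LZ4 failures get the ZSTD-1 probe, and only records that pass a probe get the desired level). The function names are hypothetical.

```python
def expected_cost(p_lz4: float, p_zstd1: float,
                  c_lz4: float, c_zstd1: float, c_final: float) -> float:
    """Expected CPU cost per record with the LZ4 -> ZSTD-1 -> desired-level chain.

    p_lz4:   fraction of records that pass the LZ4 probe
    p_zstd1: fraction of LZ4-failed records that then pass the ZSTD-1 probe
    c_*:     hypothetical relative cost of one pass of each compressor
    """
    cost = c_lz4                             # every record gets the LZ4 probe
    cost += (1 - p_lz4) * c_zstd1            # only LZ4 failures get the ZSTD-1 probe
    p_final = p_lz4 + (1 - p_lz4) * p_zstd1  # records that reach the desired level
    cost += p_final * c_final
    return cost


def naive_cost(c_final: float) -> float:
    # Without the probes, every record is attempted at the desired level.
    return c_final


# Made-up relative costs: LZ4 = 1, ZSTD-1 = 2, desired level = 20.
print(expected_cost(0.0, 0.0, 1, 2, 20), naive_cost(20))  # 3.0 20
print(expected_cost(1.0, 0.0, 1, 2, 20), naive_cost(20))  # 21.0 20
```

With these made-up costs, a dataset full of incompressible data (e.g. already-compressed media) costs 3 units per record instead of 20, while fully compressible data pays a small premium (21 instead of 20) for the LZ4 probe that runs before the real compression.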

Thoughts on this? Did I interpret it incorrectly?

Will this have to be enabled as a "pool feature", or will it automatically "just work" without any additional configuration? (Important for TrueNAS users.)

Does anyone know if this is going to make it into ZFS 2.2? I'm having difficulty finding more information.
