I reviewed his debug. The short and skinny is that the system crashed due to zpool I/O. Either something stalled or something was corrupt. I'm not sure which and he didn't have a data zpool when the debug was sent so I couldn't review his zpool history.

-

Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

- Forums

- Archives

- FreeNAS (Legacy Software Releases)

- FreeNAS Help & support

- General Questions and Help

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

FreeNAS server rebooted on its own -- troubleshooting possible?

- Thread starter SnakeByte

- Start date

- Status

- Not open for further replies.

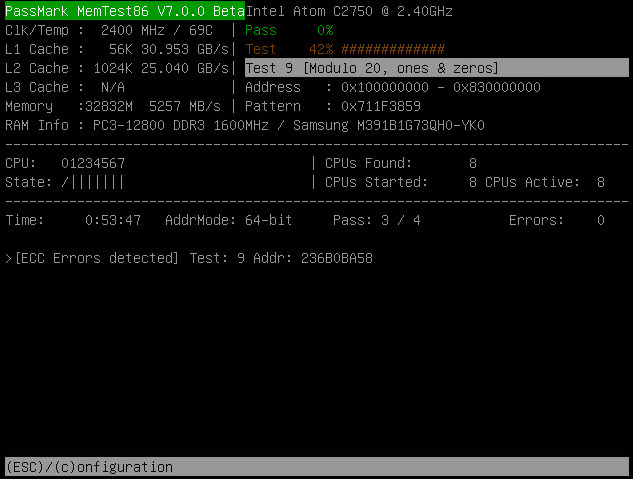

I had three passes go through without errors. There were some mentions of some ecc corrections happening though. I had stopped the test to try out the 7.0 beta of memtest since it had a number of improvements for the ECC tests, but IPMI locked up on me when I attempted to remotely mount the iso and I couldn't regain access until now. I'm running the 7.0 beta now. Note this is a free download and is the "pro" version if anyone wants to play: http://www.passmark.com/forum/showthread.php?6064-MemTest86-v7-0-Beta-1

Last edited:

So with 6.3.0 Pro, it had discovered some "ECC corrections" while running in Test 9. However, the 7.0 beta specifically has changes for the ecc tests with the Atom C2000 chipset series (what I'm using - C2750) as well as some other updates to ECC testing in general.

Since booting into 7.0, and only running test 9, 3 passes came up completely clean, but a fourth discovered that ECC correction thing again. While the ecc correction means that the corruption was fixable, it's still something that I will try to get replaced.

Since booting into 7.0, and only running test 9, 3 passes came up completely clean, but a fourth discovered that ECC correction thing again. While the ecc correction means that the corruption was fixable, it's still something that I will try to get replaced.

Last edited:

- Joined

- Feb 15, 2014

- Messages

- 20,194

Check the IPMI log. It'll at least tell you if those were real ECC errors or just memtest86 misinterpreting data.So with 6.3.0 Pro, it had discovered some "ECC corrections" while running in Test 9. However, the 7.0 beta specifically has changes for the ecc tests with the Atom C2000 chipset series (what I'm using - C2750) as well as some other updates to ECC testing in general.

Since booting into 7.0, and only running test 9, 3 passes came up completely clean, but a fourth discovered that ECC correction thing again. While the ecc correction means that the corruption was fixable, it's still something that I will try to get replaced.

View attachment 12620

Check the IPMI log. It'll at least tell you if those were real ECC errors or just memtest86 misinterpreting data.

Thanks for the hint Ericloewe. I keep underestimating what IPMI can do. What's interesting however is this:

Memtest did not detect any uncorrectable ECC's. Hmm. But I did reboot the system to pull another debug log dump, so it's possible that happened while FreeNAS was running.

Now here's the weird part. So far, to date, the only time memtest has detecting something it as been with Test 9. And then only at one specific location, and finally only "errors detected" -- never a failed event:

So I adjusted the settings to only do test 9, and then only within this range: 0x236b0b000 - 0x236b0c000

I went through 99 passes -- nothing found. :(

So I reset the ranges back to defaults and started testing again -- maybe the range was too small to apply the patterns correctly? Oddly, after 10 passes, nothing was found.

I've reset ALL tests back to default and am running it over night. I really hope I can get this to find something again because I just asked iXsystems to send me out a dimm and won't be able to tell which dimm to replace if I can't repeat the problem.

If a system kernel panics, that can result in false uncorrectable ECC errors. The reason is that the memory controller is left in an inconsistent state. I had no idea that this was possible and wrongly tried to RMA a system's RAM because of the issue.

Considering all of the information I have, your RAM appears to be just fine and replacing a DIMM isn't going to fix a thing. It could make things worse for you though because you're changing parameters that, for now, are static.

Considering all of the information I have, your RAM appears to be just fine and replacing a DIMM isn't going to fix a thing. It could make things worse for you though because you're changing parameters that, for now, are static.

- Joined

- Feb 15, 2014

- Messages

- 20,194

What is test 9??Now here's the weird part. So far, to date, the only time memtest has detecting something it as been with Test 9.

Row Hammer was turned down a bit because errors were surprisingly common.

If a system kernel panics, that can result in false uncorrectable ECC errors. The reason is that the memory controller is left in an inconsistent state. I had no idea that this was possible and wrongly tried to RMA a system's RAM because of the issue.

Considering all of the information I have, your RAM appears to be just fine and replacing a DIMM isn't going to fix a thing. It could make things worse for you though because you're changing parameters that, for now, are static.

Cyberjock,

The uncorrectable ECC errors that IPMI detected happened days after that random reboot event. There hasn't been another kernel panic since then (mainly because I haven't been running FreeNAS -- just the mem test). I only very quickly ran FreeNAS to pull another debug file for you and it didn't crash or otherwise show issues.

I just checked in on the system today. All tests ran 140 times across the entire memory range of addresses without a single error. This was while running the beta though. I'm going to go back to 6.3.0 pro and see if the errors re-appear.

Last edited:

What is test 9??

Row Hammer was turned down a bit because errors were surprisingly common.

According to their documentation (http://www.memtest86.com/technical.htm):

Test 9 [Modulo 20, Random pattern]

Using the Modulo-X algorithm should uncover errors that are not detected by moving inversions due to cache and buffering interference with the algorithm.

The Row Hammer test is Test 13. That always came up clean for me in both 6.3.0 and 7.0. I believe they even made it more aggressive in the 7.0 beta (http://www.passmark.com/forum/showthread.php?6064-MemTest86-v7-0-Beta-1):

- Row Hammer Test (Test 13) now uses double-sided hammering and random data patterns in an attempt to expose more RAM modules susceptible to disturbance errors. During our testing we observed double sided hammering was effective at high frequencies, but at lower frequencies single sided hammering was more effective. So feedback on this would be good.

I'm baaaack. The server rebooted itself again. According to the uptime, it happened 44 hours ago (Tuesday, July 19, 2016 at 4:06 ish pm). This time I checked the IMPI logs for hardware errors -- none found.

I had started the server after running memtest for 4 days without errors. This was back, I think Wed of last week. I then came down with a nasty cold and only now got back into work to check things.

The server should have been mostly idle except for scheduled events like scrubbing, etc. As far as I remember, nothing is scheduled to take place at that time -- scrubbing is set for the weekend.

The server is currently being used to back up our main file server's data -- I usually run robocopy to sync up the files nightly. The last robocopy was ran on the 16th and finished at 11:15pm. It should be a vanilla server -- no plugins, etc.

zpool status shows a clean bill of health:

This was in the end of the /var/log/messages file:

The IPMI stuff happens on occasion -- apparently it's a harmless quirk of the mini:

https://bugs.pcbsd.org/issues/5429

Since it doesn't seem to be hardware, where else can I look? Why is it rebooting and not halting?

I had started the server after running memtest for 4 days without errors. This was back, I think Wed of last week. I then came down with a nasty cold and only now got back into work to check things.

The server should have been mostly idle except for scheduled events like scrubbing, etc. As far as I remember, nothing is scheduled to take place at that time -- scrubbing is set for the weekend.

The server is currently being used to back up our main file server's data -- I usually run robocopy to sync up the files nightly. The last robocopy was ran on the 16th and finished at 11:15pm. It should be a vanilla server -- no plugins, etc.

zpool status shows a clean bill of health:

Code:

zpool status

pool: freenas-boot

state: ONLINE

scan: scrub repaired 0 in 0h0m with 0 errors on Sat Jun 25 03:45:37 2016

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

gptid/99348679-210c-11e5-9dc0-d050991b6355 ONLINE 0 0 0

errors: No known data errors

pool: trunk

state: ONLINE

scan: scrub repaired 0 in 16h35m with 0 errors on Sun Jul 17 16:35:39 2016

config:

NAME STATE READ WRITE CKSUM

trunk ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/50bb91eb-3484-11e5-80f0-d050991b6355.eli ONLINE 0 0 0

gptid/6c31537e-cc30-11e5-bae6-d05099792f2d.eli ONLINE 0 0 0

gptid/518fa2f3-3484-11e5-80f0-d050991b6355.eli ONLINE 0 0 0

gptid/cdbd3c90-e0ad-11e5-b9d4-d05099792f2d.eli ONLINE 0 0 0

logs

gptid/094a0596-d9b2-11e5-8d2b-d05099792f2d.eli ONLINE 0 0 0

cache

gptid/c7c544d8-d9b1-11e5-8d2b-d05099792f2d.eli ONLINE 0 0 0

errors: No known data errors

This was in the end of the /var/log/messages file:

Code:

Jul 19 16:00:04 freenas autosnap.py: [tools.autosnap:61] Popen()ing: /sbin/zfs snapshot -r "trunk@auto-20160719.1600-1w" Jul 19 16:03:39 freenas ipmi0: KCS: Failed to read address Jul 19 16:03:39 freenas ipmi0: KCS error: 5f Jul 19 16:03:46 freenas ipmi0: KCS: Failed to read address Jul 19 16:03:46 freenas ipmi0: KCS error: 5f Jul 19 16:03:51 freenas ipmi0: KCS: Failed to read address Jul 19 16:03:51 freenas ipmi0: KCS error: 5f Jul 19 16:03:51 freenas ipmi0: Failed to reset watchdog Jul 19 16:03:57 freenas ipmi0: KCS: Failed to read address Jul 19 16:03:57 freenas ipmi0: KCS error: 5f Jul 19 16:04:04 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:04 freenas ipmi0: KCS error: 5f Jul 19 16:04:09 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:09 freenas ipmi0: KCS error: 5f Jul 19 16:04:09 freenas ipmi0: Failed to set watchdog Jul 19 16:04:17 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:17 freenas ipmi0: KCS error: 5f Jul 19 16:04:23 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:23 freenas ipmi0: KCS error: 5f Jul 19 16:04:29 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:29 freenas ipmi0: KCS error: 5f Jul 19 16:04:29 freenas ipmi0: Failed to set watchdog Jul 19 16:04:35 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:35 freenas ipmi0: KCS error: 5f Jul 19 16:04:41 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:41 freenas ipmi0: KCS error: 5f Jul 19 16:04:47 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:47 freenas ipmi0: KCS error: 5f Jul 19 16:04:47 freenas ipmi0: Failed to set watchdog Jul 19 16:04:54 freenas ipmi0: KCS: Failed to read address Jul 19 16:04:54 freenas ipmi0: KCS error: 5f Jul 19 16:05:00 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:00 freenas ipmi0: KCS error: 5f Jul 19 16:05:06 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:06 freenas ipmi0: KCS error: 5f Jul 19 16:05:06 freenas ipmi0: Failed to set watchdog Jul 19 16:05:12 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:12 freenas ipmi0: KCS error: 5f Jul 19 16:05:18 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:18 freenas ipmi0: KCS error: 5f Jul 19 16:05:24 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:24 freenas ipmi0: KCS error: 5f Jul 19 16:05:24 freenas ipmi0: Failed to set watchdog Jul 19 16:05:31 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:31 freenas ipmi0: KCS error: 5f Jul 19 16:05:37 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:37 freenas ipmi0: KCS error: 5f Jul 19 16:05:43 freenas ipmi0: KCS: Failed to read address Jul 19 16:05:43 freenas ipmi0: KCS error: 5f Jul 19 16:05:43 freenas ipmi0: Failed to set watchdog Jul 21 00:00:00 freenas newsyslog[95493]: logfile turned over due to size>100K

The IPMI stuff happens on occasion -- apparently it's a harmless quirk of the mini:

https://bugs.pcbsd.org/issues/5429

Since it doesn't seem to be hardware, where else can I look? Why is it rebooting and not halting?

Last edited:

More info -- finally contacted iXSystems after I couldn't repeat the memory test failures since they had shipped me a dimm to replace with. When they asked for the motherboard BIOS / BMC version info, I discovered that the BMC was currently in a failed state -- at least that's what the BIOS was reporting. After updating the BIOS, there was some hope that the BMC would get refreshed, but it hadn't after reboot.

I let FreeNAS boot itself up, and right before I normally would get the console menu, the system rebooted itself. I captured this in video (see attached). If I let it fully boot up again, it would reboot again, -- at the same spot.

What I'm curious about is what triggered the reboot -- was the OS somehow sensing a "ctrl-alt-del" type signal? The OS gracefully rebooted -- it wasn't a sudden thing. At around the 1:43 mark, you can see the ipmi errors I'd very occasionally get previous to any reboot issues -- perhaps related? The reboot trigger doesn't hit until around the 3:00 mark.

After powering down the server, removing the power cord, waiting, and then powering everything back on again, BIOS is correctly detecting the BMC now, and the system was able to boot up without issue. Now I'm wondering if I should re-run the memory tests -- did the previous tests pass because the BMC is what normally reports memory errors, but because it was faulty, it wasn't? So strange.

I let FreeNAS boot itself up, and right before I normally would get the console menu, the system rebooted itself. I captured this in video (see attached). If I let it fully boot up again, it would reboot again, -- at the same spot.

What I'm curious about is what triggered the reboot -- was the OS somehow sensing a "ctrl-alt-del" type signal? The OS gracefully rebooted -- it wasn't a sudden thing. At around the 1:43 mark, you can see the ipmi errors I'd very occasionally get previous to any reboot issues -- perhaps related? The reboot trigger doesn't hit until around the 3:00 mark.

After powering down the server, removing the power cord, waiting, and then powering everything back on again, BIOS is correctly detecting the BMC now, and the system was able to boot up without issue. Now I'm wondering if I should re-run the memory tests -- did the previous tests pass because the BMC is what normally reports memory errors, but because it was faulty, it wasn't? So strange.

Attachments

@SnakeByte

Can you please PM me with your iXsystems ticket ID and a phone number to contact you at? I have quite a bit to say and I don't want to write a book this morning.

Thanks.

Can you please PM me with your iXsystems ticket ID and a phone number to contact you at? I have quite a bit to say and I don't want to write a book this morning.

Thanks.

- Status

- Not open for further replies.

Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum will now become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums.Related topics on forums.truenas.com for thread: "FreeNAS server rebooted on its own -- troubleshooting possible?"

Similar threads

- Replies

- 3

- Views

- 738

- Replies

- 2

- Views

- 1K

- Replies

- 1

- Views

- 972

- Replies

- 31

- Views

- 7K