Hi All,

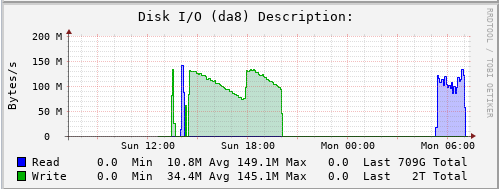

With compression off, I am filling a pool to 77% to see how fast a scrub runs with no fragmentation. I expected each disk to start at its fast end and get slower until the dd runs finished. It takes 6 or so hours to fill and 2 hours for the scrub. The pool is 12 drives: 3.0 TB SAS, 7200 rpm, 3.5-inch. What I saw instead was a sawtooth, and I am not sure why.

This is how I filled it:

for i in $(awk 'BEGIN {for(i=0;i<1300;i++) print i}'); do dd if=/dev/zero of=ddfile$i.dat bs=2048k count=5100; done
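As a sanity check on the loop above, here is a rough sketch of the arithmetic for how much data it should write. The variable names are made up for illustration, and it assumes dd treats `bs=2048k` as 2048 KiB per block. The result can be compared against the ALLOC column reported for the pool.

```shell
#!/bin/sh
# Expected fill size from the dd loop: 1300 files, each 5100 blocks of 2048 KiB.
# Assumption: the "k" suffix in bs=2048k means KiB (1024 bytes).
files=1300
count=5100
bs_kib=2048

per_file_mib=$((count * bs_kib / 1024))
total_kib=$((files * count * bs_kib))
total_mib=$((total_kib / 1024))
# Integer GiB (rounded down) is close enough for a sanity check.
total_gib=$((total_mib / 1024))

echo "per-file MiB: $per_file_mib"
echo "total MiB:    $total_mib"
echo "total GiB:    $total_gib"
```

That works out to roughly 12.6 TiB, which lines up with the 12.6T ALLOC shown by the pool listing, so the fill itself appears to have written what was intended.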

This is the volume in question:

Filesystem Size Used Avail Capacity Mounted on

detvolaa 3.2T 92K 3.2T 0% /mnt/detvolaa

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

detvolaa 16.3T 12.6T 3.66T - - 0% 77% 1.00x ONLINE /mnt

zpool status -v detvolaa

pool: detvolaa

state: ONLINE

scan: resilvered 14.7G in 0 days 00:02:31 with 0 errors on Mon Dec 17 07:04:04 2018

config:

NAME STATE READ WRITE CKSUM

detvolaa ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gptid/6c34431d-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

gptid/6d6b2014-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gptid/95d6aa2d-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

gptid/97c50e2b-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gptid/b0e198cd-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

gptid/b60c38f7-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

mirror-3 ONLINE 0 0 0

gptid/d051b1c1-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

gptid/d1dcaec9-00c1-11e9-8345-246e968a4118 ONLINE 0 0 0

mirror-4 ONLINE 0 0 0

gptid/29df61ea-00c2-11e9-8345-246e968a4118 ONLINE 0 0 0

gptid/2b8baab9-00c2-11e9-8345-246e968a4118 ONLINE 0 0 0

mirror-5 ONLINE 0 0 0

gptid/3c57e2f7-00c2-11e9-8345-246e968a4118 ONLINE 0 0 0

gptid/3e0c9d1f-00c2-11e9-8345-246e968a4118 ONLINE 0 0 0

errors: No known data errors

root@freenas[/mnt/detvolaa/noComp]#
