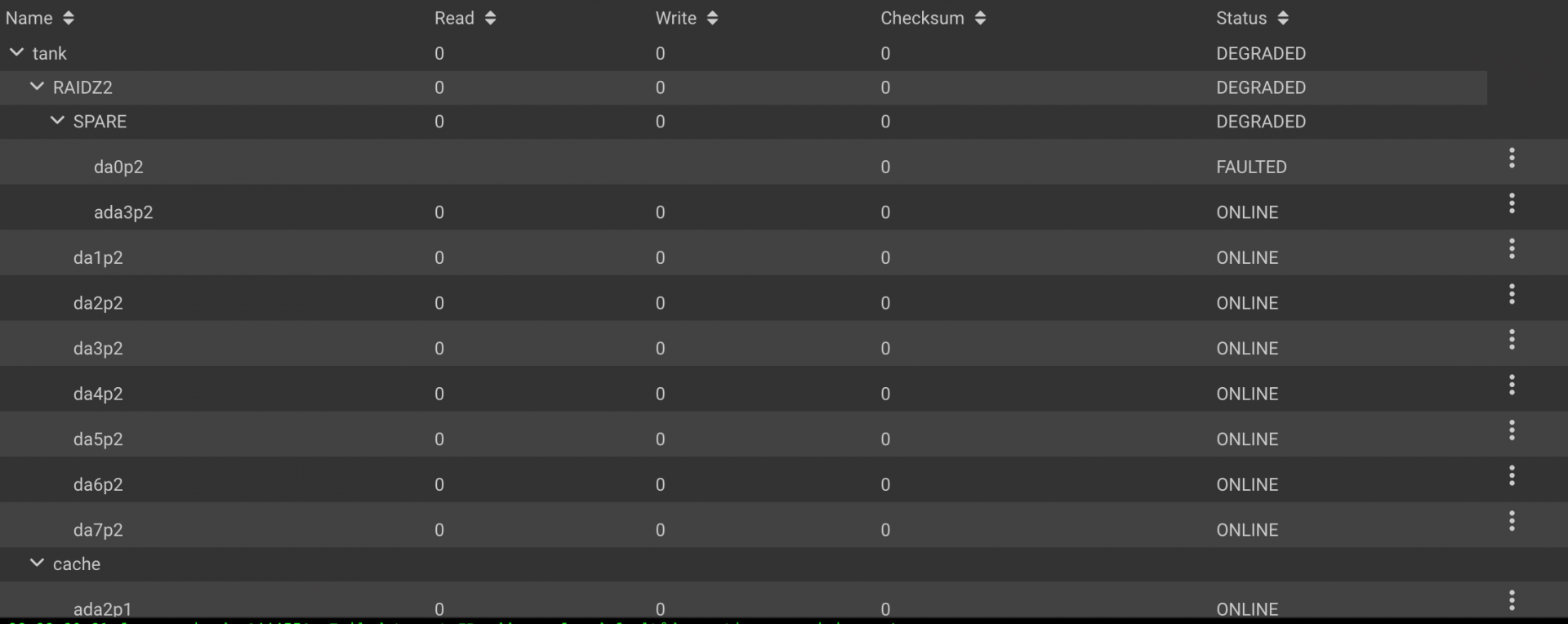

Wow, something cool happened to me today. A drive failed in my RAID-Z2 zpool, and the hot spare seems to have been activated:

Code:

freenas% zpool status
  pool: freenas-boot
 state: ONLINE
  scan: scrub repaired 0 in 0 days 00:02:54 with 0 errors on Tue Feb 25 03:47:54 2020
config:

        NAME          STATE     READ WRITE CKSUM
        freenas-boot  ONLINE       0     0     0
          mirror-0    ONLINE       0     0     0
            ada0p2    ONLINE       0     0     0
            ada1p2    ONLINE       0     0     0

errors: No known data errors

  pool: tank
 state: DEGRADED
status: One or more devices are faulted in response to persistent errors.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Replace the faulted device, or use 'zpool clear' to mark the device
        repaired.
  scan: resilvered 981G in 0 days 05:43:10 with 0 errors on Mon Mar 2 10:12:25 2020
config:

        NAME                                              STATE     READ WRITE CKSUM
        tank                                              DEGRADED     0     0     0
          raidz2-0                                        DEGRADED     0     0     0
            spare-0                                       DEGRADED     0     0     0
              gptid/2e48e04a-d2f0-11e6-8e60-0cc47a84a594  FAULTED      6     5     0  too many errors
              gptid/a203d5ad-49e3-11ea-9739-0cc47a84a594  ONLINE       0     0     0
            gptid/2eff3431-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
            gptid/2fad6079-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
            gptid/305f9785-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
            gptid/310fd248-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
            gptid/31c62952-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
            gptid/32845d1c-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
            gptid/3338ea10-d2f0-11e6-8e60-0cc47a84a594    ONLINE       0     0     0
        cache
          gptid/3385f967-d2f0-11e6-8e60-0cc47a84a594      ONLINE       0     0     0
        spares
          3314618157351433518                             INUSE     was /dev/gptid/a203d5ad-49e3-11ea-9739-0cc47a84a594

errors: No known data errors

Hmm, so that's now two WD Red drives down in the last month. WTF??!!

Anyway, the main zpool is physically eight 5 TB drives. How do I physically identify the faulted drive so I can replace it?

So it looks like the faulted device is the da0p2 disk, which would correspond to:

Code:

# geom disk list
...
...
Geom name: da0
Providers:
1. Name: da0
   Mediasize: 6001175126016 (5.5T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r2w2e5
   descr: ATA WDC WD60EFRX-68L
   lunid: 50014ee2b894b316
   ident: WD-WX11D86KC68Y
   rotationrate: 5700
   fwsectors: 63
   fwheads: 255
...
...

So I'm guessing I need to find whichever drive has serial number WD-WX11D86KC68Y?
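For anyone following the same trail, here is a rough sketch of the lookup chain from the faulted gptid to a serial number. It assumes `glabel status` prints the usual Name / Status / Components columns and that `geom disk list` prints an `ident:` line as in the output above. The helper names `gptid_to_dev` and `disk_serial` are made up for illustration and only parse text fed to them on stdin.

```shell
#!/bin/sh
# Sketch only: hypothetical helpers that parse command output from stdin.

# Print the device node (e.g. da0p2) for a given label,
# reading `glabel status` output (columns: Name, Status, Components).
gptid_to_dev() {
    awk -v label="$1" '$1 == label { print $3 }'
}

# Print the serial number from the "ident:" line of `geom disk list` output.
disk_serial() {
    awk '$1 == "ident:" { print $2 }'
}

# Assumed usage on the FreeNAS box itself (not run here):
#   part=$(glabel status | gptid_to_dev gptid/2e48e04a-d2f0-11e6-8e60-0cc47a84a594)
#   disk=${part%p[0-9]*}     # strip the partition suffix: da0p2 -> da0
#   geom disk list "$disk" | disk_serial
```

The serial it prints should match the label on the physical drive, so pulling the drive with that sticker would be the idea. If smartmontools is installed, `smartctl -i /dev/da0` should report the same serial as a cross-check.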
