danwestness

ENVIRONMENT: TrueNAS-SCALE-22.02.2.1

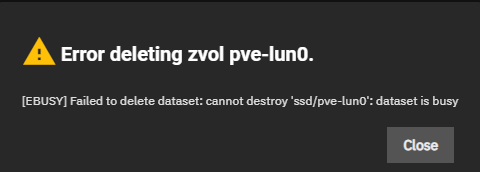

I am attempting to delete a ZVOL and keep receiving the error that the dataset is busy.

I have attempted a number of things to resolve this, unsuccessfully.

I have restarted TrueNAS multiple times.

I have confirmed that no NFS / iSCSI / SMB shares or targets reference this ZVOL.

I disabled all sharing services (NFS / iSCSI / SMB), turned off auto-start for each of them, and rebooted to make sure none of them were running at all.

There are no snapshots of the ZVOL.

I removed all replication jobs that previously referenced this ZVOL.

I have tried deleting it from the web UI as well as from an SSH shell using the command variants below. All of them return the same 'dataset is busy' error:

    zfs destroy ssd/pve-lun0
    zfs destroy -f ssd/pve-lun0
    zfs destroy -fr ssd/pve-lun0
    zfs destroy -fR ssd/pve-lun0

I also tried running the same commands as a PRE-INIT command, so that the delete happens early in system startup, with no luck there either.

I have inspected running processes, lsof output, etc., and cannot find anything using this ZVOL that would cause it to be busy.
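For anyone repeating this kind of check: lsof only reports userspace processes, so it would not show another kernel block device (for example a device-mapper or LVM volume) stacked on top of the ZVOL. Below is a minimal sketch of a sysfs-based check, assuming a Linux system such as SCALE where the ZVOL is exposed as `/dev/zvol/ssd/pve-lun0` and resolves to a `zdN` device. The helper name `list_holders` is hypothetical, and this is not necessarily one of the checks already performed above.

```shell
#!/bin/sh
# Hypothetical helper: print the kernel-level holders of a block device.
#   $1 = device path, e.g. /dev/zvol/ssd/pve-lun0 (a symlink to /dev/zdN)
#   $2 = sysfs block directory (assumed default: /sys/block)
list_holders() {
    # Resolve the /dev/zvol/... symlink to the underlying zdN device node.
    lh_dev=$(readlink -f "$1") || return 1
    lh_name=$(basename "$lh_dev")
    lh_sysfs=${2:-/sys/block}

    # holders/ has one entry per device stacked on this one (e.g. dm-0).
    # Empty output means no kernel-level holder was found here.
    if [ -d "$lh_sysfs/$lh_name/holders" ]; then
        ls "$lh_sysfs/$lh_name/holders"
    fi
}
```

It could be called as `list_holders /dev/zvol/ssd/pve-lun0`. Note that this only looks at the whole device; if the ZVOL carries a partition table, a holder may instead be attached to one of its partitions, which this sketch does not inspect.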

This is all I see in the logs:

    [2022/07/17 21:39:39] (ERROR) ZFSDatasetService.do_delete():988 - Failed to delete dataset
    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs.py", line 981, in do_delete
        subprocess.run(
      File "/usr/lib/python3.9/subprocess.py", line 528, in run
        raise CalledProcessError(retcode, process.args,
    subprocess.CalledProcessError: Command '['zfs', 'destroy', '-r', 'ssd/pve-lun0']' returned non-zero exit status 1.

I am at a complete loss as to how to delete this ZVOL and reclaim the space it is occupying. Please help!