I have run a custom bare-metal Linux server for 20 years. The first 5 of those were custom "Linux From Scratch" builds. Then the urge to be that hands-on dwindled, so I moved to Gentoo, and now just Ubuntu.

I am also an enterprise software developer by day, and I like to avoid evening work caused by home network failures, so I have kept things simple: Samba and NFS exports for:

/homes

/storage

Each user (me mostly) gets a home folder and the bulk storage location.

Empowered by dockerizing and k8s'ing application stacks at work, I started moving all my services into Docker: DHCP, DNS, Zigbee, MQTT, etc. This served me well when I had a live "/" rootfs incident. I honestly can't remember whether it was a mistake, a power bounce or a hardware fault, but I lost the root FS. Even so, I installed Ubuntu, checked all the docker-compose metadata out of GitLab and the databases out of backups, and had a brand new server reconfigured and running in about an hour.

All of this has been running on an old doorstop of a dust collector:

Dell Optiplex 7010, i7-4790, 16GB RAM, with 2 internal SSDs and a 5-bay USB3-SATA enclosure holding the bulk HDD and backup drives.

A few things have spoilt the simplicity. The bulk storage overflowed a 6TB drive last year and I wasn't prepared to go to an 8/10/12TB drive, so I bought another 6TB. This creates annoying "split filesystem" issues.

Then I had a rather more painful partial loss of the /home partition: an SSD died in service and went read-only, but not cleanly enough to prevent a bunch of corruption and unreadable sectors.

There are now increased demands on the server. In a bid to save power I bought a new "daily driver", a MinisForum UM560XT, which sips about 15W at the desktop instead of the 100-110W drawn by my 5800X/3080 gaming rig. That means I either install all my development environments and software on both the main rig and the "austerity rig" ... or ... I use a dev VM. I chose the latter, so the server now has to run Windows 10 Pro and Ubuntu Linux VMs part time. The i7-4790 doesn't do too bad a job, but you can tell it's a 2010s CPU, and 16GB isn't really enough to run both VMs at the same time without completely killing the server's cache memory.

The VMs also make these environments instantly accessible from any client, even remotely over VPN, or just from the bedroom TV when you get "that idea" just as you're trying to go to sleep.

---- The future 2023 -

A credit-card impulse-buying accident occurred. Maybe it was retail therapy; y'all know how it goes. Don't laugh: this server has one main heavy user, 7 or 8 PC/laptop/mobile clients (more than 2 at once is extremely rare) and a few dozen IoT devices. It doesn't really need to be that powerful. Remember, I've been making do with a 10-year-old Optiplex with a 4th-gen i7!

Asus B550 Prime-Plus, Ryzen 5600G, 32GB 3600MHz DDR4

2 x 1TB Gen3 NVMe

First up, this is standalone, so I can play with it in the form above without any impact on my "production" server. I have already test-installed bare-metal Ubuntu Server on RAID1+LVM.

Fine, but it's just so "twenty-teens" as an architecture; it could maybe use a layer or two of virtualisation. So I tried Proxmox. Last night I got it working with an Ubuntu Server docker host, up to the point where all the services started and got kicked off the MQTT server as duplicate connections. I'd call that a successful deployment. I also set up a rather nice Windows 10 Pro environment with STM32Cube running.

----

What brings me to TrueNAS?

A safe assumption is that the 1TB RAID1 (or ZFS mirror) NVMe pair will hold all the VMs and "functional" system drives comfortably, with LVM or pool space to spare for the foreseeable future.

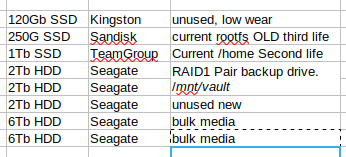

The remaining drives look like this:

My thoughts for a new layout (assuming I can shuffle the existing data around so that it doesn't constrain the new layout):

The RAID1 'vault' pair remains as an "offlined" backup for selected folders of important stuff, including some folders from the "bulk media" category such as family photos/videos. There is no fancy crap on it or involved in accessing it: it's just an mdadm pair that should be fully accessible from any Linux boot disk. It gets mounted and rsync'd (possibly with incrementals), then unmounted and powered down.
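A minimal sketch of that mount, rsync, unmount, power-down cycle, written as a Python helper that only builds the command lines (a dry run), so the sequence can be reviewed before anything touches the disks. Everything here is an assumption for illustration: the array name `/dev/md/vault`, whole-disk members such as `/dev/sdb`, the mount point `/mnt/vault` and the dated snapshot directories are hypothetical, "incrementals" are done with rsync's `--link-dest` hard-link snapshots, and spin-down uses `hdparm -Y` after `mdadm --stop`.

```python
from pathlib import PurePosixPath


def vault_backup_commands(sources, md_device, member_disks, mountpoint,
                          snapshot, previous=None):
    """Build (but do not run) the command sequence for one vault backup.

    All device names and paths are hypothetical placeholders. Assumes the
    array members are whole disks so hdparm can target them directly.
    """
    dest = PurePosixPath(mountpoint) / snapshot
    cmds = [
        ["mdadm", "--assemble", md_device] + list(member_disks),
        ["mount", md_device, mountpoint],
        ["mkdir", "-p", str(dest)],
    ]
    for src in sources:
        cmd = ["rsync", "-a", "--relative"]
        if previous is not None:
            # Unchanged files become hard links into the previous snapshot,
            # so each dated snapshot only costs the space of what changed.
            cmd.append(f"--link-dest={PurePosixPath(mountpoint) / previous}")
        cmd += [src, str(dest)]
        cmds.append(cmd)
    cmds += [
        ["umount", mountpoint],
        ["mdadm", "--stop", md_device],
    ]
    # Put each member disk into standby (spun down) once the array is stopped.
    cmds += [["hdparm", "-Y", disk] for disk in member_disks]
    return cmds


if __name__ == "__main__":
    for c in vault_backup_commands(["/storage/photos"], "/dev/md/vault",
                                   ["/dev/sdb", "/dev/sdc"], "/mnt/vault",
                                   "2023-01-15", previous="2023-01-08"):
        print(" ".join(c))
```

Keeping it as a plain list of commands means the vault stays "no fancy crap": each step is something you could type by hand from any Linux boot disk. If the array is auto-assembled at boot, the first `mdadm --assemble` step would simply be dropped.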

I would like to nominate the "unused" 2TB HDD as the primary backup pool for VMs and boot/rootfs. Again: mounted, backups run, unmounted, powered down. It's a 2TB drive backing up a 1TB pair, which leaves capacity for future growth.
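A minimal sketch of the same pattern for the 2TB VM backup drive, again as a dry-run command builder. It assumes Proxmox's `vzdump` writing straight into a directory on the mounted disk via `--dumpdir`, so no Proxmox storage entry has to stay online while the drive is asleep. The device `/dev/sdd1`, disk `/dev/sdd` and mount point `/mnt/vmbackup` are hypothetical. Note that `vzdump` covers guests (VMs and containers), not the Proxmox host's own rootfs, which would need a separate copy.

```python
def vm_backup_commands(partition, mountpoint, disk, mode="snapshot"):
    """Build (but do not run) one VM backup cycle to the spare drive.

    partition, mountpoint and disk are hypothetical placeholders.
    """
    return [
        ["mount", partition, mountpoint],
        # Back up every guest on this node into a plain directory on the
        # mounted disk; "snapshot" mode keeps the guests running.
        ["vzdump", "--all", "--mode", mode, "--compress", "zstd",
         "--dumpdir", mountpoint],
        ["umount", mountpoint],
        # Spin the whole disk down once it is unmounted.
        ["hdparm", "-Y", disk],
    ]


if __name__ == "__main__":
    for c in vm_backup_commands("/dev/sdd1", "/mnt/vmbackup", "/dev/sdd"):
        print(" ".join(c))
```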

So far I think that Proxmox alone can handle the above requirements.

The open question is how to manage the two bulk 6TB spinners and the assorted SSDs that are still fit for use.

I am willing to upgrade further and get rid of the smaller SSDs in favour of a dual NVMe riser card with another pair of 1TB Gen3 M.2s.

The trouble is... unless I subcategorise the "bulk media" into portions that can be moved to an SSD, I am stuck spinning up those drives every time an application refreshes a thumbnail. I have tried spinning them down, but ... with 4 of them in one USB enclosure ... if any one spins up, the enclosure spins all 4 up in sequence. That has to stay in the past somehow. I have considered using autofs and/or mergerfs so that out-of-hours writes land on the underlying partition and are merged up when the HDD is mounted. That sounds like an up-until-3am-fixing-stuff pattern.
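A minimal sketch of the "merge up later" half of that idea, assuming a mergerfs pool whose SSD branch is listed first with a create policy such as `category.create=ff`, so new files land on the SSD and appear at the same pool path either way. A scheduled job (run when the HDDs are awake anyway) then moves anything not modified for a while to the HDD branch. The branch paths and the 24-hour threshold are hypothetical, and this sketch does not check for files still open for writing, which a real mover would need to skip.

```python
import os
import shutil
import time
from pathlib import Path


def move_cold_files(ssd_root, hdd_root, min_age_hours=24, now=None):
    """Move files unmodified for `min_age_hours` from the SSD branch to the
    HDD branch, keeping the same relative path. Returns the moved paths."""
    ssd_root, hdd_root = Path(ssd_root), Path(hdd_root)
    cutoff = (time.time() if now is None else now) - min_age_hours * 3600
    moved = []
    for dirpath, _dirnames, filenames in os.walk(ssd_root):
        for name in filenames:
            src = Path(dirpath) / name
            if src.stat().st_mtime > cutoff:
                continue  # still "hot": leave it on the SSD
            rel = src.relative_to(ssd_root)
            dst = hdd_root / rel
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.move(str(src), str(dst))
            moved.append(rel.as_posix())
    return sorted(moved)
```

Because mergerfs presents the union of both branches, clients keep seeing the same path before and after the move; only the physical location changes.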

An SSD cache pool in front of the HDDs sounds great until you research its pitfalls. And no, it seems you absolutely cannot just power down the HDDs and write-cache on the SSDs for hours and hours... not while keeping your sanity. I might try it as an experiment, but ... again, I don't like fixing overly complicated home stuff in the evenings. Lab, yes; 24/7 reliable, no.

Someone must have come up with a solution to this by now: a pool of volumes striped in such a way that frequently accessed 'extents' or sectors can be migrated to higher-quality storage. You have a pool-level transaction log, yes? Can't it be analysed in batch, the access frequency determined, and migration candidates selected, moved and readdressed, repeating every few hours? Eventually the most-accessed data would end up in the "higher-quality" parts of the pool, hopefully enough of it that power management can spin the HDDs down for at least part of the day.
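The batch step described above can be sketched as a simple frequency-ranking planner. This is a hypothetical illustration of the idea, not how TrueNAS or ZFS actually place data: it assumes the log can be reduced to one extent id per access in the analysis window, that the fast tier holds a fixed number of extents, and it leaves out the actual moving and readdressing.

```python
from collections import Counter


def plan_migrations(access_log, on_fast_tier, fast_capacity):
    """Plan one batch of tier moves from a window of access records.

    access_log:    iterable of extent ids, one entry per access.
    on_fast_tier:  set of extent ids currently on the SSD tier.
    fast_capacity: number of extents the SSD tier can hold.
    Returns (promote, demote) as sorted lists of extent ids.
    """
    counts = Counter(access_log)
    candidates = set(counts) | set(on_fast_tier)
    # Hottest first; ties broken by extent id so the plan is deterministic.
    ranked = sorted(candidates, key=lambda e: (-counts[e], e))
    target = set(ranked[:fast_capacity])
    promote = sorted(target - set(on_fast_tier))
    demote = sorted(set(on_fast_tier) - target)
    return promote, demote


if __name__ == "__main__":
    log = [1, 1, 1, 2, 2, 3, 4, 4, 4, 4]
    print(plan_migrations(log, on_fast_tier={3, 5}, fast_capacity=2))
```

With that example log, extents 4 and 1 are the hottest, so they are promoted and 3 and 5 are demoted. A practical version would add hysteresis (only swap when the newcomer is clearly hotter) and cap the moves per batch, otherwise extents near the cut-off ping-pong between tiers and the migrations themselves keep the HDDs awake.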

I'd like to explore with you how TrueNAS or TrueNAS SCALE can help me pool and/or divvy up that 12TB of storage, and maybe use SSDs and M.2 drives to assist. As examples of the contents: there are 3TB of movies, TV series etc.; another 3TB of "camera memory card dumps", my actual created content, scratch drives and a whole ton of crap; and 1TB of assorted garbage.
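A quick capacity check, reading the listed contents as roughly 3 + 3 + 1 = 7TB and comparing the two obvious ways to pool two 6TB drives. It ignores filesystem overhead and the TB vs TiB difference, so the real figures would be somewhat lower.

```python
def usable_tb(drive_tb, layout):
    """Approximate usable capacity of a pool of two equal drives."""
    if layout == "mirror":
        return drive_tb        # every block is stored on both drives
    if layout == "stripe":
        return 2 * drive_tb    # no redundancy: losing one drive loses the pool
    raise ValueError(f"unknown layout: {layout}")


contents_tb = {
    "movies/TV": 3,
    "camera dumps, created content, scratch": 3,
    "assorted garbage": 1,
}
total = sum(contents_tb.values())

for layout in ("mirror", "stripe"):
    cap = usable_tb(6, layout)
    print(f"{layout}: {cap} TB usable, {cap - total:+} TB headroom")
```

This prints a 1TB shortfall for the mirror and 5TB of headroom for the stripe: mirroring the existing pair would not hold everything listed, while pooling it without redundancy leaves the vault pair as the only protection for the folders copied there.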

The other thing I expect is that I will need to either run TrueNAS exclusively or take on the hurdle of virtualising it on Proxmox. Luckily (I hope), I can give it access to a whole controller: either a PCIe SATA card, or the 6 onboard SATA ports, which are 'free'. It would be either the SATA card OR a dual M.2 riser; using both might be pushing the B550, especially alongside a 2.5Gb PCIe NIC. I have not yet confirmed whether I "CAN" pass through a PCIe controller card. Maybe I should start with that test.
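A minimal first step for that test is to see how the kernel has split PCI devices into IOMMU groups, since all devices in one group generally have to be passed through together. The sketch below reads `/sys/kernel/iommu_groups/<n>/devices/` (the root is a parameter only so it can be pointed elsewhere for testing). An empty result usually means the IOMMU is not enabled yet, in the BIOS and/or on the kernel command line.

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map IOMMU group number -> sorted PCI addresses in that group."""
    groups = {}
    base = Path(root)
    if not base.is_dir():
        return groups
    for group in base.iterdir():
        devices = group / "devices"
        if devices.is_dir():
            groups[int(group.name)] = sorted(d.name for d in devices.iterdir())
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for number, devices in iommu_groups().items():
        print(number, " ".join(devices))
```

Matching the printed addresses against `lspci -nn` shows which group the SATA controller or NVMe riser lands in; a controller that shares its group only with PCI bridges is the easy case, while one grouped with, say, the NIC would drag that device into the VM as well.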

So: tell me why I should or shouldn't consider TrueNAS virtualised on top of Proxmox, managing one (or more) SATA/NVMe controllers, with "austerity mode" HDD power management.
