TimTheSettler (Cadet · Joined Aug 11, 2021 · Messages: 4)

I wanted to post my experience with dedup.

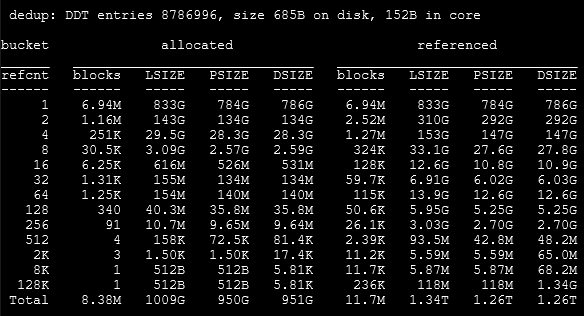

People tend to throw around numbers like, "for every TB of data you will need 5GB of RAM for dedup." Or you hear or read things like, "the trade-off with deduplication is reduced server RAM/CPU/SSD performance." I think that's such a bad generalization. The cost of dedup depends on the number of blocks: the smaller the recordsize, the smaller the blocks and therefore the more blocks there are, and more blocks means a bigger dedup table. It can be calculated quite simply as [Number of Blocks] * 320 bytes = [Size of Dedup table]. The trick is working out how many blocks you will have. Take a typical 4MB picture: if the recordsize is 128KB, it is split into about 32 blocks (4MB / 128KB = 32). That's not so bad.
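The per-file arithmetic above can be sketched in Python. This is a minimal sketch assuming the ~320 bytes per dedup-table entry figure from this post, full-size records and no compression; the helper names `block_count` and `ddt_bytes` are hypothetical and not part of any ZFS tool.

```python
import math

# Assumption: ~320 bytes per dedup-table entry, the rule of thumb used in this post.
DDT_ENTRY_BYTES = 320

KiB = 1024
MiB = 1024 * KiB


def block_count(file_size_bytes: int, recordsize_bytes: int) -> int:
    """Approximate number of records a file is split into (rounds up; ignores compression)."""
    return math.ceil(file_size_bytes / recordsize_bytes)


def ddt_bytes(num_blocks: int) -> int:
    """Estimated dedup-table size, assuming one entry per block (i.e. all blocks unique)."""
    return num_blocks * DDT_ENTRY_BYTES


picture = 4 * MiB
n = block_count(picture, 128 * KiB)
print(f"4 MiB picture at 128K recordsize: {n} blocks, ~{ddt_bytes(n):,} bytes of dedup table")
# prints "4 MiB picture at 128K recordsize: 32 blocks, ~10,240 bytes of dedup table"
```

Treat the result as an estimate: the real per-entry cost varies, and duplicate blocks share a single entry, so this is closer to an upper bound for a given file.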

Originally my vdev was set up with dedup and a recordsize of 4KB. It seemed like a good idea at the time: after all, if the recordsize is small enough, you would assume there would be a lot of identical blocks. It didn't turn out that way. Very few blocks were duplicated, and the small recordsize meant there were lots of blocks. The hard drives were constantly working and the servers had a hard time doing anything.
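To see why the 4KB recordsize hurt so much, the same rule of thumb can be scaled up to a whole terabyte. This is a rough sketch assuming ~320 bytes per entry, every record full-sized and every block unique (the worst case, which is close to what happened here since very few blocks were duplicated); `ddt_gib` is a hypothetical helper for illustration only.

```python
KiB = 1024
GiB = 1024 ** 3
TiB = 1024 ** 4

# Assumption: ~320 bytes per dedup-table entry, as quoted in this post.
DDT_ENTRY_BYTES = 320


def ddt_gib(data_bytes: int, recordsize_bytes: int) -> float:
    """Upper-bound dedup-table size in GiB: every record full-sized and unique."""
    blocks = data_bytes // recordsize_bytes
    return blocks * DDT_ENTRY_BYTES / GiB


for rs in (4 * KiB, 128 * KiB):
    print(f"recordsize {rs // KiB}K: ~{ddt_gib(1 * TiB, rs):.1f} GiB of dedup table per TiB")
# prints:
# recordsize 4K: ~80.0 GiB of dedup table per TiB
# recordsize 128K: ~2.5 GiB of dedup table per TiB
```

Under these assumptions, dropping the recordsize from 128K to 4K multiplies the block count, and therefore the dedup table, by 32.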

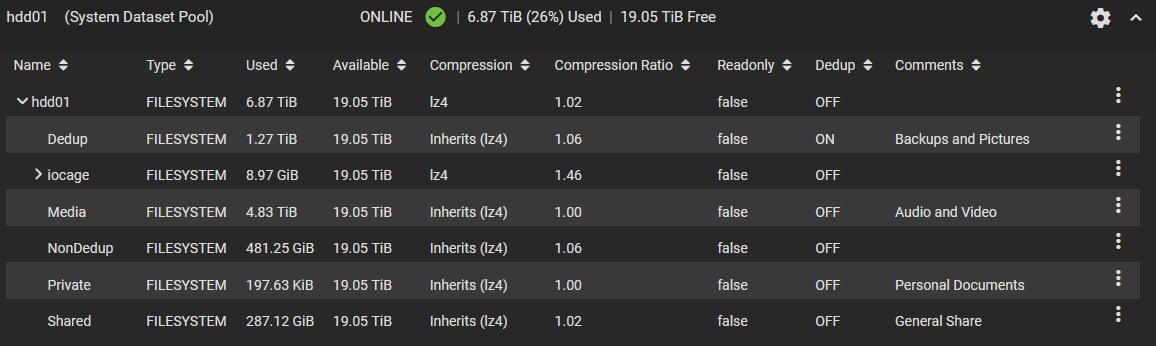

So I recreated the vdev and set up a couple of datasets, one named "dedup" with a recordsize of 128K. My intent was to save my backups and pictures to this dataset, since those tend to contain duplicate files. As the screenshot shows, the dataset has a nice 1.32 dedup ratio and uses about 1GB for 1TB of data. I also don't have problems with the hard drives or CPU. Note that compression is doing almost nothing for me, especially on my large Media dataset.