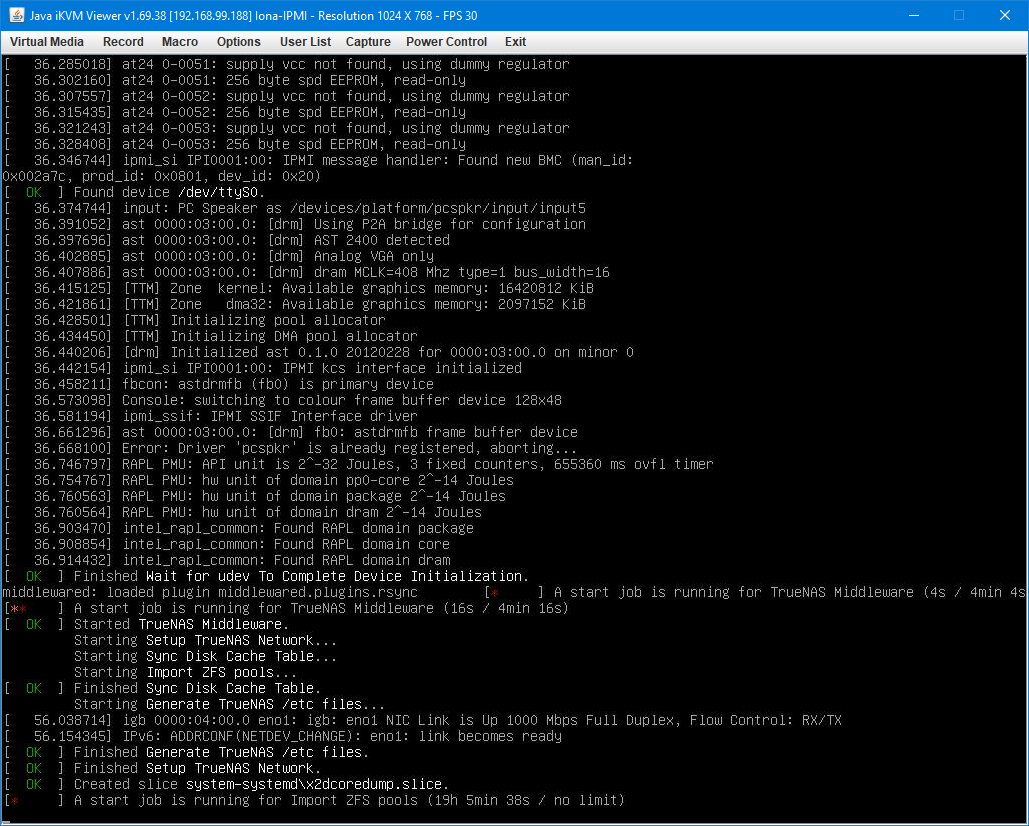

I'm currently having an issue where TrueNAS SCALE 21.06 fails to boot and appears to be stuck importing my pools. It has been stuck for over 19 hours now, so I'm wondering whether I should just give it more time or try something else.

Before rebooting, I had four pools: "Pool", which is 8 x 6 TB HDDs in RAID-Z2; "SSD", a single SSD of a few hundred GB that holds the system dataset; "boot", a pair of mirrored USB drives; and "ExternalHome", a single 6 TB drive where I made an external backup of my most essential files. I was going to take that backup to an offsite server that I recently got working again after a hardware failure.

I was a little optimistic about replication speeds to ExternalHome, so I tried to disconnect the pool before replication had finished on some non-essential datasets. The disconnect hung in the GUI and still hadn't completed after several minutes, so I eventually had to physically disconnect the drive so I could throw it in my car and leave for the offsite location. When I returned a couple of days later, the ExternalHome pool was still listed as unavailable, so I tried to disconnect it again, but it still hung, this time stopping at 80%. Later that night I noticed every CPU running at 100%, so I attempted a reboot. When it still hadn't come up after four hours, I noticed the error and tried reconnecting the ExternalHome drive, but it made no difference. Last night I just let it run, and it has now been going for 19 hours.

My theories are that a) ZFS is running some equivalent of chkdsk and it's going to take a while, b) it's trying to remount ExternalHome and having difficulties, or c) something terrible has happened to one of my pools. Before I try a fresh TrueNAS install, I figured I'd check in with some more knowledgeable people to see how long I should wait, and perhaps get some advice on attempting a recovery if something truly bad has happened.