I'm currently testing my future backup and restore strategy: replicating my datasets, as well as the Zvols I use for VMs, to a second TrueNAS device (which will later be moved offsite).

While backing up and restoring datasets via Replication works like a charm, I'm having trouble getting replicated Zvols to run for VMs again.

I've set up my server A to push replications via SSH to server B, and then pull them back from B to A again.

I can't select the Zvol for VM usage on either server B or server A (after receiving the replicated Zvol back again), even with "Read Only" set to "Off" on the Zvol. I've also tried a "Full Filesystem Replication", to no avail.

I then set up another Zvol, identical to the original one, and copied the contents of the replicated Zvol over to the new one via "dd". I lose my snapshots in the process, but lo and behold, I'm able to use this Zvol for a VM and it boots up correctly.
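For reference, a block-level copy of this kind can be sketched as below. This is only an illustration, not the exact command that was run: the helper name `copy_zvol` and the device paths in the usage comment are hypothetical placeholders, and it assumes GNU `dd` with Zvols exposed under `/dev/zvol/<pool>/<name>`, as on TrueNAS SCALE.

```shell
# Sketch: copy the raw contents of one block device (or file) to another.
# copy_zvol is a hypothetical helper name; both arguments are paths.
copy_zvol() {
    src="$1"
    dst="$2"
    # bs=1M       copy in 1 MiB blocks
    # conv=fsync  flush the written data to the target before dd exits
    # status=progress  print transfer progress to stderr (GNU dd)
    dd if="$src" of="$dst" bs=1M conv=fsync status=progress
}

# Example usage (placeholder paths, adjust pool and Zvol names):
#   copy_zvol /dev/zvol/tank/replicated-vm-disk /dev/zvol/tank/new-vm-disk
```

The target Zvol needs to be at least as large as the source, and only the current data is copied, so snapshots on the source are not carried over.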

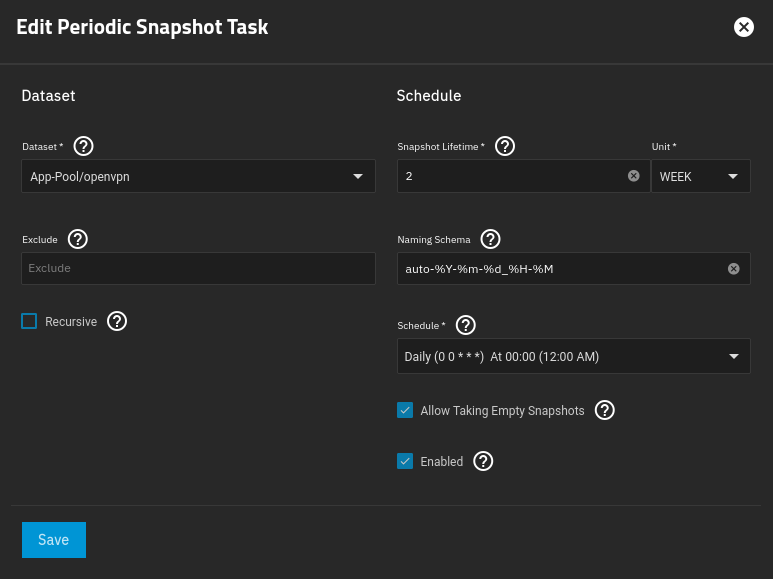

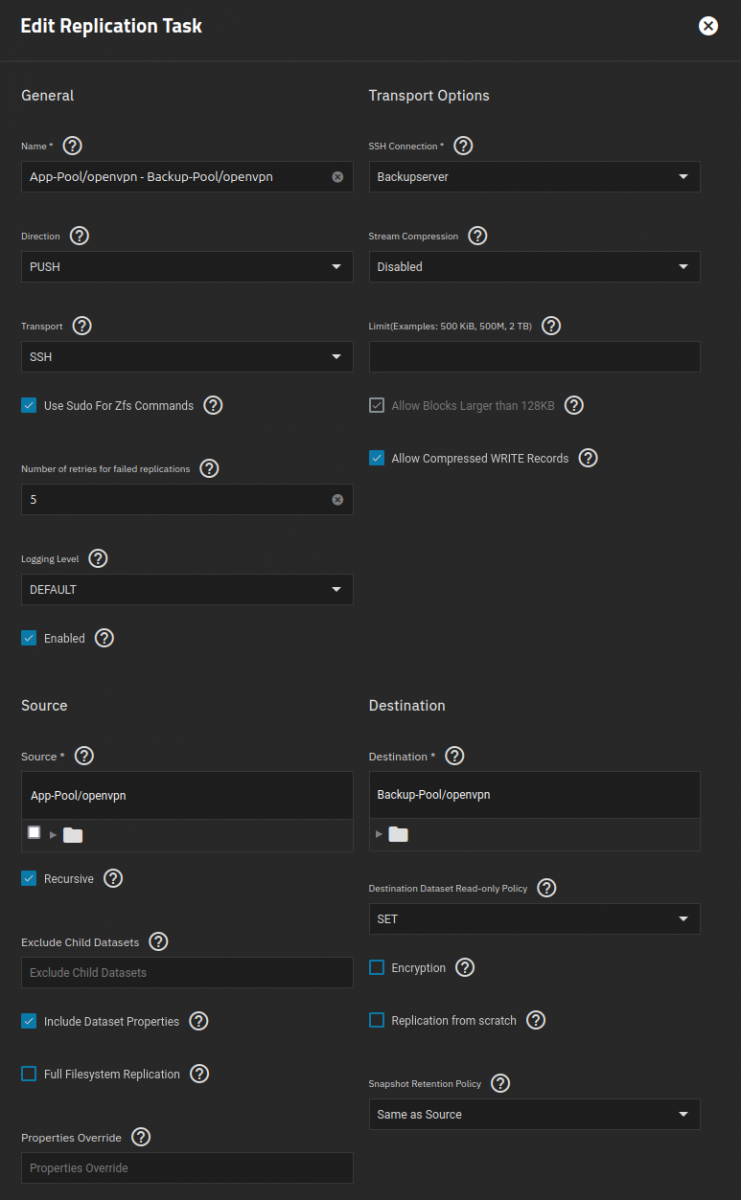

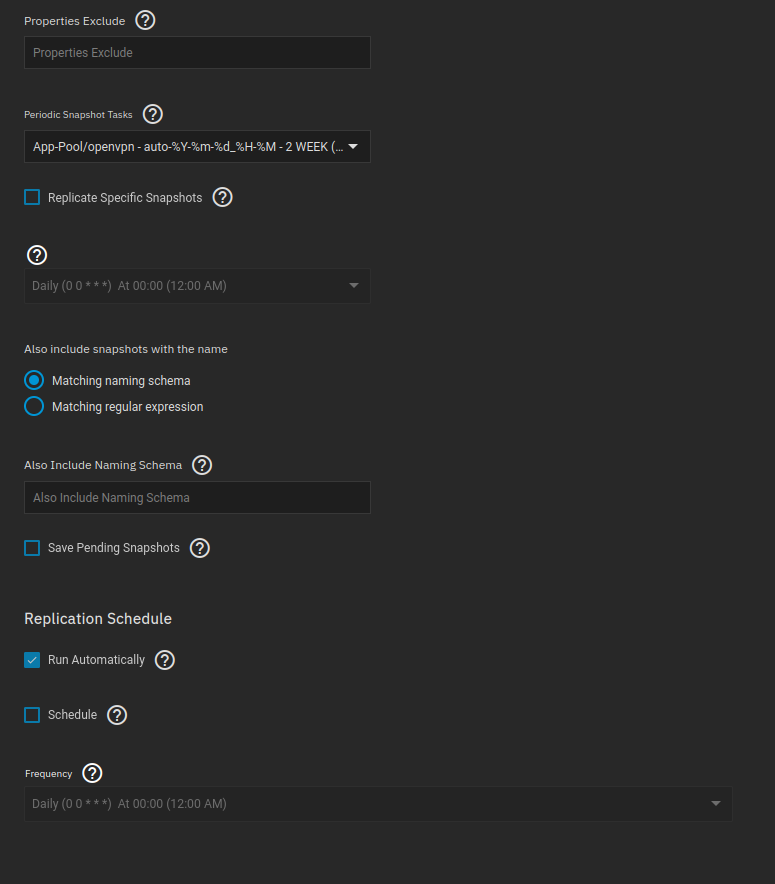

Is there a way to directly use a replicated Zvol for a VM? Is there maybe something wrong with my snapshot/replication tasks (see screenshots)?

I'm running version 22.12.3.2 on both machines.
