That's a dangerous game to play. If your assumptions turn out wrong, the whole thing can come to a screeching halt and a crash. Your datastore, that is.

Hey jgreco, sorry to bother you, but I have much the same question as the original poster, although I'm not trying to create another zvol. I'm just asking about the free space reported by ESXi.

In my situation ....

So like the original poster, my question is:

I have a 12.1TB ZVOL created for iSCSI and presented to my ESXi hosts. I have thin-provisioned 7.29TB of guests, which appear to represent about 4.71TB of actual raw data. That leaves 8.20TB of raw available storage from ESXi's perspective.

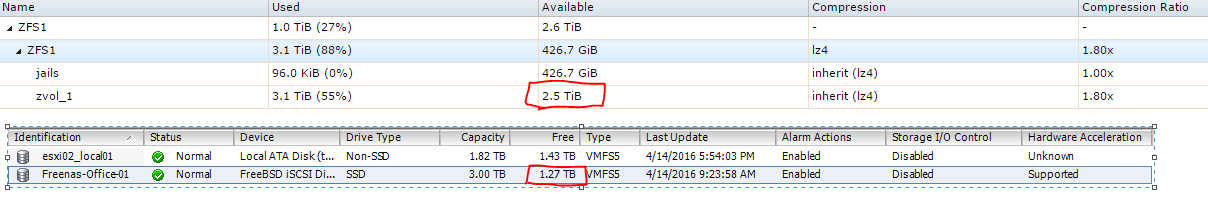

On the FreeNAS side, you can see that I'm actually getting 1.32x compression, so FreeNAS figures that I have 11.5TB of the 12.1TB available. If I'm following this right, FreeNAS takes the 12.1TB zvol size and multiplies it by the current 1.32x compression ratio, treating the zvol's effective size with compression as about 15.972TB. Subtract the 4.71TB of raw usage that VMware reports and you get 11.262TB. The numbers don't match exactly, but they're close. Is this where the math comes from?
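To check that guess, here is the arithmetic from the paragraph above as a small Python sketch. It only reproduces my hypothesis (scale the zvol size by the compression ratio, then subtract what ESXi reports as used); it is not FreeNAS's documented space accounting, and the constant and function names are made up for illustration.

```python
# Hypothesis from the post: FreeNAS scales the zvol size by the compression
# ratio, then subtracts the logical usage ESXi reports. All figures are in TB
# and come from the post; this is a sketch of the guess, not FreeNAS's
# documented accounting. Names below are hypothetical.

ZVOL_SIZE_TB = 12.1               # zvol size presented to ESXi over iSCSI
COMPRESS_RATIO = 1.32             # compression ratio shown by FreeNAS
ESXI_USED_TB = 4.71               # raw data ESXi says the guests occupy
FREENAS_REPORTED_FREE_TB = 11.5   # "available" figure FreeNAS shows


def hypothesized_free(size_tb, ratio, used_tb):
    """Return (effective size, hypothesized free space) under the guess."""
    effective = size_tb * ratio   # zvol size "inflated" by compression
    return effective, effective - used_tb


effective, free = hypothesized_free(ZVOL_SIZE_TB, COMPRESS_RATIO, ESXI_USED_TB)
print(f"effective size:         {effective:.3f} TB")
print(f"hypothesized free:      {free:.3f} TB")
print(f"gap vs. FreeNAS figure: {FREENAS_REPORTED_FREE_TB - free:.3f} TB")
```

This prints an effective size of 15.972TB and a hypothesized free figure of 11.262TB, about 0.24TB short of the 11.5TB FreeNAS shows. That is the "close but not exact" mismatch.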

So, assuming that is the case, I think the original poster's question (which is the same question I have) is this: with compression enabled, as this setup runs today, what happens on the ESXi side when I fill the datastore with 12.1TB of raw data? I'm not saying it's smart to fill it to 100%, but let's just assume that situation for a second. I would assume ESXi is going to say that the filesystem is full. And yet, even though it's full, FreeNAS would theoretically report (assuming the 1.32x compression ratio holds) that there is about 3TB of free space still available on the ZVOL.
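Here is a simplified Python model of the "fill it to 100%" scenario, showing where the "about 3TB" comes from. It assumes the 1.32x ratio holds for all data written and that the free space FreeNAS reports on the zvol is simply its size minus the compressed footprint of what ESXi has written. Both are my assumptions, not how ZFS actually reports zvol space, and the function name is hypothetical.

```python
# Simplified model of filling the iSCSI datastore to 100%.
# Assumptions (not FreeNAS/ZFS documented behavior): the 1.32x ratio holds
# for all new data, and the pool-side free figure is the zvol size minus
# the compressed (physical) footprint of what ESXi has written.

ZVOL_SIZE_TB = 12.1
COMPRESS_RATIO = 1.32


def free_space_views(logical_written_tb, size_tb=ZVOL_SIZE_TB, ratio=COMPRESS_RATIO):
    """Return (free as ESXi sees it, free under the pool-side model), in TB."""
    # ESXi only knows the LUN size, so every logical TB written counts in full.
    esxi_free = size_tb - logical_written_tb
    # ZFS stores the same data compressed, so it takes less physical space.
    physical_used = logical_written_tb / ratio
    pool_free = size_tb - physical_used
    return esxi_free, pool_free


esxi_free, pool_free = free_space_views(12.1)
print(f"ESXi free:      {esxi_free:.2f} TB")
print(f"pool-side free: {pool_free:.2f} TB")
```

With 12.1TB written, ESXi shows 0.00TB free while the model shows roughly 2.93TB. Under the assumptions above, that gap is the space the datastore cannot reach, because ESXi only knows the LUN's 12.1TB size.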

So I think the question here is this: if you are only using FreeNAS as an ESXi store, is there a benefit to using compression? (In my case, I am. I know I have the CIFS mount there, but I'm using only about 15GB of it for some ISO files and text config backups from some network gear, and that usage is never going to grow.) It seems to me that at the end of the day compression doesn't really "buy" you anything, because any net savings from compression is negated by the fact that ESXi won't see those savings. To actually see the net savings, it seems you'd almost have to use NFS instead of iSCSI.

Sorry for the newbie question, but if I could beg you for a "teach me something new" response, I would greatly appreciate it!