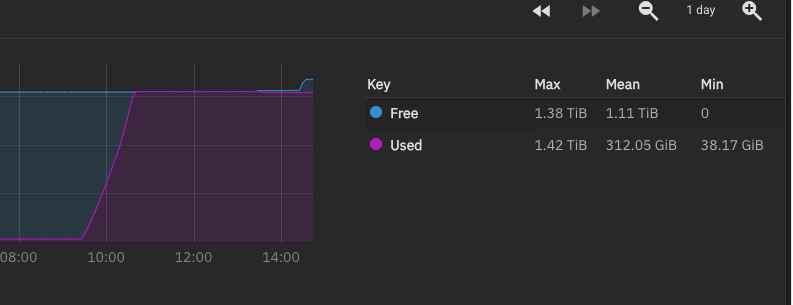

Today morning one of the pools reached 100% in roughly an hour:

During this period, two users were working on a different pool. They have no access to this one, and it doesn't get much use: it only holds a VM and a few containers. There's no obvious reason why this happened, yet SMB became inaccessible. I can't find what caused it, because none of the commands show me where this 1.3TB is being used. I've already checked for snapshots, wiped all the containers, and temporarily moved data elsewhere, but nothing really helped. I managed to free up enough space to get SMB working again, but I don't know what to do if this happens again. Does anyone have an idea?
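
To see which top-level directories under a mountpoint hold the most data, without descending into child datasets mounted below it, `du -x` can be kept on a single filesystem. This is a minimal sketch, assuming GNU coreutils `du` (as on Debian-based TrueNAS SCALE); `largest_dirs` is a hypothetical helper name, not a TrueNAS command:

```shell
# Hypothetical helper: list the N largest first-level entries under a path, in KiB,
# without crossing into other filesystems (each mounted ZFS dataset is its own filesystem).
largest_dirs() {
  dir=$1
  count=${2:-10}
  # -x: stay on the starting filesystem; -d 1: report only first-level entries;
  # -k: sizes in KiB so that sort -n orders them numerically.
  du -x -d 1 -k "$dir" | sort -n | tail -n "$count"
}

# Usage on the NAS (as root, so du can read every directory):
#   largest_dirs /mnt/SSD 15
```

The last line printed is the total for the starting directory itself; the lines above it are the largest subdirectories that live on the same dataset.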


```
zfs list -r SSD -t all
NAME                                                          USED   AVAIL  REFER  MOUNTPOINT
SSD                                                           1.52T  239G   1.41T  /mnt/SSD
SSD/.system                                                   2.30G  239G   2.12G  legacy
SSD/.system/configs-03127bf8be404c849cf141a05d5f9b13          52.0M  239G   52.0M  legacy
SSD/.system/cores                                             300K   1024M  300K   legacy
SSD/.system/ctdb_shared_vol                                   96K    239G   96K    legacy
SSD/.system/glusterd                                          96K    239G   96K    legacy
SSD/.system/rrd-03127bf8be404c849cf141a05d5f9b13              82.4M  239G   82.4M  legacy
SSD/.system/samba4                                            6.34M  239G   772K   legacy
SSD/.system/samba4@update--2022-02-10-12-49--12.0-U5.1        168K   -      508K   -
SSD/.system/samba4@wbc-1644497790                             168K   -      508K   -
SSD/.system/samba4@wbc-1644840524                             340K   -      516K   -
SSD/.system/samba4@wbc-1648719127                             428K   -      556K   -
SSD/.system/samba4@wbc-1652698096                             244K   -      540K   -
SSD/.system/samba4@wbc-1652700008                             232K   -      540K   -
SSD/.system/samba4@wbc-1652703977                             216K   -      540K   -
SSD/.system/samba4@wbc-1653312199                             216K   -      540K   -
SSD/.system/samba4@wbc-1653313603                             216K   -      540K   -
SSD/.system/samba4@wbc-1653590670                             248K   -      540K   -
SSD/.system/samba4@wbc-1653768564                             256K   -      548K   -
SSD/.system/samba4@wbc-1657276404                             224K   -      556K   -
SSD/.system/samba4@wbc-1657289419                             224K   -      556K   -
SSD/.system/samba4@wbc-1673785991                             216K   -      556K   -
SSD/.system/samba4@update--2023-01-15-13-27--SCALE-22.02.1    184K   -      560K   -
SSD/.system/samba4@wbc-1673789450                             176K   -      556K   -
SSD/.system/samba4@update--2023-02-02-20-30--SCALE-22.02.4    372K   -      580K   -
SSD/.system/samba4@update--2023-07-18-06-39--SCALE-22.12.0    356K   -      620K   -
SSD/.system/samba4@update--2023-08-02-18-44--SCALE-22.12.3.2  396K   -      660K   -
SSD/.system/services                                          96K    239G   96K    legacy
SSD/.system/syslog-03127bf8be404c849cf141a05d5f9b13           45.0M  239G   45.0M  legacy
SSD/.system/webui                                             96K    239G   96K    legacy
SSD/SSDDataset                                                38.7G  239G   38.7G  /mnt/SSD/SSDDataset
SSD/iocage                                                    1.86G  239G   12.0M  /mnt/SSD/iocage
SSD/iocage/download                                           402M   239G   96K    /mnt/SSD/iocage/download
SSD/iocage/download/12.2-RELEASE                              402M   239G   402M   /mnt/SSD/iocage/download/12.2-RELEASE
SSD/iocage/images                                             96K    239G   96K    /mnt/SSD/iocage/images
SSD/iocage/jails                                              96K    239G   96K    /mnt/SSD/iocage/jails
SSD/iocage/log                                                96K    239G   96K    /mnt/SSD/iocage/log
SSD/iocage/releases                                           1.45G  239G   96K    /mnt/SSD/iocage/releases
SSD/iocage/releases/12.2-RELEASE                              1.45G  239G   96K    /mnt/SSD/iocage/releases/12.2-RELEASE
SSD/iocage/releases/12.2-RELEASE/root                         1.45G  239G   1.45G  /mnt/SSD/iocage/releases/12.2-RELEASE/root
SSD/iocage/templates                                          96K    239G   96K    /mnt/SSD/iocage/templates
SSD/ix-applications                                           464M   239G   172K   /mnt/SSD/ix-applications
SSD/ix-applications/catalogs                                  42.7M  239G   42.7M  /mnt/SSD/ix-applications/catalogs
SSD/ix-applications/default_volumes                           96K    239G   96K    /mnt/SSD/ix-applications/default_volumes
SSD/ix-applications/docker                                    289M   239G   289M   /mnt/SSD/ix-applications/docker
SSD/ix-applications/k3s                                       132M   239G   132M   /mnt/SSD/ix-applications/k3s
SSD/ix-applications/k3s/kubelet                               280K   239G   280K   legacy
SSD/ix-applications/releases                                  96K    239G   96K    /mnt/SSD/ix-applications/releases
SSD/virtual-machines                                          72.4G  239G   6.40G  /mnt/SSD/virtual-machines
SSD/virtual-machines/perforcew10-izjhsr                       66.0G  272G   33.5G  -
```
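
The listing above only shows the combined USED column. `zfs list -o space` splits USED into USEDSNAP, USEDDS, USEDREFRESERV and USEDCHILD, and with `-H -p` the output becomes tab-separated exact byte counts that are easy to post-process. A minimal sketch, assuming that column order; `largest_usedds` is a hypothetical helper name:

```shell
# Hypothetical helper: report which dataset holds the most data of its own (USEDDS),
# i.e. excluding its snapshots, refreservation and child datasets.
# Expects `zfs list -Hp -o space` lines on stdin, tab-separated in this order:
#   NAME AVAIL USED USEDSNAP USEDDS USEDREFRESERV USEDCHILD
largest_usedds() {
  awk -F'\t' '
    BEGIN { max = -1 }
    $5 + 0 > max { max = $5 + 0; name = $1 }
    END { if (name != "") printf "%s %.2f GiB\n", name, max / 1073741824 }
  '
}

# Usage on the NAS:
#   zfs list -Hp -o space -r SSD | largest_usedds
```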

```
zfs get all SSD
NAME  PROPERTY                VALUE                  SOURCE
SSD   type                    filesystem             -
SSD   creation                Wed Feb 10 12:03 2021  -
SSD   used                    1.52T                  -
SSD   available               239G                   -
SSD   referenced              1.41T                  -
SSD   compressratio           1.00x                  -
SSD   mounted                 yes                    -
SSD   quota                   none                   default
SSD   reservation             none                   default
SSD   recordsize              128K                   default
SSD   mountpoint              /mnt/SSD               default
SSD   sharenfs                off                    default
SSD   checksum                on                     default
SSD   compression             lz4                    local
SSD   atime                   on                     default
SSD   devices                 on                     default
SSD   exec                    on                     default
SSD   setuid                  on                     default
SSD   readonly                off                    default
SSD   zoned                   off                    default
SSD   snapdir                 hidden                 default
SSD   aclmode                 passthrough            local
SSD   aclinherit              passthrough            local
SSD   createtxg               1                      -
SSD   canmount                on                     default
SSD   xattr                   on                     default
SSD   copies                  1                      default
SSD   version                 5                      -
SSD   utf8only                off                    -
SSD   normalization           none                   -
SSD   casesensitivity         sensitive              -
SSD   vscan                   off                    default
SSD   nbmand                  off                    default
SSD   sharesmb                off                    default
SSD   refquota                none                   default
SSD   refreservation          none                   default
SSD   guid                    18199034206595265360   -
SSD   primarycache            all                    default
SSD   secondarycache          all                    default
SSD   usedbysnapshots         0B                     -
SSD   usedbydataset           1.41T                  -
SSD   usedbychildren          116G                   -
SSD   usedbyrefreservation    0B                     -
SSD   logbias                 latency                default
SSD   objsetid                54                     -
SSD   dedup                   off                    default
SSD   mlslabel                none                   default
SSD   sync                    standard               default
SSD   dnodesize               legacy                 default
SSD   refcompressratio        1.00x                  -
SSD   written                 1.41T                  -
SSD   logicalused             1.50T                  -
SSD   logicalreferenced       1.41T                  -
SSD   volmode                 default                default
SSD   filesystem_limit        none                   default
SSD   snapshot_limit          none                   default
SSD   filesystem_count        none                   default
SSD   snapshot_count          none                   default
SSD   snapdev                 hidden                 default
SSD   acltype                 nfsv4                  default
SSD   context                 none                   default
SSD   fscontext               none                   default
SSD   defcontext              none                   default
SSD   rootcontext             none                   default
SSD   relatime                off                    default
SSD   redundant_metadata      all                    default
SSD   overlay                 on                     default
SSD   encryption              off                    default
SSD   keylocation             none                   default
SSD   keyformat               none                   default
SSD   pbkdf2iters             0                      default
SSD   special_small_blocks    0                      default
SSD   org.freebsd.ioc:active  yes                    local
```
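
In the `zfs get` output, the `usedby*` properties split the root dataset's USED (1.52T) into buckets: `usedbydataset` 1.41T, `usedbychildren` 116G, `usedbysnapshots` 0B and `usedbyrefreservation` 0B. Going by those figures, 1.41T + 116G accounts for roughly the full 1.52T, which would put the space in files stored directly in the `SSD` dataset rather than in snapshots or child datasets. A small sketch for pulling out just those properties, assuming `zfs get -Hp` tab-separated output; `usedby_breakdown` is a hypothetical helper name:

```shell
# Hypothetical helper: print the usedby* breakdown of a dataset in GiB.
# Expects `zfs get -Hp` lines on stdin (tab-separated: NAME PROPERTY VALUE SOURCE);
# all other properties are ignored.
usedby_breakdown() {
  awk -F'\t' '$2 ~ /^usedby/ { printf "%s %.2f GiB\n", $2, $3 / 1073741824 }'
}

# Usage on the NAS:
#   zfs get -Hp usedbydataset,usedbychildren,usedbysnapshots,usedbyrefreservation SSD | usedby_breakdown
```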