Elliot Dierksen

I just upgraded my two FreeNAS systems from Chelsio T520 NICs to Chelsio T580 40G NICs. Both systems are Cisco C240 M3S units with LSI-9207-8i and LSI-9207-8e and are running FreeNAS 11.1-U7. The primary unit has dual E5-2637 v2 @ 3.50GHz CPUs and 256G RAM. The secondary has dual E5-2637 @ 3.00GHz CPUs and 128G RAM. The switch is a Cisco Nexus 3000 C3064PQ, and I am using 2 m OM4 fiber cables and new QSFP+ modules from FS.COM. The iperf3 performance isn't terrible, but it is certainly a lot less than I had hoped for or expected.

The switch is running NX-OS version 7.0(3)I7(6). I am not using jumbo frames at the moment. The ESXi hosts access the FreeNAS datastores, which are RAIDZ2, via NFS. I haven't compared anything with the datastores yet; I am only examining the iperf3 data.

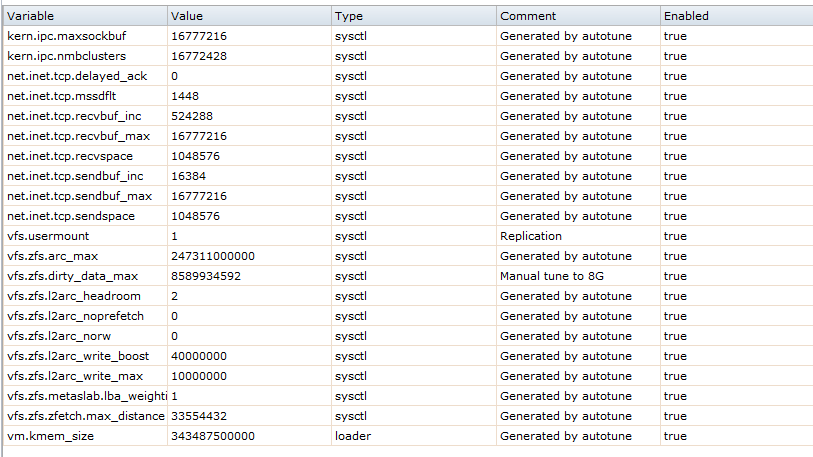

These are the tunables from the primary:

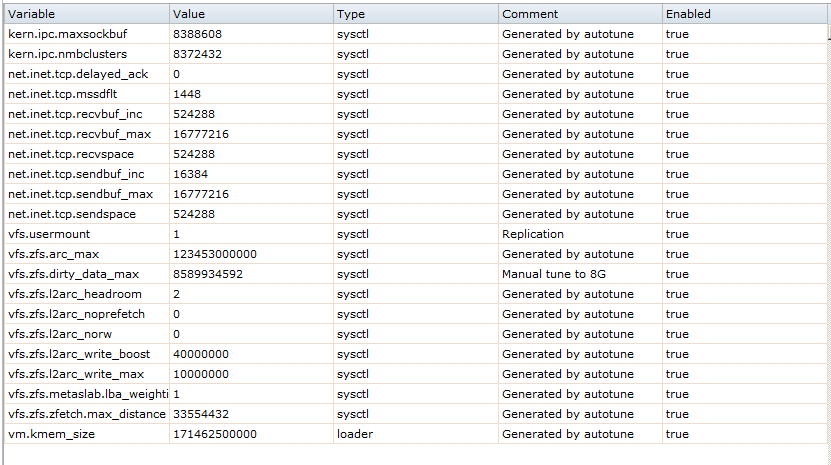

And these are the tunables from the secondary.

Code:

```
Primary as client:
Connecting to host 192.168.252.23, port 5201
[  5] local 192.168.252.27 port 18741 connected to 192.168.252.23 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec  1.97 GBytes  16.9 Gbits/sec    0    841 KBytes
[  5]   1.00-2.00   sec  1.73 GBytes  14.9 Gbits/sec    0    845 KBytes
[  5]   2.00-3.00   sec  1.96 GBytes  16.8 Gbits/sec    0    872 KBytes
[  5]   3.00-4.00   sec  1.68 GBytes  14.5 Gbits/sec    0    872 KBytes
[  5]   4.00-5.00   sec  1.71 GBytes  14.7 Gbits/sec    0    897 KBytes
[  5]   5.00-6.00   sec  1.73 GBytes  14.8 Gbits/sec    0    912 KBytes
[  5]   6.00-7.00   sec  1.75 GBytes  15.0 Gbits/sec    0    920 KBytes
[  5]   7.00-8.00   sec  1.87 GBytes  16.1 Gbits/sec    0    958 KBytes
[  5]   8.00-9.00   sec  1.70 GBytes  14.6 Gbits/sec    0    982 KBytes
[  5]   9.00-10.00  sec  1.83 GBytes  15.7 Gbits/sec    0    982 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  17.9 GBytes  15.4 Gbits/sec    0             sender
[  5]   0.00-10.00  sec  17.9 GBytes  15.4 Gbits/sec                  receiver

Secondary as client:
Connecting to host 192.168.252.27, port 5201
[  5] local 192.168.252.23 port 38278 connected to 192.168.252.27 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec  2.06 GBytes  17.7 Gbits/sec    0    594 KBytes
[  5]   1.00-2.00   sec  2.05 GBytes  17.6 Gbits/sec    0    594 KBytes
[  5]   2.00-3.00   sec  2.98 GBytes  25.6 Gbits/sec    0   5.44 MBytes
[  5]   3.00-4.00   sec  3.03 GBytes  26.0 Gbits/sec  530    818 KBytes
[  5]   4.00-5.00   sec  2.87 GBytes  24.6 Gbits/sec    0    818 KBytes
[  5]   5.00-6.00   sec  2.80 GBytes  24.1 Gbits/sec    0    818 KBytes
[  5]   6.00-7.00   sec  2.82 GBytes  24.2 Gbits/sec   66    495 KBytes
[  5]   7.00-8.00   sec  2.05 GBytes  17.6 Gbits/sec    0    595 KBytes
[  5]   8.00-9.00   sec  2.05 GBytes  17.6 Gbits/sec    0    595 KBytes
[  5]   9.00-10.00  sec  2.05 GBytes  17.6 Gbits/sec    0    595 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  24.8 GBytes  21.3 Gbits/sec  596             sender
[  5]   0.00-10.02  sec  24.7 GBytes  21.2 Gbits/sec                  receiver
```
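To make the two directions easier to compare, here is a rough Python sketch that summarizes the per-second interval lines from the iperf3 runs above. The `summarize` helper, the regular expression, and the `LINK_GBPS` constant (the nominal 40G port speed) are illustrative choices of mine, not part of iperf3 or FreeNAS, and the pattern only assumes the column layout shown in this output.

```python
import re
from statistics import mean

# Nominal port speed of the 40G NICs; an assumption used only to express
# the mean bitrate as a percentage of line rate.
LINK_GBPS = 40.0

# Per-second interval lines copied from the two runs above.
PRIMARY_AS_CLIENT = """
[ 5] 0.00-1.00 sec 1.97 GBytes 16.9 Gbits/sec 0 841 KBytes
[ 5] 1.00-2.00 sec 1.73 GBytes 14.9 Gbits/sec 0 845 KBytes
[ 5] 2.00-3.00 sec 1.96 GBytes 16.8 Gbits/sec 0 872 KBytes
[ 5] 3.00-4.00 sec 1.68 GBytes 14.5 Gbits/sec 0 872 KBytes
[ 5] 4.00-5.00 sec 1.71 GBytes 14.7 Gbits/sec 0 897 KBytes
[ 5] 5.00-6.00 sec 1.73 GBytes 14.8 Gbits/sec 0 912 KBytes
[ 5] 6.00-7.00 sec 1.75 GBytes 15.0 Gbits/sec 0 920 KBytes
[ 5] 7.00-8.00 sec 1.87 GBytes 16.1 Gbits/sec 0 958 KBytes
[ 5] 8.00-9.00 sec 1.70 GBytes 14.6 Gbits/sec 0 982 KBytes
[ 5] 9.00-10.00 sec 1.83 GBytes 15.7 Gbits/sec 0 982 KBytes
"""

SECONDARY_AS_CLIENT = """
[ 5] 0.00-1.00 sec 2.06 GBytes 17.7 Gbits/sec 0 594 KBytes
[ 5] 1.00-2.00 sec 2.05 GBytes 17.6 Gbits/sec 0 594 KBytes
[ 5] 2.00-3.00 sec 2.98 GBytes 25.6 Gbits/sec 0 5.44 MBytes
[ 5] 3.00-4.00 sec 3.03 GBytes 26.0 Gbits/sec 530 818 KBytes
[ 5] 4.00-5.00 sec 2.87 GBytes 24.6 Gbits/sec 0 818 KBytes
[ 5] 5.00-6.00 sec 2.80 GBytes 24.1 Gbits/sec 0 818 KBytes
[ 5] 6.00-7.00 sec 2.82 GBytes 24.2 Gbits/sec 66 495 KBytes
[ 5] 7.00-8.00 sec 2.05 GBytes 17.6 Gbits/sec 0 595 KBytes
[ 5] 8.00-9.00 sec 2.05 GBytes 17.6 Gbits/sec 0 595 KBytes
[ 5] 9.00-10.00 sec 2.05 GBytes 17.6 Gbits/sec 0 595 KBytes
"""

# Matches only per-interval lines: they end in a Cwnd column, which the
# final sender/receiver summary lines do not have.
INTERVAL_RE = re.compile(
    r"\[\s*\d+\]\s+[\d.]+-[\d.]+\s+sec\s+[\d.]+\s+GBytes\s+"
    r"([\d.]+)\s+Gbits/sec\s+(\d+)\s+[\d.]+\s+[KM]Bytes"
)


def summarize(iperf3_text):
    """Return basic throughput statistics for one iperf3 client run."""
    rows = INTERVAL_RE.findall(iperf3_text)
    rates = [float(rate) for rate, _ in rows]
    avg = mean(rates)  # raises StatisticsError if no interval lines matched
    return {
        "intervals": len(rates),
        "mean_gbps": avg,
        "min_gbps": min(rates),
        "max_gbps": max(rates),
        "retransmits": sum(int(retr) for _, retr in rows),
        "pct_of_link": 100.0 * avg / LINK_GBPS,
    }


if __name__ == "__main__":
    for label, text in (("primary as client", PRIMARY_AS_CLIENT),
                        ("secondary as client", SECONDARY_AS_CLIENT)):
        s = summarize(text)
        print(f"{label}: mean {s['mean_gbps']:.1f} Gbit/s "
              f"(min {s['min_gbps']}, max {s['max_gbps']}), "
              f"{s['retransmits']} retransmits, "
              f"{s['pct_of_link']:.0f}% of {LINK_GBPS:.0f}G")
```

The script only restates what the output already shows: neither direction gets close to line rate, and the retransmits appear only in the run with the secondary as client.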

The switch port configs could hardly be more vanilla:

Code:

```
interface Ethernet1/49
  description FreeNAS2
  switchport access vlan 252
  spanning-tree link-type point-to-point

interface Ethernet1/50
  description FreeNAS
  switchport access vlan 252
  spanning-tree link-type point-to-point
```

FYI, FreeNAS2 is the primary. Any ideas on where to hunt?