Hello everybody,

I have a small problem and would like some advice on where to look in order to fix it.

My current setup consists of:

1. PC: Ryzen 5 3600 + 32 GB RAM + 970 PRO SSD, with an onboard 10 Gbps Aquantia NIC on an X470 Taichi Ultimate

2. Microserver Gen8 (16 GB, Xeon E3-1265L v2) with 2x 1 TB Red and 1x 256 GB Samsung 850 Pro. It has an SFP+ Mellanox ConnectX-2 (MNPA19-XTR) with the MikroTik S+RJ10 module

They are connected directly with static IPs (PC >> Cat6 cable >> Cat6 wall socket >> Cat6 cable in the wall >> Cat6a keystone patch panel >> Cat6 patch cable).

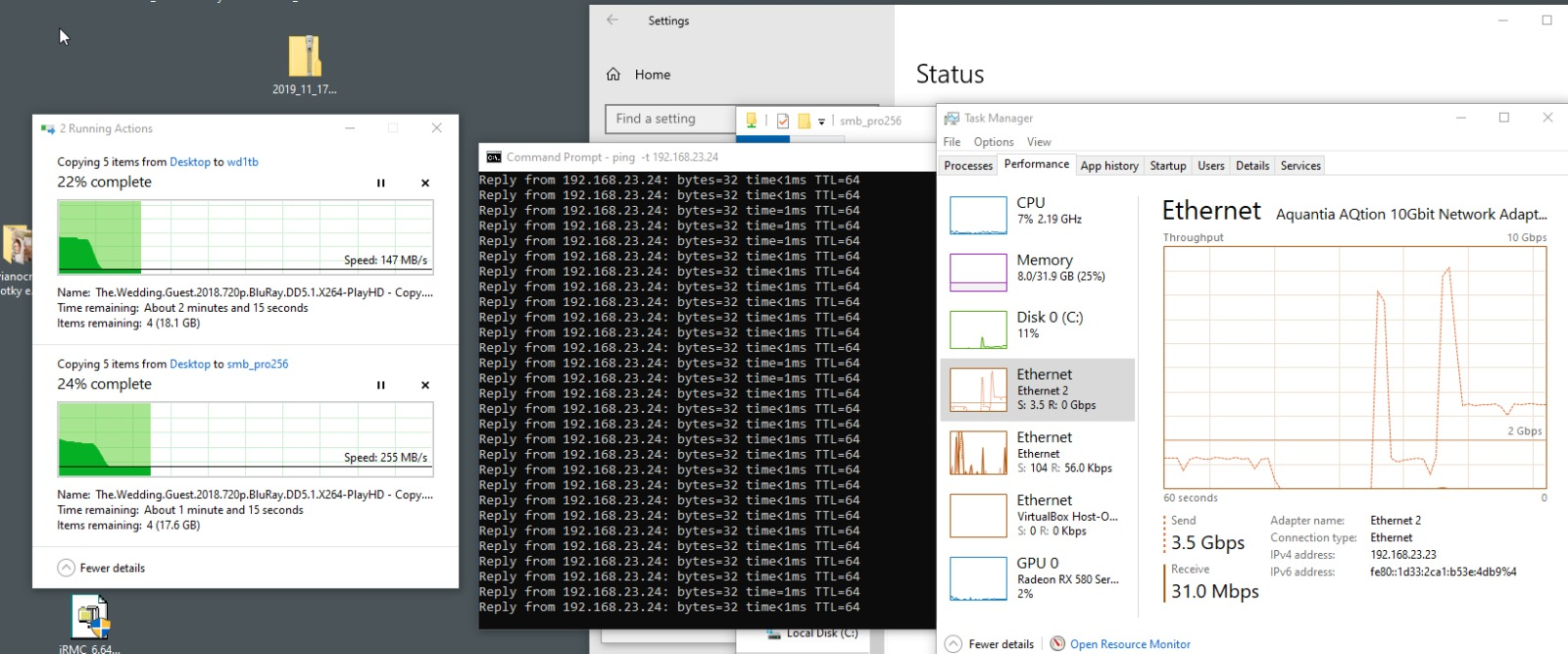

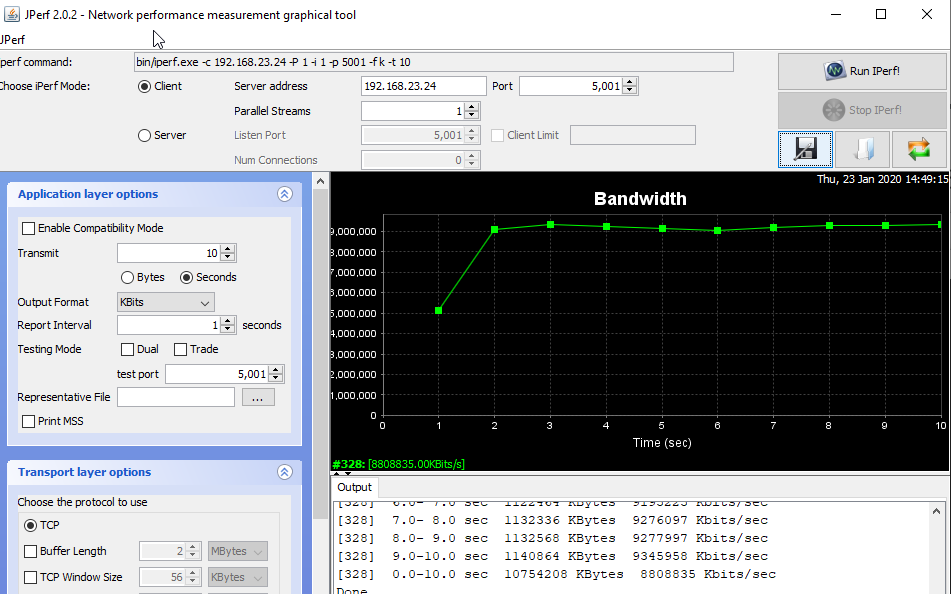

The problem is that I am not getting close to full speed when copying files over Samba. You can see some peaks of about 8-9 Gbps, but usually it sits around 3.5 Gbps, and I am not sure what causes this. At first I thought it might be a cable/socket/patch panel issue, but I ran iperf and it looks fine:

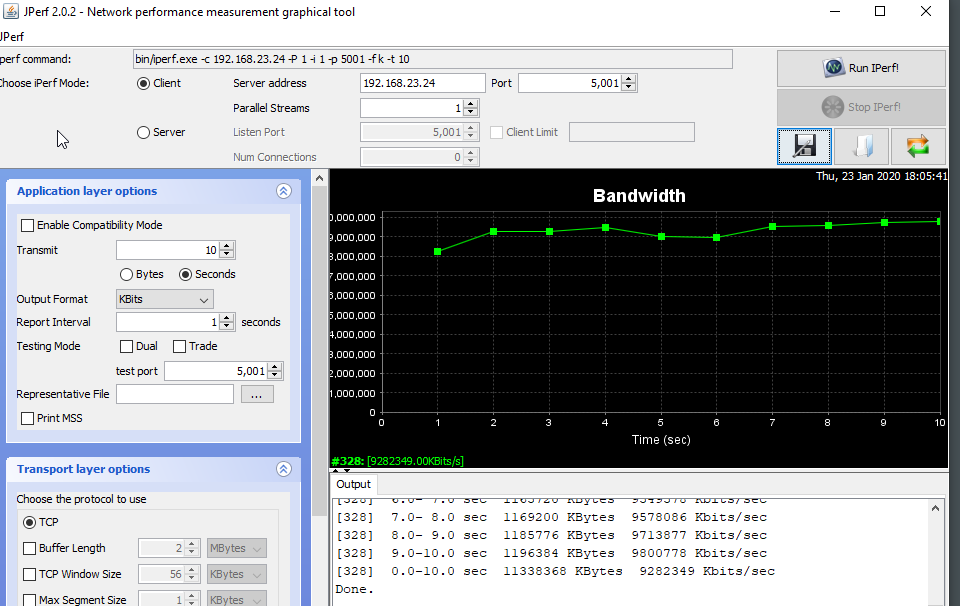
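For anyone comparing these figures with a file copy dialog that reports MB/s, here is a minimal conversion sketch. The function name `gbps_to_mb_per_s` is just an illustrative helper, and it assumes decimal units (1 MB = 10^6 bytes):

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a rate in Gbit/s to MB/s, assuming decimal units (1 MB = 10**6 bytes)."""
    bits_per_second = gbps * 1e9
    bytes_per_second = bits_per_second / 8
    return bytes_per_second / 1e6


if __name__ == "__main__":
    # Rates mentioned in this post: the usual copy speed, the peaks, and the link speed.
    for rate in (3.5, 8.0, 9.0, 10.0):
        print(f"{rate:4.1f} Gbps = {gbps_to_mb_per_s(rate):7.1f} MB/s")
```

So the usual 3.5 Gbps corresponds to roughly 437 MB/s, while a saturated 10 Gbps link would be 1250 MB/s before any protocol overhead.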

Then I tried jumbo frames, so I set the MTU to 9014 because that setting was available on the Aquantia. This only helped iperf a little.
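A rough back-of-the-envelope sketch of why jumbo frames can only give a small gain at the wire level. The assumptions here are mine: plain IPv4 + TCP headers without options, standard Ethernet framing overhead, and that the driver's "9014" counts the 14-byte Ethernet header, so the IP MTU is 9000. The helper name `payload_efficiency` is hypothetical:

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# 14 (header) + 4 (FCS) + 8 (preamble/SFD) + 12 (inter-frame gap)
ETH_OVERHEAD = 14 + 4 + 8 + 12

# Assumed L3/L4 headers inside the MTU: IPv4 (20) + TCP (20), no options.
IP_TCP_HEADERS = 20 + 20


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire bytes that carry TCP payload for full-sized frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        eff = payload_efficiency(mtu)
        print(f"MTU {mtu}: {eff:.2%} efficient, about {10 * eff:.2f} Gbps payload on a 10 Gbps link")
```

Under these assumptions the theoretical payload rate goes from about 9.49 Gbps to about 9.91 Gbps, so a small iperf improvement is roughly what the framing math predicts. Any larger gain from jumbo frames would come from lower per-packet CPU load, which this sketch does not model.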

So currently I think it might be a Samba problem, but I am open to any suggestions.

By the way, this is my test server. The "production" one is the same Gen8 but with 4x 3 TB in RAID, and the reason I want to go 10 Gb is that I plan to switch to 4x 1 TB SSDs. I don't need to, but I want to :)

Thanks for your time.
