14. Virtual Machines

A Virtual Machine (VM) is an environment on a host computer that can be used as if it were a separate physical computer. VMs can be used to run multiple operating systems simultaneously on a single computer. Operating systems running inside a VM see emulated virtual hardware rather than the actual hardware of the host computer. This provides more isolation than Jails, although there is additional overhead. A portion of system RAM is assigned to each VM, and each VM uses a zvol for storage. While a VM is running, these resources are not available to the host computer or other VMs.

FreeNAS® VMs use the bhyve(8) virtual machine software. This type of virtualization requires an Intel processor with Extended Page Tables (EPT) or an AMD processor with Rapid Virtualization Indexing (RVI) or Nested Page Tables (NPT).

To verify that an Intel processor has the required features, use Shell to run grep VT-x /var/run/dmesg.boot. If the EPT and UG features are shown, this processor can be used with bhyve.
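The same check can be scripted. This is a minimal POSIX sh sketch. It assumes the FreeBSD dmesg format, in which the VT-x line lists features separated by commas. The function name `intel_bhyve_ok` is hypothetical and not part of FreeNAS®.

```sh
#!/bin/sh
# Hypothetical helper (not part of FreeNAS): prints "yes" if the first
# VT-x line of a dmesg-style file lists both EPT and UG, otherwise "no".
# Assumed line format:  VT-x: PAT,HLT,MTF,PAUSE,EPT,UG,VPID
intel_bhyve_ok() {
    file="${1:-/var/run/dmesg.boot}"
    # Keep only the feature list that follows "VT-x:" on the first match.
    features=$(grep 'VT-x:' "$file" | head -n 1 | sed 's/.*VT-x:[[:space:]]*//')
    # Wrap the list in commas so each flag is matched as a whole token.
    case ",$features," in
        *,EPT,*)
            case ",$features," in
                *,UG,*) echo "yes"; return 0 ;;
            esac
            ;;
    esac
    echo "no"
    return 1
}
```

Called with no argument, `intel_bhyve_ok` reads /var/run/dmesg.boot and prints yes or no. Whole-token matching keeps a hypothetical flag such as UGX from being mistaken for UG.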

To verify that an AMD processor has the required features, use Shell to run grep POPCNT /var/run/dmesg.boot. If the output shows the POPCNT feature, this processor can be used with bhyve.
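A matching sketch for the POPCNT check is shown here. It assumes FreeBSD prints CPU features inside angle brackets, separated by commas. Like the manual grep, it cannot detect the missing NRIPS support described in the note below. `amd_bhyve_ok` is a hypothetical name.

```sh
#!/bin/sh
# Hypothetical helper (not part of FreeNAS): prints "yes" if a dmesg-style
# file lists POPCNT as a CPU feature, otherwise "no".
# Assumed format:  Features2=0x7ed8320b<SSE3,SSSE3,CX16,POPCNT,AESNI>
# Only POPCNT is checked; NRIPS support is NOT detected.
amd_bhyve_ok() {
    file="${1:-/var/run/dmesg.boot}"
    # Match POPCNT only as a whole token delimited by '<', ',' or '>'.
    if grep -q -E '[<,]POPCNT[,>]' "$file"; then
        echo "yes"
        return 0
    fi
    echo "no"
    return 1
}
```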

Note

By default, new VMs have the bhyve(8) -H option set. This causes the virtual CPU thread to yield when a HLT instruction is detected, and prevents idle VMs from consuming all of the host’s CPU.

Note

AMD K10 “Kuma” processors include POPCNT but do not support NRIPS, which is required for use with bhyve. Production of these processors ceased in 2012 or 2013.

14.1. Creating VMs

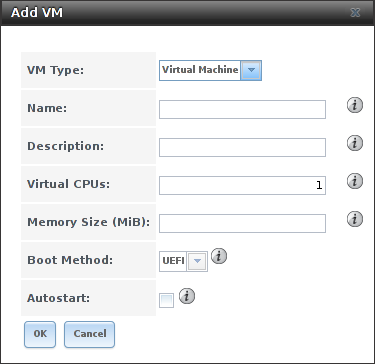

Select for the Add VM dialog shown in Figure 14.1.1:

Fig. 14.1.1 Add VM

VM configuration options are described in Table 14.1.1.

| Setting | Value | Description |
|---|---|---|
| VM Type | drop-down menu | Choose between a standard VM or a specialized Docker VM. |
| Name | string | Enter a name to identify the VM. |
| Description | string | Enter a short description of the VM or its purpose. |
| Virtual CPUs | integer | Select the number of virtual CPUs to allocate to the VM. The maximum is 16 unless the host CPU limits the maximum. The VM operating system might also have operational or licensing restrictions on the number of CPUs. |
| Memory Size (MiB) | integer | Allocate the amount of RAM in mebibytes for the VM. |
| Boot Method | drop-down menu | Select UEFI for newer operating systems, or UEFI-CSM (Compatibility Support Mode) for older operating systems that only understand BIOS booting. |
| Autostart | checkbox | Set to start the VM automatically when the system boots. |

14.2. Adding Devices to a VM

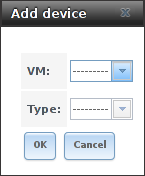

After creating the VM, click it to select it, then click Devices and Add Device to add virtual hardware to it:

Fig. 14.2.1 Add Devices to a VM

Select the name of the VM from the VM drop-down menu, then select the Type of device to add. These types are available:

- Network Interface

- Disk

- Raw File

- CD-ROM

- VNC

Note

A Docker VM does not support VNC connections.

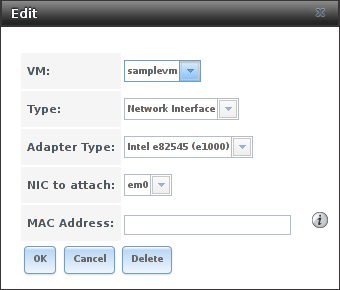

Figure 14.2.2 shows the fields that appear when Network Interface is the selected Type.

14.2.1. Network Interfaces

Fig. 14.2.2 VM Network Interface Device

The default Adapter Type emulates an Intel e82545 (e1000) Ethernet card for compatibility with most operating systems. VirtIO can provide better performance when the operating system installed in the VM supports VirtIO paravirtualized network drivers.

If the system has multiple physical network interface cards, use the Nic to attach drop-down menu to specify which physical interface to associate with the VM.

By default, the VM receives an auto-generated random MAC address. To override the default with a custom value, enter the desired address into the MAC Address field.
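The following sh sketch checks a custom value before it is entered. It tests for six colon-separated hexadecimal octets. It also rejects multicast addresses (lowest bit of the first octet set), because these cannot serve as an interface's own address. The expected input format and the `valid_mac` name are assumptions, not taken from the FreeNAS® interface.

```sh
#!/bin/sh
# Hypothetical helper: prints "yes" for a well-formed unicast MAC address
# written as six colon-separated hex octets (e.g. 00:11:22:33:44:55).
valid_mac() {
    mac="$1"
    # Check the overall shape: exactly six two-digit hex octets.
    if ! printf '%s\n' "$mac" | grep -q -E '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'; then
        echo "no"
        return 1
    fi
    # The lowest bit of the first octet marks a multicast address,
    # which a network interface cannot use as its own address.
    first=${mac%%:*}
    if [ $(( 0x$first & 1 )) -ne 0 ]; then
        echo "no"
        return 1
    fi
    echo "yes"
}
```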

14.2.2. Disk Devices

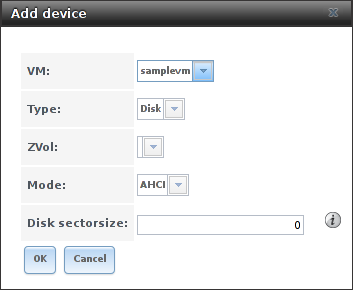

Zvols are typically used as virtual hard drives. After creating a zvol, associate it with the VM by selecting Add device.

Fig. 14.2.3 VM Disk Device

Choose the VM, select a Type of Disk, select the created zvol, then set the Mode:

- AHCI emulates an AHCI hard disk for best software compatibility. This is recommended for Windows VMs.

- VirtIO uses paravirtualized drivers and can provide better performance, but requires the operating system installed in the VM to support VirtIO disk devices.

If a specific sector size is required, enter the number of bytes into Disk sector size. The default of 0 uses an autotune script to determine the best sector size for the zvol.

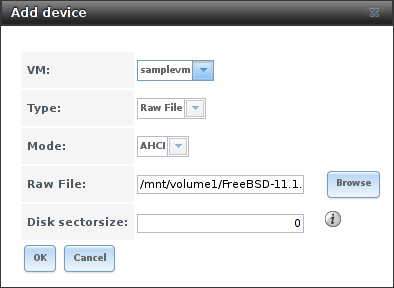

14.2.3. Raw Files

Raw Files are similar to Zvol disk devices, but the disk image comes from a file. These are typically used with existing read-only binary images of drives, like an installer disk image file meant to be copied onto a USB stick.

After obtaining and copying the image file to the FreeNAS® system, select Add device, choose the VM, select a Type of Raw File, browse to the image file, then set the Mode:

- AHCI emulates an AHCI hard disk for best software compatibility.

- VirtIO uses paravirtualized drivers and can provide better performance, but requires the operating system installed in the VM to support VirtIO disk devices.

A Docker VM also has a password field. This is the login password for the Docker VM.

If a specific sector size is required, enter the number of bytes into Disk sectorsize. The default of 0 uses an autotuner to find and set the best sector size for the file.

Fig. 14.2.4 VM Raw File Disk Device

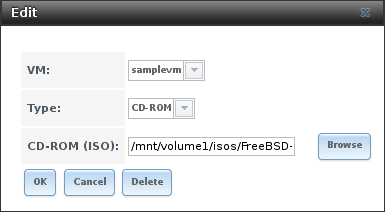

14.2.4. CD-ROM Devices

Adding a CD-ROM device makes it possible to boot the VM from a CD-ROM image, typically an installation CD. The image must be present on an accessible portion of the FreeNAS® storage. In this example, a FreeBSD installation image is shown:

Fig. 14.2.5 VM CD-ROM Device

Note

VMs from other virtual machine systems can be recreated for use in FreeNAS®. Back up the original VM, then create a new FreeNAS® VM with virtual hardware as close as possible to the original VM. Binary-copy the disk image data into the zvol created for the FreeNAS® VM with a tool that operates at the level of disk blocks, like dd(1). For some VM systems, it is best to back up data, install the operating system from scratch in a new FreeNAS® VM, and restore the data into the new VM.
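This is a minimal sketch of the binary copy with dd(1). It makes three assumptions. The source must already be a raw disk image; formats such as VMDK or qcow2 must be converted to raw first. The image size must be a whole number of sectors. The target zvol must be at least as large as the image. FreeBSD exposes zvols as /dev/zvol/<pool>/<zvol>. The helper name `copy_disk_image` and the paths in the usage line are hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: block-copy a raw disk image onto a target such as a
# zvol device node, then verify the copied bytes against the source.
copy_disk_image() {
    src="$1"
    dst="$2"
    # Copy in 1 MiB blocks. The size is given in bytes so it works with both
    # FreeBSD and GNU dd; conv=notrunc leaves an existing target's size alone.
    dd if="$src" of="$dst" bs=1048576 conv=notrunc || return 1
    # Compare only the first <image size> bytes, because the target
    # (for example a zvol) is usually larger than the image.
    size=$(wc -c < "$src" | tr -d ' ')
    head -c "$size" "$dst" | cmp -s - "$src"
}
```

For example: `copy_disk_image /mnt/pool1/old-vm.img /dev/zvol/pool1/vm1-disk0`. dd overwrites the target without asking, so double-check the destination path first.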

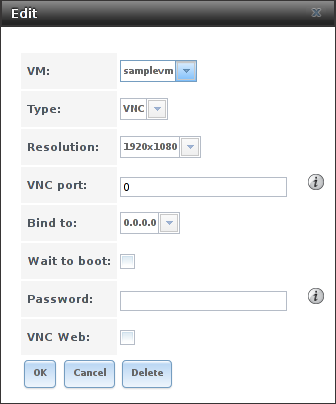

14.2.5. VNC Interface

VMs set to UEFI booting are also given a VNC (Virtual Network Computing) remote connection. A standard VNC client can connect to the VM to provide screen output and keyboard and mouse input. Each standard VM can have a single VNC device. A Docker VM does not support VNC devices.

Note

Using a non-US keyboard with VNC is not yet supported. As a workaround, select the US keymap on the system running the VNC client, then configure the operating system running in the VM to use a keymap that matches the physical keyboard. This will enable passthrough of all keys regardless of the keyboard layout.

Figure 14.2.6 shows the fields that appear when VNC is the selected Type.

Fig. 14.2.6 VM VNC Device

The Resolution drop-down menu can be used to modify the default screen resolution used by the VNC session.

Set the VNC port to 0 or leave it empty for FreeNAS® to assign a port when the VM is started, or set it to a fixed, preferred port number.

Select the IP address for VNC to listen on with the Bind to drop-down menu.

Set Wait to boot to keep the VM from booting until a VNC client connects. This is useful with installers that need keyboard input immediately after starting.

To automatically pass the VNC password, enter it into the Password field. Note that the password is limited to 8 characters.
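Standard VNC authentication uses at most 8 characters of a password, so a longer value may be rejected or silently shortened. The following sketch checks the length of a candidate password. `vnc_password_ok` is a hypothetical helper, and `${#pw}` counts characters in the current locale.

```sh
#!/bin/sh
# Hypothetical helper: prints "yes" if a VNC password is 1 to 8 characters
# long, matching the 8-character limit of standard VNC authentication.
vnc_password_ok() {
    pw="$1"
    len=${#pw}
    if [ "$len" -ge 1 ] && [ "$len" -le 8 ]; then
        echo "yes"
        return 0
    fi
    echo "no"
    return 1
}
```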

To use the VNC web interface, set VNC Web.

Tip

If a RealVNC 5.X Client shows the error RFB protocol error: invalid message type, disable the Adapt to network speed option and move the slider to Best quality. On later versions of RealVNC, select , click Expert, ProtocolVersion, then select 4.1 from the drop-down menu.

14.2.6. Virtual Serial Ports

VMs automatically include a virtual serial port.

- /dev/nmdm1B is assigned to the first VM

- /dev/nmdm2B is assigned to the second VM

And so on. These virtual serial ports allow connecting to the VM console from the Shell.

Tip

The nmdm device is dynamically created. The actual nmdm name can differ on each system.

To connect to the first VM:

cu -s 9600 -l /dev/nmdm1B

See cu(1) for more information on operating cu.
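Because the nmdm names can differ, it can help to list the console devices that exist before running cu. This sketch assumes the B side of each pair is the one to connect to, as in the examples above. The function name `list_vm_consoles` and its optional directory argument (present only to make testing easy) are hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: list the B side of each nmdm(4) null-modem pair.
# The directory argument defaults to /dev.
list_vm_consoles() {
    dir="${1:-/dev}"
    for dev in "$dir"/nmdm*B; do
        # If nothing matches, the pattern stays unexpanded; skip it.
        [ -e "$dev" ] || continue
        echo "$dev"
    done
}
```

For example, `cu -s 9600 -l "$(list_vm_consoles | head -n 1)"` connects to the first device found. The glob sorts names as text, so /dev/nmdm10B is listed before /dev/nmdm2B.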

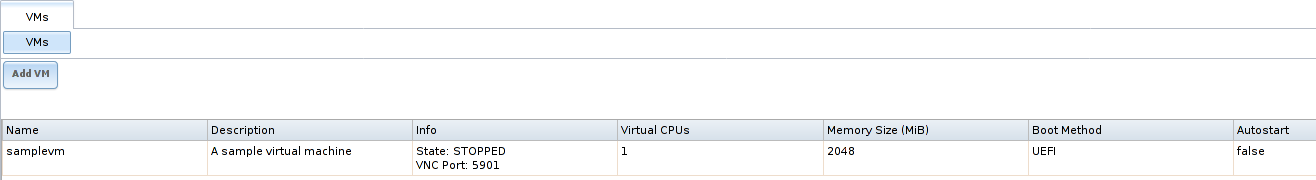

14.3. Running VMs

Select to see a list of configured VMs. Configuration and control buttons appear at the bottom of the screen when an individual VM is selected with a mouse click:

Fig. 14.3.1 VM Configuration and Control Buttons

The name, description, running state, VNC port (if present), and other configuration values are shown. Click on an individual VM for additional options.

Some standard buttons are shown for all VMs:

- Edit changes VM settings.

- Delete removes the VM.

- Devices is used to add and remove devices to this VM.

When a VM is not running, these buttons are available:

- Start starts the VM.

- Clone clones or copies the VM to a new VM. The new VM is given the same name as the original, with _cloneN appended.

When a VM is already running, these buttons are available:

- Stop shuts down the VM.

- Power off immediately halts the VM, equivalent to disconnecting the power on a physical computer.

- Restart restarts the VM.

- Vnc via Web starts a web VNC connection to the VM. The VM must have a VNC device and VNC Web enabled in that device.

14.4. Deleting VMs

A VM is deleted by clicking the VM, then Delete at the bottom of the screen. A dialog shows any related devices that will also be deleted and asks for confirmation.

Tip

Zvols used in disk devices and image files used in raw file devices are not removed when a VM is deleted. These resources can be removed manually after it is determined that the data in them has been backed up or is no longer needed.

14.5. Docker VM

Docker is open source software for automating application deployment inside containers. A container provides a complete filesystem, runtime, system tools, and system libraries, so applications always see the same environment.

Rancher is a web-based tool for managing Docker containers.

FreeNAS® runs the Rancher web interface within the Docker VM.

14.5.1. Docker VM Requirements

The system BIOS must have virtualization support enabled for a Docker VM to work properly. On Intel systems this is typically an option called VT-x. AMD systems generally have an SVM option.

20 GiB of storage space is required for the Docker VM. For setup, the SSH service must be enabled.

The Docker VM requires 2 GiB of RAM while running.

14.5.2. Create the Docker VM

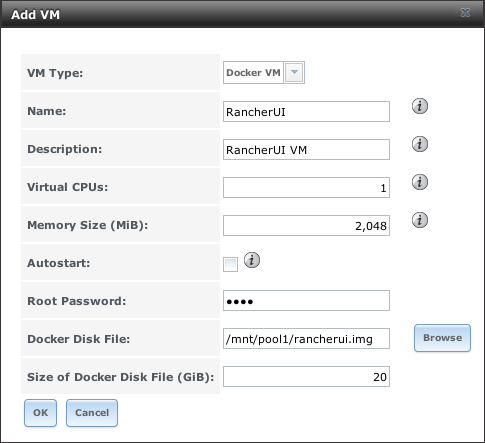

Figure 14.5.1 shows the window that appears after going to the page, clicking Add VM, and selecting Docker VM as the VM Type.

Fig. 14.5.1 Docker VM Configuration

| Setting | Value | Description |
|---|---|---|
| VM Type | drop-down menu | Choose between a standard VM or a specialized Docker VM. |
| Name | string | A descriptive name for the Docker VM. |
| Description | string | A description of this Docker VM. |
| Virtual CPUs | integer | Number of virtual CPUs to allocate to the Docker VM. The maximum is 16 unless the host CPU also limits the maximum. The VM operating system can also have operational or licensing restrictions on the number of CPUs. |
| Memory Size (MiB) | integer | Allocate this amount of RAM in MiB for the Docker VM. A minimum of 2048 MiB of RAM is required. |
| Autostart | checkbox | Set to start this Docker VM when the FreeNAS® system boots. |
| Root Password | string | Enter a password to use with the Docker VM root account. The password cannot contain a space. |
| Docker Disk File | string | Browse to the location to store a new raw file. Add /, a unique name, and .img to the end of the path to create a new raw file with that name. Example: /mnt/pool1/rancherui.img |
| Size of Docker Disk File (GiB) | integer | Allocate storage size in GiB for the new raw file. 20 is the minimum recommendation. |
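The limits in the table can be checked before filling in the form. This sketch assumes sizes are entered as whole numbers. `check_docker_vm` is a hypothetical helper, not part of FreeNAS®. It prints one line per problem and returns non-zero if any are found.

```sh
#!/bin/sh
# Hypothetical pre-flight check for the Docker VM settings:
#   check_docker_vm <memory MiB> <disk GiB> <root password> <disk file path>
check_docker_vm() {
    mem_mib="$1"
    disk_gib="$2"
    password="$3"
    disk_file="$4"
    ok=0
    # Both sizes must be plain whole numbers.
    for n in "$mem_mib" "$disk_gib"; do
        case "$n" in
            ""|*[!0-9]*) echo "sizes must be whole numbers"; return 1 ;;
        esac
    done
    # Memory Size: a minimum of 2048 MiB is required.
    if [ "$mem_mib" -lt 2048 ]; then
        echo "memory: need at least 2048 MiB"; ok=1
    fi
    # Size of Docker Disk File: 20 GiB is the minimum recommendation.
    if [ "$disk_gib" -lt 20 ]; then
        echo "disk: 20 GiB or more is recommended"; ok=1
    fi
    # Root Password: spaces are not allowed.
    case "$password" in
        *" "*) echo "password: must not contain a space"; ok=1 ;;
    esac
    # Docker Disk File: a full path ending in .img.
    case "$disk_file" in
        /*.img) ;;
        *) echo "disk file: use a full path ending in .img"; ok=1 ;;
    esac
    return "$ok"
}
```

For example, `check_docker_vm 2048 20 s3cret /mnt/pool1/rancherui.img` prints nothing and returns 0.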

Recommendations for the Docker VM:

- Enter Rancher UI VM for the Description.

- Leave the number of Virtual CPUs at 1.

- Enter 2048 for the Memory Size.

- Leave 20 as the Size of Docker Disk File (GiB).

Click OK to create the virtual machine.

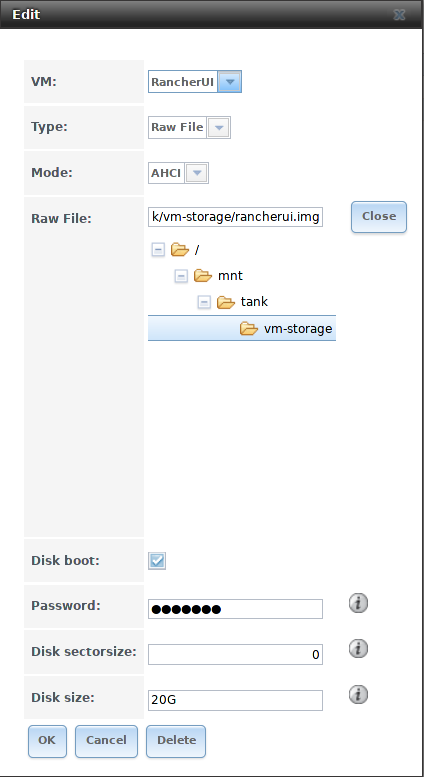

To make any changes to the raw file after creating the Docker VM, click on the Devices button for the VM to show the devices attached to that VM. Click on the RAW device to select it, then click Edit. Figure 14.5.2 shows the options for editing the Docker VM raw file.

Fig. 14.5.2 Changing the Docker VM Password

The raw file options section describes the options in this window.

14.5.3. Start the Docker VM

Click VMs, then click on the Docker VM line to select it. Click the Start button and Yes to start the VM.

14.5.4. SSH into the Docker VM

It is possible to SSH into a running Docker VM. Go to the page and find the Docker VM. The Info column shows the Docker VM Com Port. In this example, /dev/nmdm12B is used.

Use an SSH client to connect to the FreeNAS® server. Remember this also requires the SSH service to be running. Depending on the FreeNAS® system configuration, it might also require changes to the SSH service settings, like setting Login as Root with Password.

At the FreeNAS® console prompt, connect to the running Docker VM with cu, replacing /dev/nmdm12B with the value from the Docker VM Com Port:

cu -l /dev/nmdm12B -s 9600

If the terminal does not show a rancher login: prompt, press Enter. The Docker VM can take several minutes to start and display the login prompt.

14.5.5. Installing and Configuring the Rancher Server

The Rancher Server is installed and configured in the Docker VM from the command line. Open the Shell and enter the command cu -l /dev/nmdm12B -s 9600, where /dev/nmdm12B is the Com Port value in the Info column for the Docker VM.

If the terminal does not show a rancher login: prompt after a few moments, press Enter.

Enter rancher as the username, press Enter, then type the password that was entered when the raw file was created above and press Enter again. After logging in, a [rancher@rancher ~]$ prompt is displayed.

Ensure Rancher has functional networking and can ping an outside website.

```
[rancher@ClientHost ~]$ ping -c 3 google.com
PING google.com (172.217.0.78): 56 data bytes
64 bytes from 172.217.0.78: seq=0 ttl=54 time=18.613 ms
64 bytes from 172.217.0.78: seq=1 ttl=54 time=18.719 ms
64 bytes from 172.217.0.78: seq=2 ttl=54 time=18.788 ms

--- google.com ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 18.613/18.706/18.788 ms
```

If ping returns an error, adjust the VM Network Interface and reboot the Docker VM.

Download and install the Rancher server with sudo docker run -d --restart=unless-stopped -p 8080:8080 rancher/server.

If a Cannot connect to the Docker daemon error is shown, enter sudo dockerd and try sudo docker run -d --restart=unless-stopped -p 8080:8080 rancher/server again. Installation time varies with processor and network connection speed. The [rancher@ClientHost ~]$ prompt is shown when the installation is finished.

Enter ifconfig eth0 | grep 'inet addr' to view the Rancher IP address. Enter the IP address followed by :8080 into a web browser to connect to the Rancher web interface. For example, if the IP address is 10.231.3.208, enter 10.231.3.208:8080 in the browser.
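The two steps above can be combined into one sh function that prints the address to enter in the browser. It assumes the Docker VM's ifconfig prints IPv4 addresses as `inet addr:<address>`, which is the format the grep above relies on. `rancher_url` is a hypothetical name.

```sh
#!/bin/sh
# Hypothetical helper: read ifconfig output on stdin and print the first
# "inet addr:" IPv4 address followed by :8080.
rancher_url() {
    ip=$(sed -n 's/.*inet addr:\([0-9.]*\).*/\1/p' | head -n 1)
    if [ -z "$ip" ]; then
        echo "no IPv4 address found" >&2
        return 1
    fi
    echo "$ip:8080"
}
```

Inside the Docker VM, run it as `ifconfig eth0 | rancher_url`.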

The Rancher web interface takes a few minutes to start. The web browser might show a connection error while the web interface starts. If a connection has timed out error is shown, wait one minute and refresh the page.
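The waiting can also be scripted rather than done by refreshing the browser. This is a sketch: `retry` is a hypothetical helper. The usage line assumes curl is installed on the machine running it and reuses the example address from above.

```sh
#!/bin/sh
# Hypothetical helper: run a command up to N times, sleeping S seconds
# between failed attempts, and stop at the first success.
#   retry <attempts> <seconds> <command> [args...]
retry() {
    attempts="$1"
    delay="$2"
    shift 2
    i=1
    while :; do
        if "$@"; then
            return 0
        fi
        if [ "$i" -ge "$attempts" ]; then
            return 1
        fi
        i=$((i + 1))
        sleep "$delay"
    done
}
```

For example, `retry 10 60 curl -s -o /dev/null http://10.231.3.208:8080/ && echo "Rancher is up"` checks once a minute for up to ten minutes. curl returns non-zero while the connection is refused or times out.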

When the Rancher web interface loads, click Add a host from the banner across the top of the screen. Verify that This site’s address is chosen and click Save.

Follow the steps shown in the Rancher web interface and copy the full sudo docker run command from the text box. Paste it in the Docker VM shell. The Docker VM will finish configuring Rancher. A [rancher@ClientHost ~]$ prompt is shown when the configuration is complete.

Verify that the configuration is complete. Go to the Rancher web interface and click . When a host with the Rancher IP address is shown, configuration is complete and Rancher is ready to use.

For more information on Rancher, see the Rancher documentation.