dpskipper

I'm seeing some really weird behaviour from my pool of 6 SSDs in RAID 0. I can't get write speeds above ~560 MB/s or read speeds above 600 MB/s over SMB. I've done a ton of reading and testing, but I can't figure out what's going wrong.

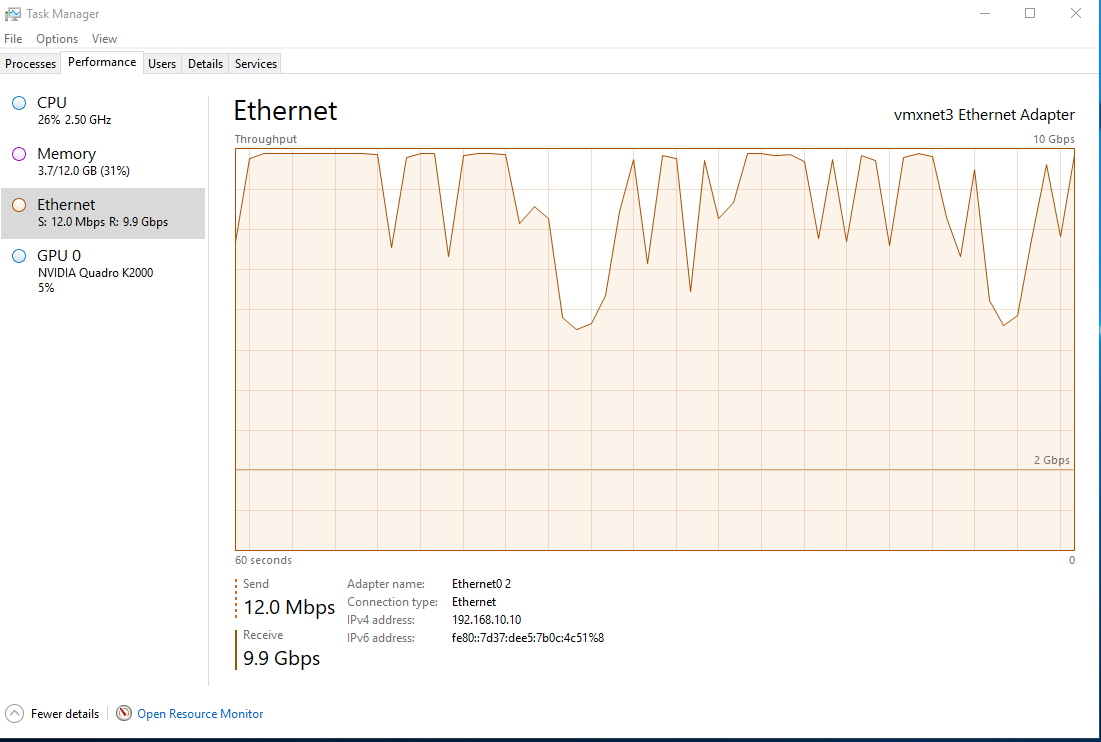

I posted a similar story in the networking section, but after running some extensive iperf tests I concluded that I can get about 8 Gbps on average through my switch from my PC to a VM inside the R720. Since the network can push that much bandwidth, I think the problem lies elsewhere.
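To put the iperf number next to the SMB numbers, here is a quick unit conversion. It's only a sketch: it uses decimal megabytes, ignores protocol overhead, and the function name is made up for illustration.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert gigabits per second to decimal megabytes per second."""
    return gbps * 1e9 / 8 / 1e6

iperf_rate = gbps_to_mb_per_s(8)    # rate measured with iperf, per the post
line_rate = gbps_to_mb_per_s(10)    # nominal 10GbE, ignoring protocol overhead
smb_write = 560                     # MB/s observed over SMB, per the post

print(f"iperf: {iperf_rate:.0f} MB/s, line rate: {line_rate:.0f} MB/s")
print(f"SMB write reaches {smb_write / iperf_rate:.0%} of the iperf figure")
```

So the SMB write speed is a bit over half of what iperf shows the link can carry.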

Full specs:

Host: R720

FreeNAS VM: 32 GB RAM, 8 cores, 2 sockets

Disk shelf: MD1220

HBA: 9207-8e IT mode

Disks: 6x Seagate 1200 SAS3

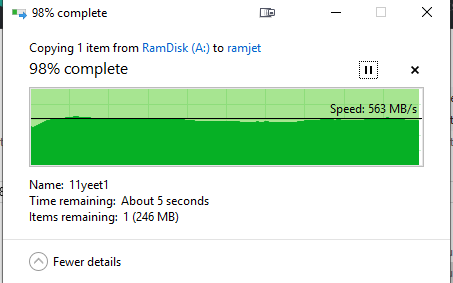

The HBA is passed through to the FreeNAS VM, and I created a simple striped pool with all 6 drives. My Windows PC is connected to the R720 via SFP+ fiber and a 10-gig switch, and I never get faster than 550 MB/s. To test, I created a large file filled with random noise, copied it to a ramdisk, and then copied it from my local ramdisk to the FreeNAS pool.
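For anyone who wants to reproduce the test file, here is a minimal sketch of generating one. Random data matters because compressible data (all zeros, for example) can make a pool look faster than it is when compression is enabled on the dataset. The function name, file name, and sizes are placeholders, not what I actually used.

```python
import os
import tempfile

def write_random_file(path: str, size_bytes: int, chunk_size: int = 1 << 20) -> int:
    """Write size_bytes of incompressible random data to path; return bytes written."""
    written = 0
    with open(path, "wb") as f:
        while written < size_bytes:
            n = min(chunk_size, size_bytes - written)
            f.write(os.urandom(n))  # OS-provided random bytes, chunked to limit memory use
            written += n
    return written

# Small demo size so the sketch runs quickly; a real test file would be far larger.
with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "random.bin")  # hypothetical file name
    total = write_random_file(target, 8 * 1024 * 1024)
    print(total, os.path.getsize(target))
```

For a real run, point `path` at the ramdisk and pick a size well above any cache on either end.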

Turning on jumbo frames did improve my speeds, but not enough that I'm happy. I'd like to saturate my 10-gig network, and these drives are more than capable.

I also ran a fio benchmark from within the FreeNAS terminal. Here are the results:

Code:

    seqread: (groupid=0, jobs=8): err= 0: pid=16497: Mon Jan 20 23:37:44 2020
      read: IOPS=533k, BW=4160MiB/s (4363MB/s)(8192MiB/1969msec)
        clat (usec): min=5, max=11833, avg=12.53, stdev=57.32
         lat (usec): min=5, max=11833, avg=12.64, stdev=57.34
        clat percentiles (usec):
         | 1.00th=[ 6], 5.00th=[ 6], 10.00th=[ 7], 20.00th=[ 7],
         | 30.00th=[ 7], 40.00th=[ 7], 50.00th=[ 7], 60.00th=[ 8],
         | 70.00th=[ 9], 80.00th=[ 14], 90.00th=[ 23], 95.00th=[ 46],
         | 99.00th=[ 83], 99.50th=[ 89], 99.90th=[ 126], 99.95th=[ 147],
         | 99.99th=[ 1500]
       bw ( KiB/s): min=255765, max=752015, per=12.88%, avg=548751.71, stdev=186495.09, samples=24
       iops : min=31970, max=94001, avg=68593.50, stdev=23311.93, samples=24
      lat (usec) : 10=71.63%, 20=17.93%, 50=7.63%, 100=2.45%, 250=0.34%
      lat (usec) : 500=0.01%, 750=0.01%, 1000=0.01%
      lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%
      cpu : usr=10.83%, sys=85.20%, ctx=940, majf=0, minf=32
      IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
         submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         issued rwts: total=1048576,0,0,0 short=0,0,0,0 dropped=0,0,0,0
         latency : target=0, window=0, percentile=100.00%, depth=1

    Run status group 0 (all jobs):
       READ: bw=4160MiB/s (4363MB/s), 4160MiB/s-4160MiB/s (4363MB/s-4363MB/s), io=8192MiB (8590MB), run=1969-1969msec

Now, I've read that SMB is single-threaded and so on. So I created a Linux VM, mounted the pool via NFS, and ran a simple dd test: I got 368 MB/s write speed writing a 20 GB file to the mounted share. I'm not really a Linux user or an NFS expert, but at the moment I'm not seeing better speeds from NFS.
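Going back to the fio output, the headline numbers can be cross-checked with a little arithmetic. This is only a sketch using values copied from the output above. One caveat, and this is an assumption on my part rather than something I measured: the run read only 8 GiB in total, which is less than the VM's 32 GB of RAM, so the reads may have been served from the ZFS cache (ARC) instead of the SSDs.

```python
MIB = 1024 * 1024

total_ios = 1_048_576     # "issued rwts: total=1048576"
io_bytes = 8192 * MIB     # "io=8192MiB"
runtime_s = 1.969         # "run=1969-1969msec"
jobs = 8                  # "jobs=8"

block_size = io_bytes // total_ios          # bytes per I/O
bandwidth_mib = io_bytes / MIB / runtime_s  # MiB/s across all jobs
iops = total_ios / runtime_s                # I/Os per second across all jobs

print(f"block size: {block_size // 1024} KiB")
print(f"bandwidth: {bandwidth_mib:.0f} MiB/s")
print(f"IOPS: {iops / 1000:.0f}k")
print(f"data per job: {io_bytes // jobs // MIB} MiB")
```

The results line up with what fio reported: 8 KiB blocks, about 4160 MiB/s, and about 533k IOPS, with each of the 8 jobs reading 1 GiB.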

Really appreciate any input from storage gurus.