AbsolutIggy

Dabbler

- Joined

- Feb 29, 2020

- Messages

- 31

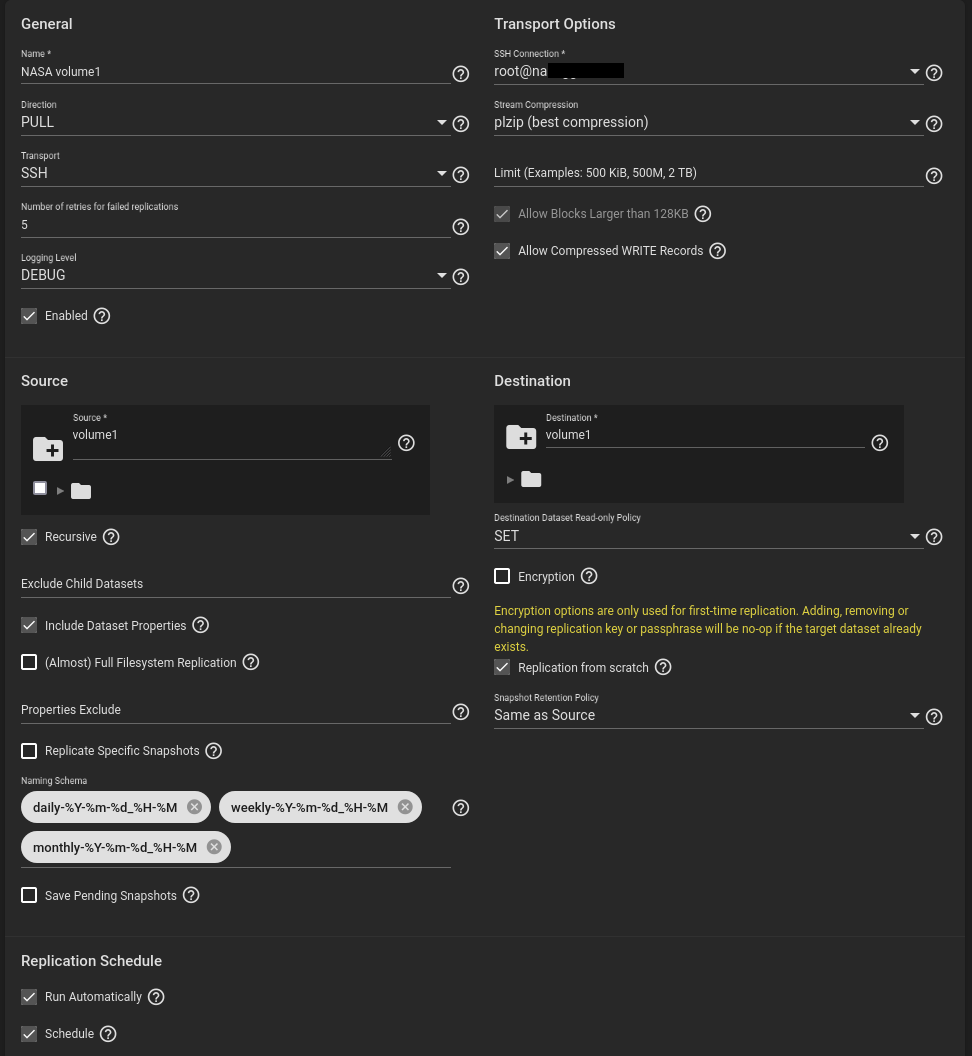

I have two TrueNAS servers in different locations, nasA and nasB. nasB pulls snapshots from nasA every day, using a replication task.

This replication worked well for a long time. I recently had to reinstall the operating system due to a failure of the boot drive, and the problems started after that. Both systems are on TrueNAS-13.0-U3.1.

The replication is set to run in the evening. Often, but not every time, I get an email after about half an hour with the following alert:

Code:

* Replication "NASA volume1" failed: ssh_dispatch_run_fatal: Connection to 10.xx.x.Y port 22: message authentication code incorrect

(stdin): Unexpected EOF in worker 3

cannot receive incremental stream: checksum mismatch or incomplete stream.

Partially received snapshot is saved.

A resuming stream can be generated on the sending system by running:

zfs send -t 1-14026c5196-118-789c636064000310a50...

The last line continues with a string of alphanumeric characters, ending in '..'. The console log around the time the mail is sent shows nothing. Settings for the replication task are below.

If anybody has any ideas about this, I would be glad to hear them. Where can I find more information? Is there another log I should check?
