So I waited to see what the general consensus was, and felt it was fine to upgrade my Bluefin install to Cobia 23.10.0.1. I'm beginning to regret it, though, because every so often Cobia crashes. When it does, none of my mounts (NFS or SMB) are accessible, I can't SSH into the appliance, and the web UI loads the login page but goes no further.

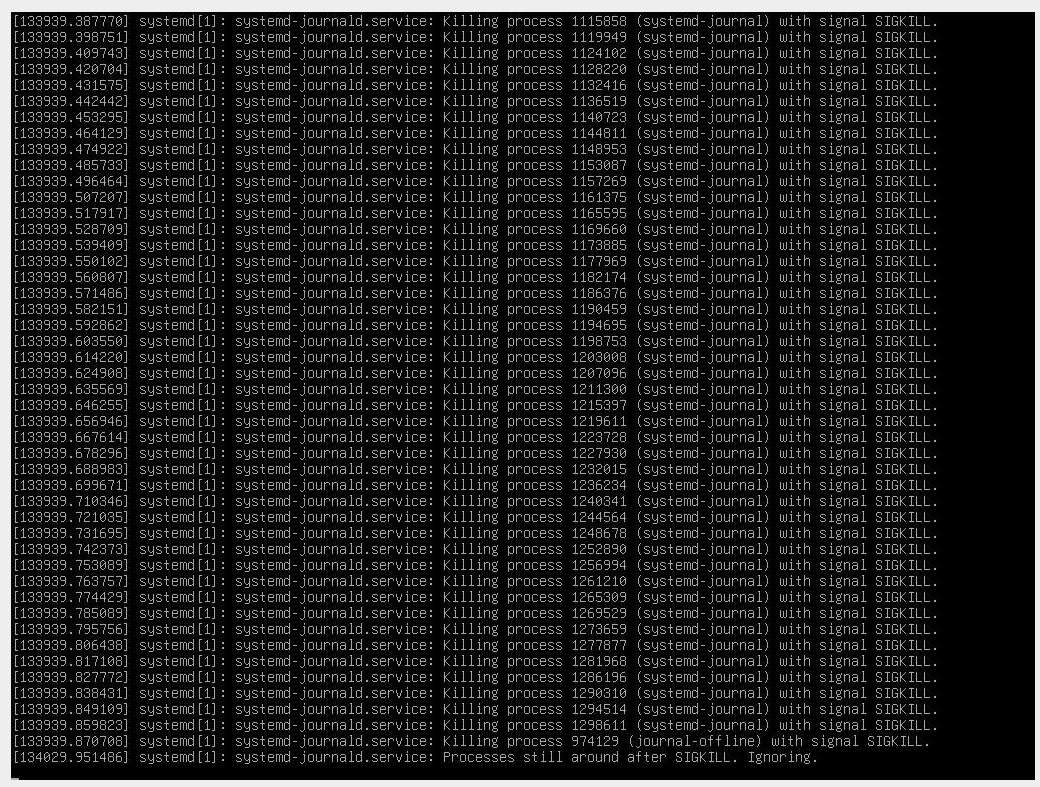

When I look at the IPMI, I see the following:

I have syslog streaming to Graylog, but since Graylog's storage is an NFS share on the NAS, I lose logging as soon as the NAS fails. That leaves a gap between the failure and the point where storage is restored after a lengthy shutdown and reboot. I'm going to move that storage to an alternative location to see what, if anything, the syslog reveals beyond that point.

The storage itself survives, which is comforting, but the system just grinds to a halt. It's an Atom-based Supermicro system using a pair of SSDs in hardware RAID1 as the system drives, so they're not part of the ZFS pool at all, in case that detail is relevant.

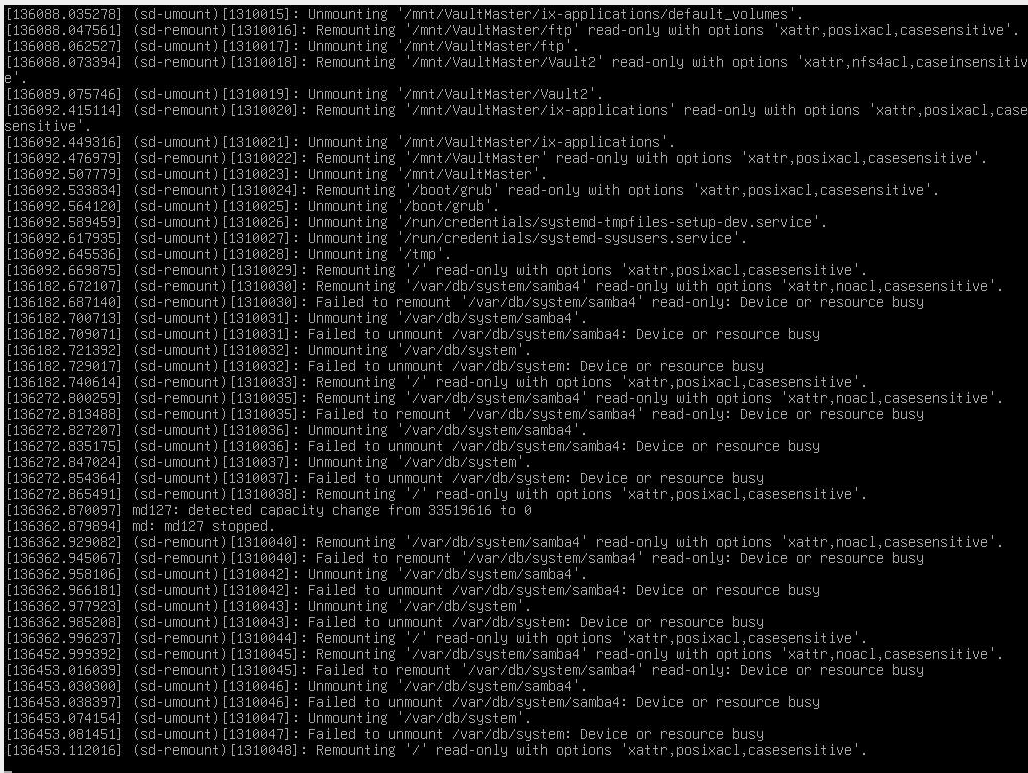

Shutdown takes a long time because everything being stopped times out, including the unmounts:

Is anyone else seeing similar issues, and if so, were you able to resolve them?