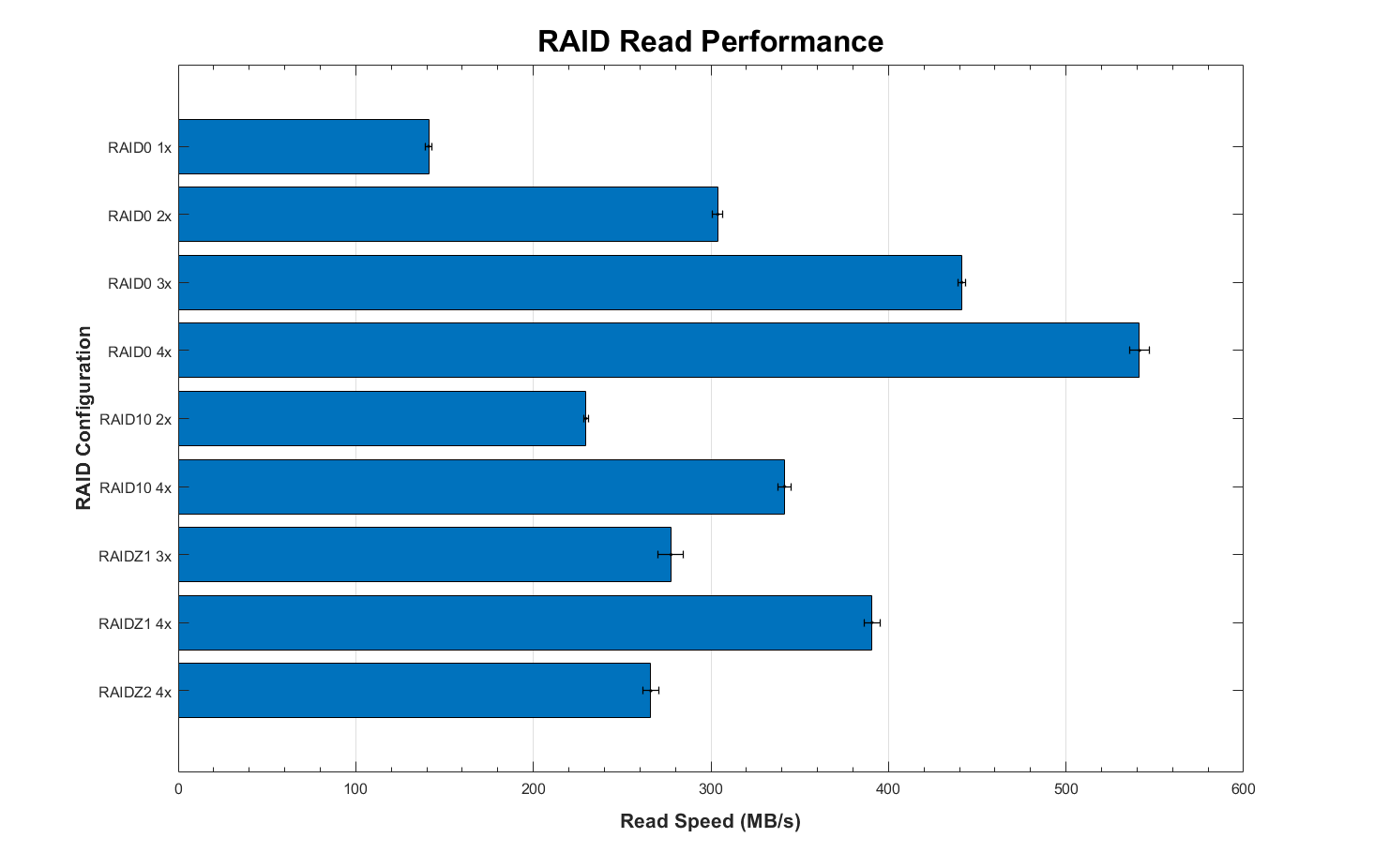

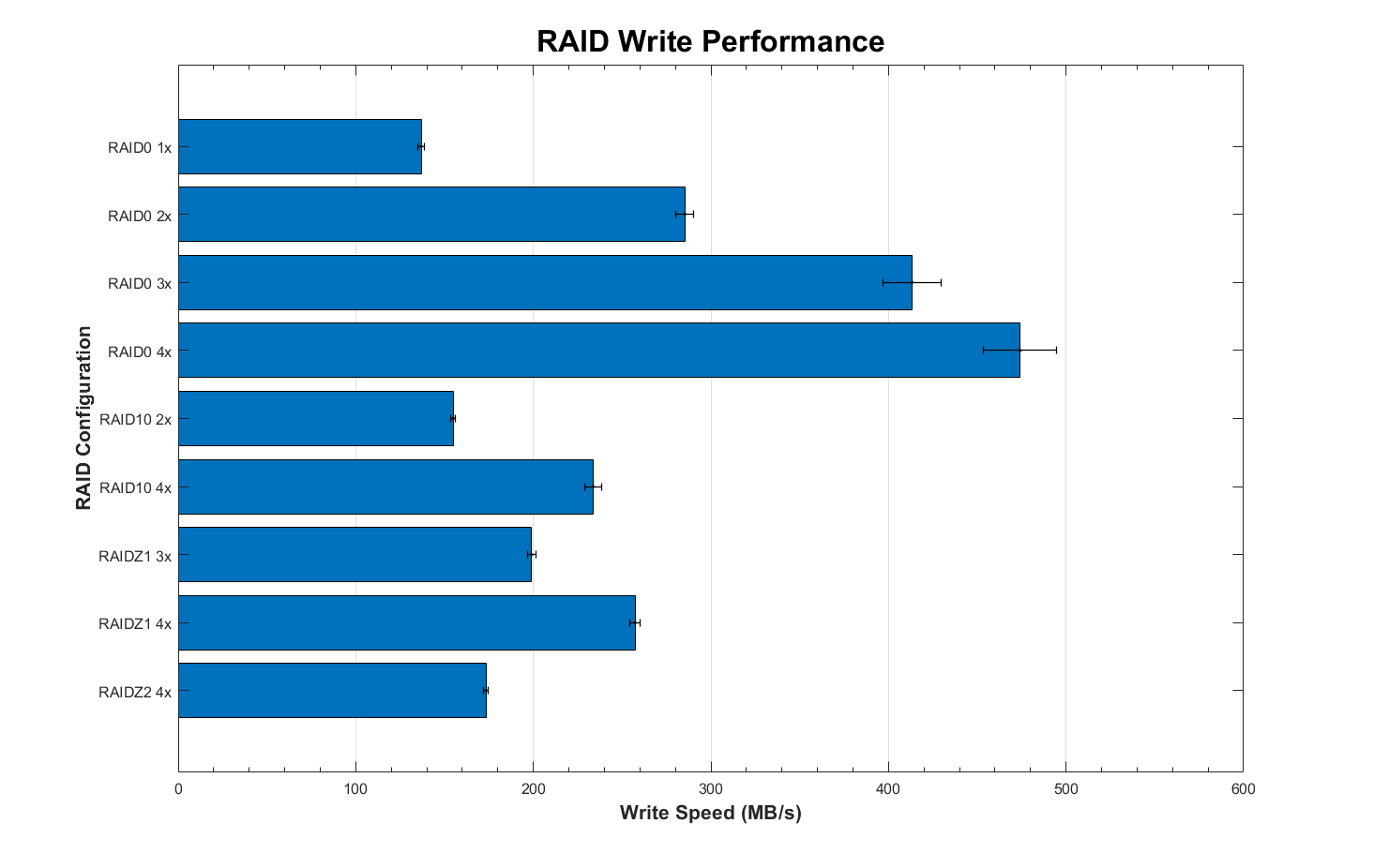

I've been testing different RAID configurations to optimize performance. For each configuration I measured read and write performance with 1 to 4 drives (3 TB each) using the dd command, running the benchmark 5 times per configuration for both read and write. In my testing, I noticed that the RAID10 configuration with 4 drives (2x2x3TB mirror) suffered a read performance penalty that I didn't expect: it does not scale the way I thought it would.

In the plots, RAID0 refers to a stripe setup, RAID10 refers to a mirror setup, and Nx is the number of drives in the configuration. Error bars denote 1 standard deviation.
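For reference, here is a minimal sketch of how the plotted value and its error bar could be derived from the 5 runs of one configuration. The function name `summarize_runs` is hypothetical, and the use of the sample standard deviation (n-1 denominator) is an assumption.

```python
import statistics


def summarize_runs(throughputs_mb_s):
    """Return (mean, standard deviation) for repeated runs of one configuration.

    Assumption: the error bar is the sample standard deviation (n-1 denominator).
    """
    if len(throughputs_mb_s) < 2:
        raise ValueError("need at least two runs to compute a standard deviation")
    mean = statistics.mean(throughputs_mb_s)
    std = statistics.stdev(throughputs_mb_s)
    return mean, std
```

Each configuration would contribute one such (mean, std) pair per operation, read or write.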

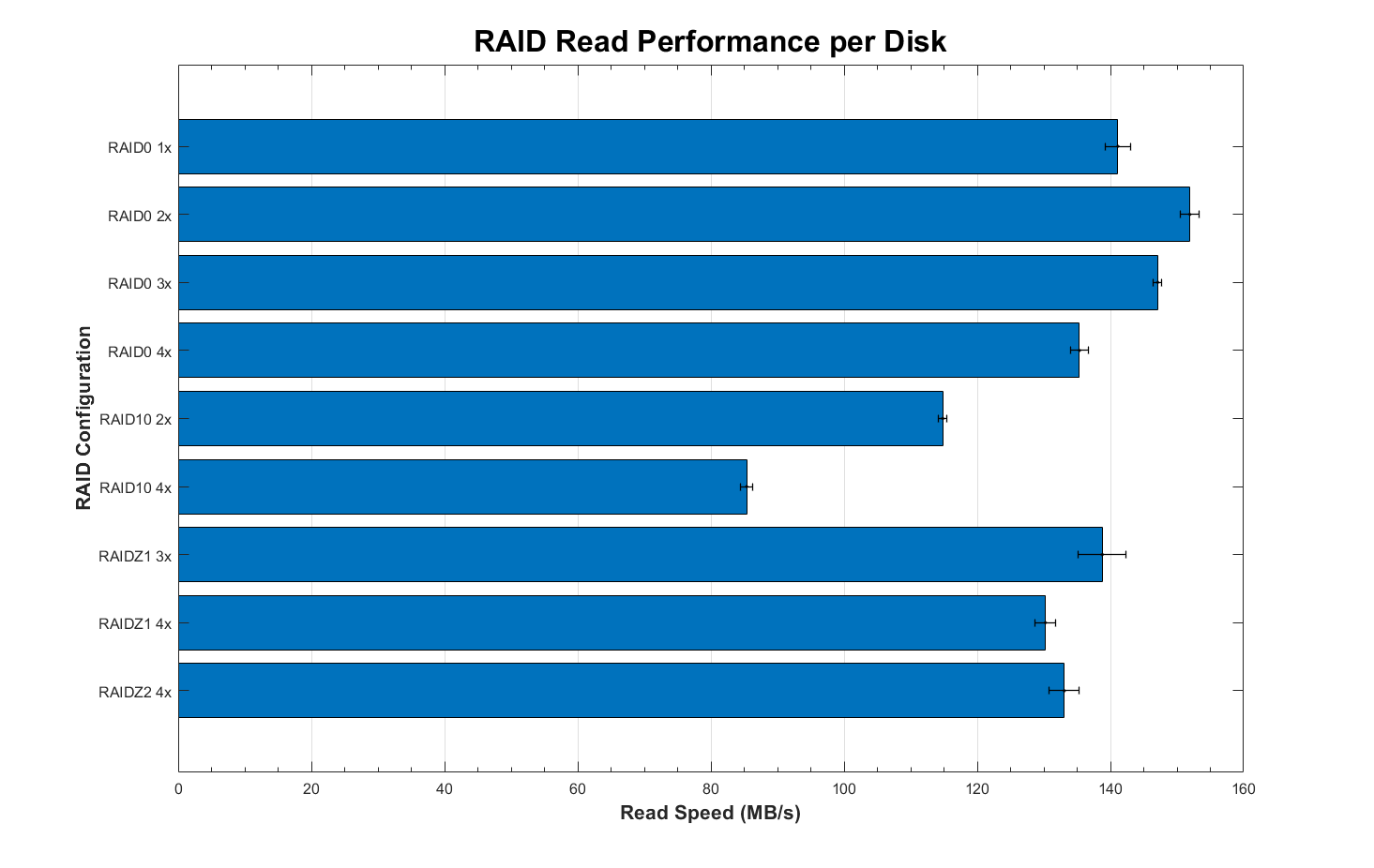

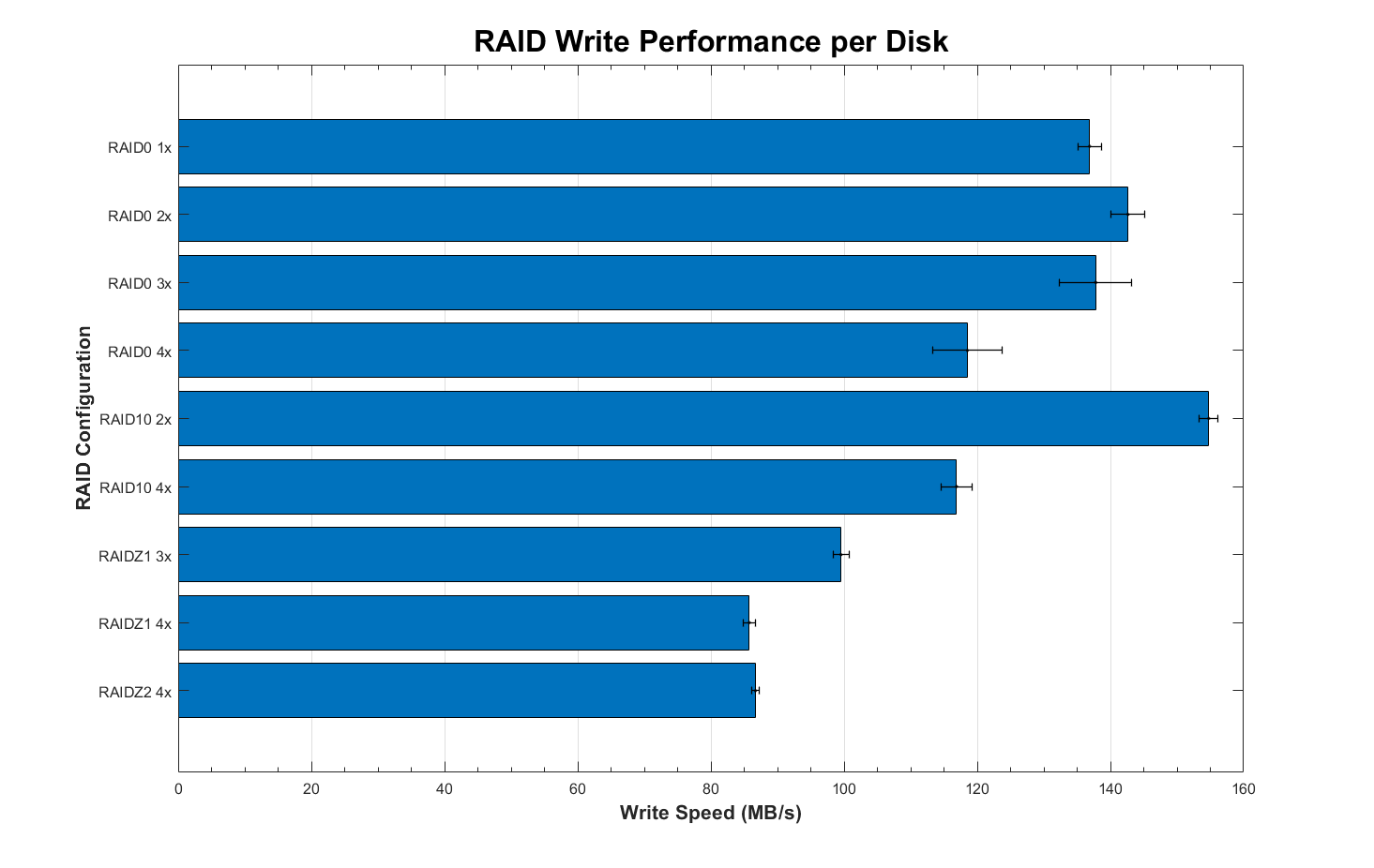

The following plots are normalized by how I would expect performance to scale with the number of drives (N), so they show effective per-disk performance.

Basically,

RAID0 scales linearly with the number of drives for both read and write.

RAID10 read scales linearly with the number of drives, and write scales as N/2.

RAIDZ read scales as (N-1), and write scales as (N-1)/(some parity overhead factor).

RAIDZ2 read scales as (N-2), and write scales as (N-2)/(some parity overhead factor).
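The expectations above can be written down as a small sketch. The names `expected_scale` and `per_disk_normalized` are hypothetical, and since the parity overhead factor is unspecified it is left as a parameter, with a default of 1.0 as a placeholder only.

```python
def expected_scale(layout, n_drives, op, parity_overhead=1.0):
    """Expected throughput as a multiple of a single drive's throughput.

    layout: "raid0", "raid10", "raidz" or "raidz2"
    op: "read" or "write"
    parity_overhead: unspecified factor for RAIDZ writes (assumed, default 1.0)
    """
    if op not in ("read", "write"):
        raise ValueError(f"unknown operation: {op}")
    if layout == "raid0":
        return float(n_drives)
    if layout == "raid10":
        # Reads can be served by every drive; writes go to both halves of a mirror.
        return float(n_drives) if op == "read" else n_drives / 2
    if layout in ("raidz", "raidz2"):
        parity_drives = 1 if layout == "raidz" else 2
        data_drives = n_drives - parity_drives
        if data_drives < 1:
            raise ValueError("not enough drives for this layout")
        if op == "read":
            return float(data_drives)
        return data_drives / parity_overhead
    raise ValueError(f"unknown layout: {layout}")


def per_disk_normalized(measured_mb_s, layout, n_drives, op, parity_overhead=1.0):
    """Divide a measured throughput by the expected scale factor."""
    return measured_mb_s / expected_scale(layout, n_drives, op, parity_overhead)
```

If a configuration scales as expected, its normalized value should land close to the throughput of a single drive.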

As you can see, write performance per disk is more or less what I would expect: about the performance of a single drive.

Read performance is mostly what I would expect as well, except for the RAID10 configuration, which has much lower per-disk performance than a single drive.

Did I do something wrong, or is there some overhead involved with mirroring that causes a loss of nearly 30% in read performance?
