Jeremy POP-IT

Cadet

- Joined: Jun 16, 2016
- Messages: 4

Hello,

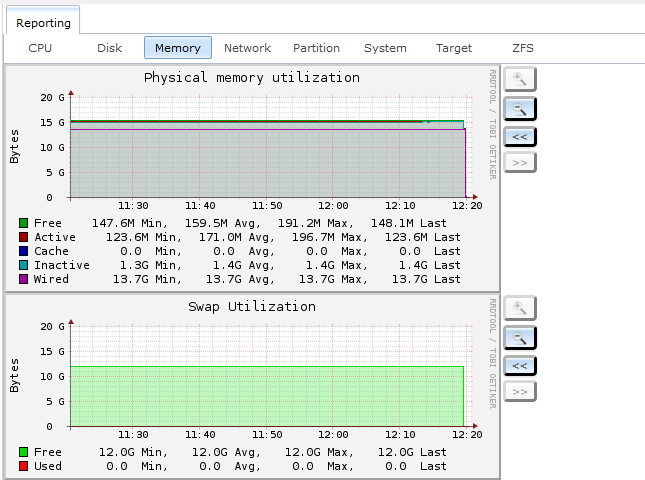

I would like to use FreeNAS as an iSCSI target for storing Veeam backups, but memory usage stays constantly full after three backups. FreeNAS ran out of memory and then crashed, and now my pool is in degraded mode :( The repair fills the memory as well...

I have not installed the driver for the RAID card. Could this be the problem?

To install the driver, do I just copy mrsas.ko into /boot/kernel and reboot FreeNAS?

- FreeNAS 9.10-STABLE
- Hardware: Supermicro
- RAM: 16 GB
- RAID card: MegaRAID SAS 9341-8i
- Disks: 6 × 3 TB in JBOD
- Pool: RAID-Z with 5 disks + 1 spare
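For reference, here is a rough sketch of the usable space this layout should give. It assumes single-parity RAID-Z (RAID-Z1), that the spare holds no data, and it ignores ZFS metadata, padding and reserved space, so the real figure will be somewhat lower. The function name `raidz_usable_bytes` is just a hypothetical helper for illustration.

```python
# Rough usable-capacity estimate for the pool listed above.
# Assumptions (not stated in the post): single-parity RAID-Z (RAID-Z1),
# the spare disk stores no data, and ZFS metadata/padding/slop are ignored.

TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # ZFS/FreeNAS report sizes in binary units (TiB)


def raidz_usable_bytes(disks_in_vdev: int, disk_bytes: int, parity: int = 1) -> int:
    """Approximate usable bytes of one RAID-Z vdev: (disks - parity) * disk size."""
    if disks_in_vdev <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than its parity level")
    return (disks_in_vdev - parity) * disk_bytes


# 5 disks in the vdev; the 6th disk is the hot spare and is not counted.
usable = raidz_usable_bytes(5, 3 * TB)
print(f"{usable / TB:.0f} TB = {usable / TIB:.2f} TiB")  # prints "12 TB = 10.91 TiB"
```

So roughly 12 TB (about 10.9 TiB) before ZFS overhead.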

The choice of machine was not mine, sorry.

Thanks for your replies!