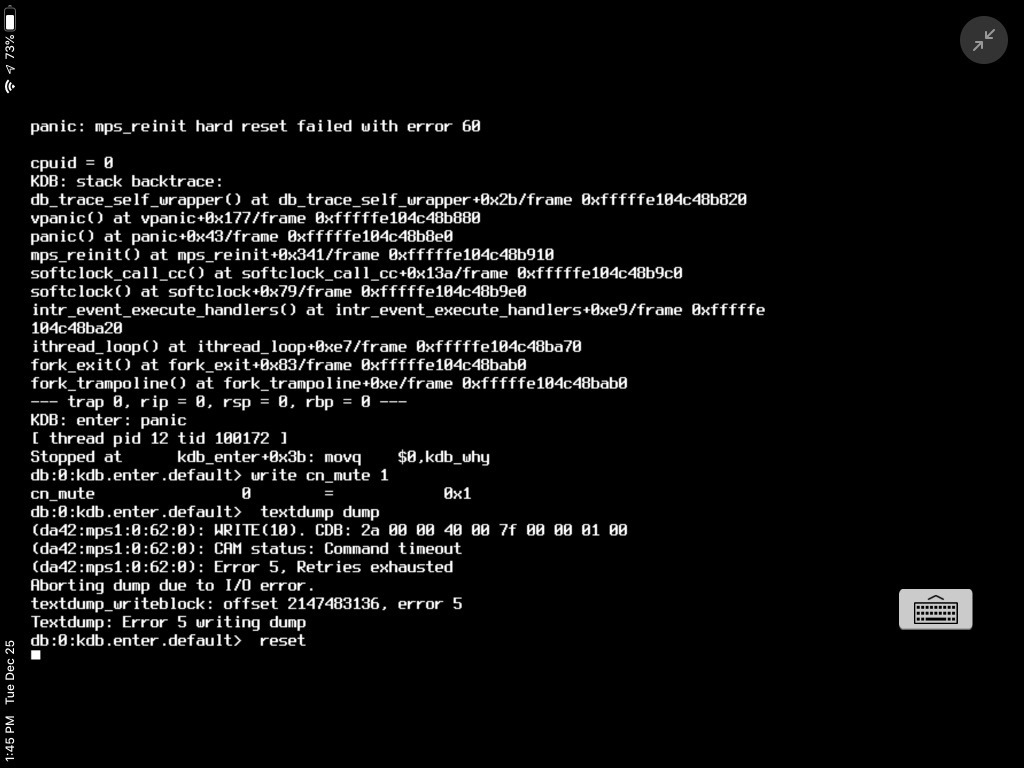

I am in the process of replacing the drives in one vdev of a 4 x raidz-2 pool. The first two drives went fine. On the third drive, roughly 20 minutes into the resilver, the system panics, reboots, and restarts the resilver from the beginning. I have tried switching the destination drive to a fresh HD and removing the original disk, but I have not been able to get the resilver to complete, and the pool is currently degraded.

I've never seen a panic on FreeBSD before and I don't know where to start to diagnose the problem. Any advice?

System:

FreeNAS 11.2-RELEASE

Platform: Intel(R) Xeon(R) CPU E5-2660 0 @ 2.20GHz, 16 Cores @ 2.2GHz x 2

Supermicro X9DRD

LSI SAS2308 PCI-Express Fusion-MPT SAS-2

Chelsio S320e Dual-Port 10GbE

CPU VM Support: Full

Memory: 64GB

Chassis: Chenbro 40700 4U-48 bay / SAS-2 Backplane

HDD: 6TB x 16, 4TB x 5, 3TB x 3, 2TB x 10, 1.5TB x 12

SSD: 120GB x 2 (mirrored boot), 18.64GB x 1 (SLOG)
