Herr_Merlin

Patron

- Joined

- Oct 25, 2019

- Messages

- 200

I've set up an ESXi host with FreeNAS running as a VM.

I now have 4 NVMe drives, which I passed through to the FreeNAS VM.

The performance is, well... I would expect the same performance out of a Z1 volume made of floppies.

So clearly I missed some tuning or setup step.

Are there guidelines for NVMe pools? I couldn't find any.

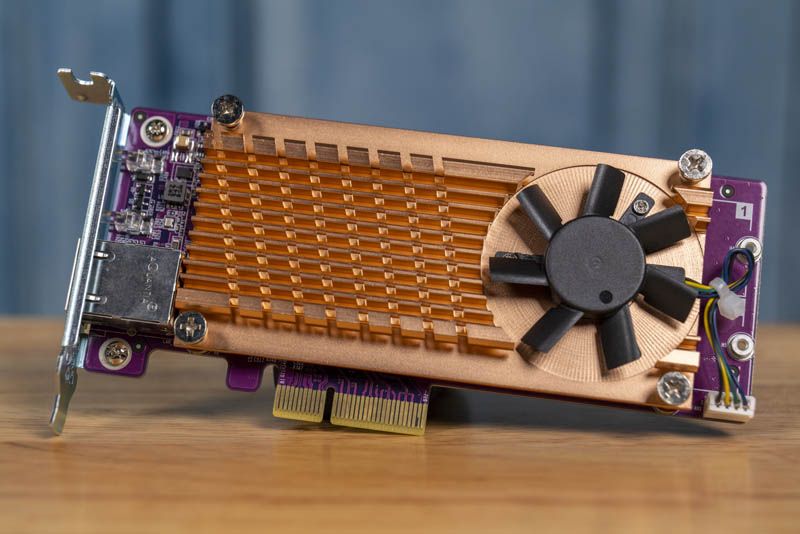

I'm using 4 of these: TS1TMTE220S, mounted on a card with a PCIe switch, so the maximum bandwidth is limited to x8 for all 4 drives combined.

I don't see any errors in FreeNAS or in the logs.

As a reference, I tested the same 4 SSDs on the same card under Windows Server 2019 and built a Storage Spaces pool with single-drive parity and ReFS as the file system. With that setup I was able to get close to the full bandwidth of the PCIe 3.0 x8 link.
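For context, here is a rough sketch of what that x8 ceiling works out to. It assumes the standard PCIe 3.0 figures (8 GT/s per lane, 128b/130b encoding) and ignores packet and protocol overhead, so real-world numbers will be somewhat lower. The function name is just for illustration.

```python
# Rough upper bound for a PCIe 3.0 link, ignoring packet/protocol overhead.
GT_PER_S_PER_LANE = 8e9           # PCIe 3.0 signals at 8 GT/s per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def pcie3_bandwidth_bytes_per_s(lanes: int) -> float:
    """Theoretical payload bandwidth of a PCIe 3.0 link in bytes per second."""
    return lanes * GT_PER_S_PER_LANE * ENCODING_EFFICIENCY / 8  # 8 bits per byte


link = pcie3_bandwidth_bytes_per_s(8)   # the x8 uplink of the switch card
per_drive = link / 4                    # share per drive when all 4 are busy

print(f"x8 link: {link / 1e9:.2f} GB/s")                         # x8 link: 7.88 GB/s
print(f"per drive (4 drives busy): {per_drive / 1e9:.2f} GB/s")  # per drive (4 drives busy): 1.97 GB/s
```

So anything close to 7.9 GB/s sequential across the pool would mean the switch uplink, not the drives, is the limit.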

So I spent some time googling for best practices for NVMe pools but didn't find anything.
