IonutZ

Contributor

- Joined: Aug 17, 2014

- Messages: 108

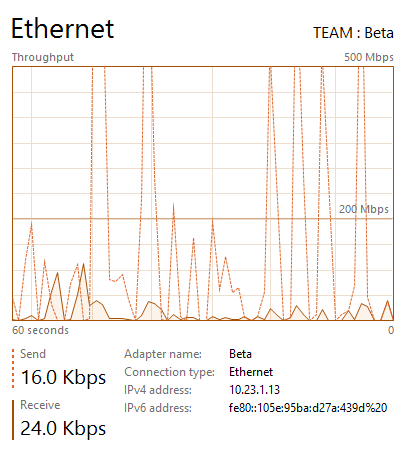

Is there any reason why my network throughput would drop when transferring many small files from a client to the NAS? Both the client and the NAS have 2x Gigabit interfaces configured as a LAGG (LACP), connected through an LACP-capable switch. Reads and writes on both ends go to very capable media. With a large file, throughput goes up to 950 Mbps, but with many small files it drops to as low as 10 Mbps, which is quite inconsistent behavior. I don't even know where to start debugging this, so I'm hoping someone has had a similar experience and was able to fix it. Also, my CAT6 cables are fine.
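One possible starting point for narrowing this down is to reproduce the difference in a controlled way. The sketch below is only an illustration under stated assumptions: it assumes the NAS share is mounted at a local path on the client (passed as the first argument), and the function names, the 64 MiB total, and the 16 KiB small-file size are all hypothetical choices, not anything from my actual setup. It writes the same number of bytes once as a single large file and once as many small files, then prints the effective rate for each, so the per-file overhead shows up separately from raw link bandwidth.

```python
import os
import sys
import tempfile
import time


def write_files(directory, count, size_bytes):
    """Write `count` files of `size_bytes` each; return (seconds, total_bytes)."""
    payload = os.urandom(size_bytes)
    start = time.perf_counter()
    for i in range(count):
        path = os.path.join(directory, f"bench_{i:06d}.bin")
        with open(path, "wb") as f:
            f.write(payload)
            f.flush()
            # Ask the OS to push the data out so caching hides less of the cost.
            os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return elapsed, count * size_bytes


def mbps(total_bytes, seconds):
    """Convert bytes written over a duration into megabits per second."""
    return total_bytes * 8 / max(seconds, 1e-9) / 1_000_000


def compare(target_dir, total_bytes=64 * 1024 * 1024, small_size=16 * 1024):
    """Write the same byte total as one large file and as many small files."""
    results = {}
    cases = (
        ("one large file", 1, total_bytes),
        ("many small files", total_bytes // small_size, small_size),
    )
    for label, count, size in cases:
        # Scratch directory inside the target; removed again after each case.
        with tempfile.TemporaryDirectory(dir=target_dir) as scratch:
            seconds, written = write_files(scratch, count, size)
        results[label] = mbps(written, seconds)
    return results


if __name__ == "__main__":
    # Hypothetical usage: python bench.py /path/to/mounted/nas/share
    target = sys.argv[1] if len(sys.argv) > 1 else tempfile.gettempdir()
    for label, rate in compare(target).items():
        print(f"{label}: {rate:.1f} Mbps")
```

Running it once against the mounted share and once against a local disk on the client should give a rough idea of whether the small-file penalty comes from the network path and file-sharing protocol or from the storage itself. It only measures writes from a single client, so it is a rough indicator and not a full benchmark.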