Elliot Dierksen

After some research reading older posts in the forums, it seems obvious that using all of my Optane 900P 280G for an SLOG is total overkill. I have posted some of my basic results on the speed of the Optane here https://forums.freenas.org/index.php?posts/485730/ and my initial steps for partitioning the Optane here https://forums.freenas.org/index.php?posts/484003/. I have redone the split of my Optane to use some of it for an L2ARC. No particular reason, really, just because I can.

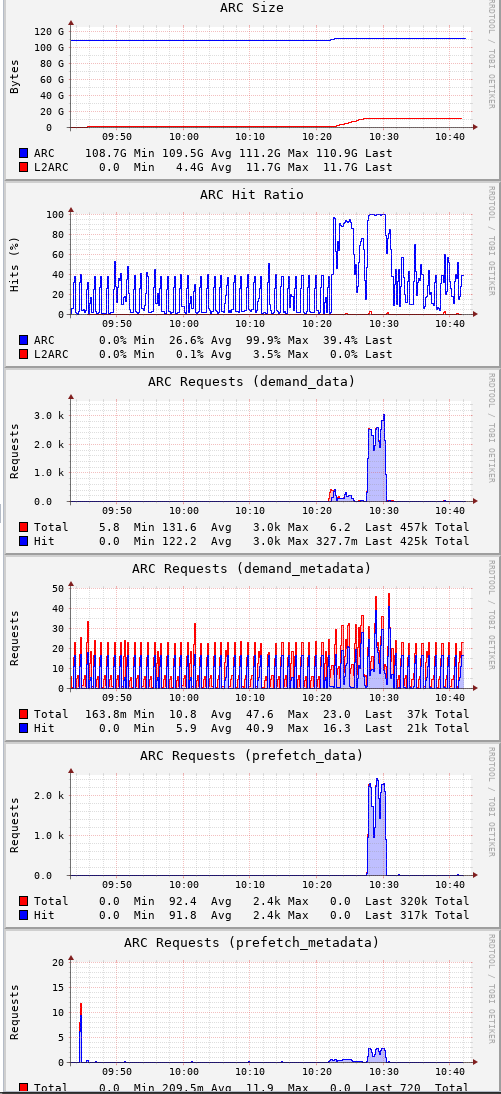

Code:

    gpart destroy -F nvd0    # just making sure
    gpart create -s GPT nvd0
    gpart add -t freebsd-zfs -a 1m -l sloga -s 16G nvd0
    gpart add -t freebsd-zfs -a 1m -l slogb -s 16G nvd0
    gpart add -t freebsd-zfs -a 1m -l slogc -s 16G nvd0
    gpart add -t freebsd-zfs -a 1m -l l2arca nvd0    # takes the rest
    zpool add RAIDZ2-I log nvd0p1
    zpool add RAIDZ2-I cache nvd0p4

I was trying to think of what I could do to see if it was really using that L2ARC. My zpool iostat -v output would seem to indicate so.

Code:

    root@freenas2:/mnt/RAIDZ2-I/VMWare # zpool iostat -v RAIDZ2-I
                                                       capacity     operations    bandwidth
    pool                                              alloc   free   read  write   read  write
    ------------------------------------------------  -----  -----  -----  -----  -----  -----
    RAIDZ2-I                                          5.75T  8.75T     43     53  4.21M  1.11M
      raidz2                                          5.52T  1.73T     41     18  4.07M   393K
        gptid/bd041ac6-9e63-11e7-a091-e4c722848f30        -      -      2      5   824K  84.5K
        gptid/bdef2899-9e63-11e7-a091-e4c722848f30        -      -      4      6   821K  84.5K
        gptid/bed51d90-9e63-11e7-a091-e4c722848f30        -      -      3      6   821K  84.5K
        gptid/bfb76075-9e63-11e7-a091-e4c722848f30        -      -      4      6   821K  84.5K
        gptid/c09c704a-9e63-11e7-a091-e4c722848f30        -      -      3      5   822K  84.5K
        gptid/c1922b7c-9e63-11e7-a091-e4c722848f30        -      -      3      6   821K  84.5K
        gptid/c276eb75-9e63-11e7-a091-e4c722848f30        -      -      3      6   821K  84.5K
        gptid/c3724eeb-9e63-11e7-a091-e4c722848f30        -      -      4      5   820K  84.5K
      raidz2                                           233G  7.02T      1     34   144K   728K
        gptid/a1b7ef4b-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.5K   155K
        gptid/a2eb419f-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.4K   155K
        gptid/a41758d7-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.5K   155K
        gptid/a5444dfb-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.5K   155K
        gptid/a6dcd16f-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.3K   155K
        gptid/a80cd73c-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.4K   155K
        gptid/a94711a5-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.5K   155K
        gptid/aaa6631d-3c2a-11e8-978a-e4c722848f30        -      -      0      7  24.5K   155K
    logs                                                  -      -      -      -      -      -
      nvd0p1                                          8.11M  15.9G      0     89      8  6.27M
    cache                                                 -      -      -      -      -      -
      nvd0p4                                          9.86G   203G      0      5    514  3.70M
    ------------------------------------------------  -----  -----  -----  -----  -----  -----
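One caveat on reading the output above: without an interval argument, zpool iostat reports averages since boot, so the nvd0p4 row (9.86G allocated, about 0 read operations) mostly says the cache is being filled, not that it is being read from. Watching just the cache row over an interval while the read test runs might be more telling. Below is a rough sketch of that idea; cache_reads is a hypothetical helper name, and the column positions are assumed to match the output shown in this post.

```shell
# Hypothetical helper: print read ops and read bandwidth for one device row.
# Assumed column order (as in the output above):
#   name alloc free read-ops write-ops read-bw write-bw
cache_reads() {
    awk -v dev="$1" '$1 == dev { print $4, $6 }'
}

# Try it on the cache row captured above.
echo "nvd0p4 9.86G 203G 0 5 514 3.70M" | cache_reads nvd0p4

# Live use on the FreeNAS host (not run here), sampling every 5 seconds:
#   zpool iostat -v RAIDZ2-I 5 | cache_reads nvd0p4
```

If the read columns for nvd0p4 stay near zero while the dd read passes run, the reads are presumably being served from ARC or the pool disks and not from the L2ARC.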

I can't tell if the ZFS reporting stats are showing that or not.

What I was trying to do is test from a FreeBSD VM running on one of my ESXi hosts. ESXi mounts the datastore on FreeNAS via NFS on a 10Gb link (details are all in my sig).

Code:

    # dd if=/dev/random of=/tmp/write.foo bs=1m count=16384
    16384+0 records in
    16384+0 records out
    17179869184 bytes transferred in 293.751313 secs (58484400 bytes/sec)
    # dd of=/dev/null if=/tmp/write.foo bs=1m
    16384+0 records in
    16384+0 records out
    17179869184 bytes transferred in 47.265901 secs (363472795 bytes/sec)
    # dd of=/dev/null if=/tmp/write.foo bs=1m
    16384+0 records in
    16384+0 records out
    17179869184 bytes transferred in 49.936403 secs (344034975 bytes/sec)
    # dd of=/dev/null if=/tmp/write.foo bs=1m
    16384+0 records in
    16384+0 records out
    17179869184 bytes transferred in 47.397839 secs (362461022 bytes/sec)
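To put those dd figures in more familiar units, here is a small sketch that converts the bytes/sec values to MB/s (decimal megabytes). to_mbs is a hypothetical helper name, not something from FreeNAS.

```shell
# Hypothetical helper: bytes/sec as printed by dd -> MB/s with one decimal.
to_mbs() {
    awk -v b="$1" 'BEGIN { printf "%.1f\n", b / 1000000 }'
}

to_mbs 58484400     # write pass
to_mbs 363472795    # first read pass
to_mbs 344034975    # second read pass
to_mbs 362461022    # third read pass
```

That works out to roughly 58 MB/s written and 344 to 363 MB/s read, against a theoretical 1250 MB/s for a 10Gb link. The three read passes are all about the same speed, so these numbers alone don't show any warm-up effect from one pass to the next.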

I guess my question is: does that indicate that I am using some of the L2ARC, as I suspect? Since my test file is only 16G, it could certainly all fit into the regular ARC. I can't see how I would be hurting anything, but is there a better way to see if this is helping?
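One possible way to check more directly is the ARC kstat counters that FreeBSD exposes through sysctl. The sketch below assumes the kstat.zfs.misc.arcstats.l2_hits and kstat.zfs.misc.arcstats.l2_misses names are present on this FreeNAS version; l2_hit_pct is a hypothetical helper name. It would be run on the FreeNAS host before and after a read pass to see whether l2_hits actually moves.

```shell
# Hypothetical helper: hit percentage from a hits counter and a misses counter.
l2_hit_pct() {
    awk -v h="$1" -v m="$2" 'BEGIN {
        if (h + m == 0) print "n/a"
        else printf "%.1f\n", 100 * h / (h + m)
    }'
}

# Only query the counters if this host exposes them (assumed sysctl names).
if command -v sysctl >/dev/null 2>&1 &&
   sysctl -n kstat.zfs.misc.arcstats.l2_hits >/dev/null 2>&1; then
    hits=$(sysctl -n kstat.zfs.misc.arcstats.l2_hits)
    misses=$(sysctl -n kstat.zfs.misc.arcstats.l2_misses)
    echo "l2_hits=$hits l2_misses=$misses hit%=$(l2_hit_pct "$hits" "$misses")"
fi
```

These counters are cumulative since boot, so the difference between two readings taken around a test run says more than a single value does.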

Edit: I forgot to post the zpool status.

Code:

    root@freenas2:/mnt/RAIDZ2-I/VMWare # zpool status -v RAIDZ2-I
      pool: RAIDZ2-I
     state: ONLINE
      scan: scrub repaired 0 in 0 days 02:47:24 with 0 errors on Wed Oct 3 23:54:43 2018
    config:

        NAME                                            STATE     READ WRITE CKSUM
        RAIDZ2-I                                        ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/bd041ac6-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/bdef2899-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/bed51d90-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/bfb76075-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/c09c704a-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/c1922b7c-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/c276eb75-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
            gptid/c3724eeb-9e63-11e7-a091-e4c722848f30  ONLINE       0     0     0
          raidz2-1                                      ONLINE       0     0     0
            gptid/a1b7ef4b-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/a2eb419f-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/a41758d7-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/a5444dfb-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/a6dcd16f-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/a80cd73c-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/a94711a5-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
            gptid/aaa6631d-3c2a-11e8-978a-e4c722848f30  ONLINE       0     0     0
        logs
          nvd0p1                                        ONLINE       0     0     0
        cache
          nvd0p4                                        ONLINE       0     0     0
        spares
          gptid/4abff125-23a2-11e8-a466-e4c722848f30    AVAIL

    errors: No known data errors