sunshine931 · Explorer · Joined: Jan 23, 2018 · Messages: 54

FreeNAS 11.2-U4

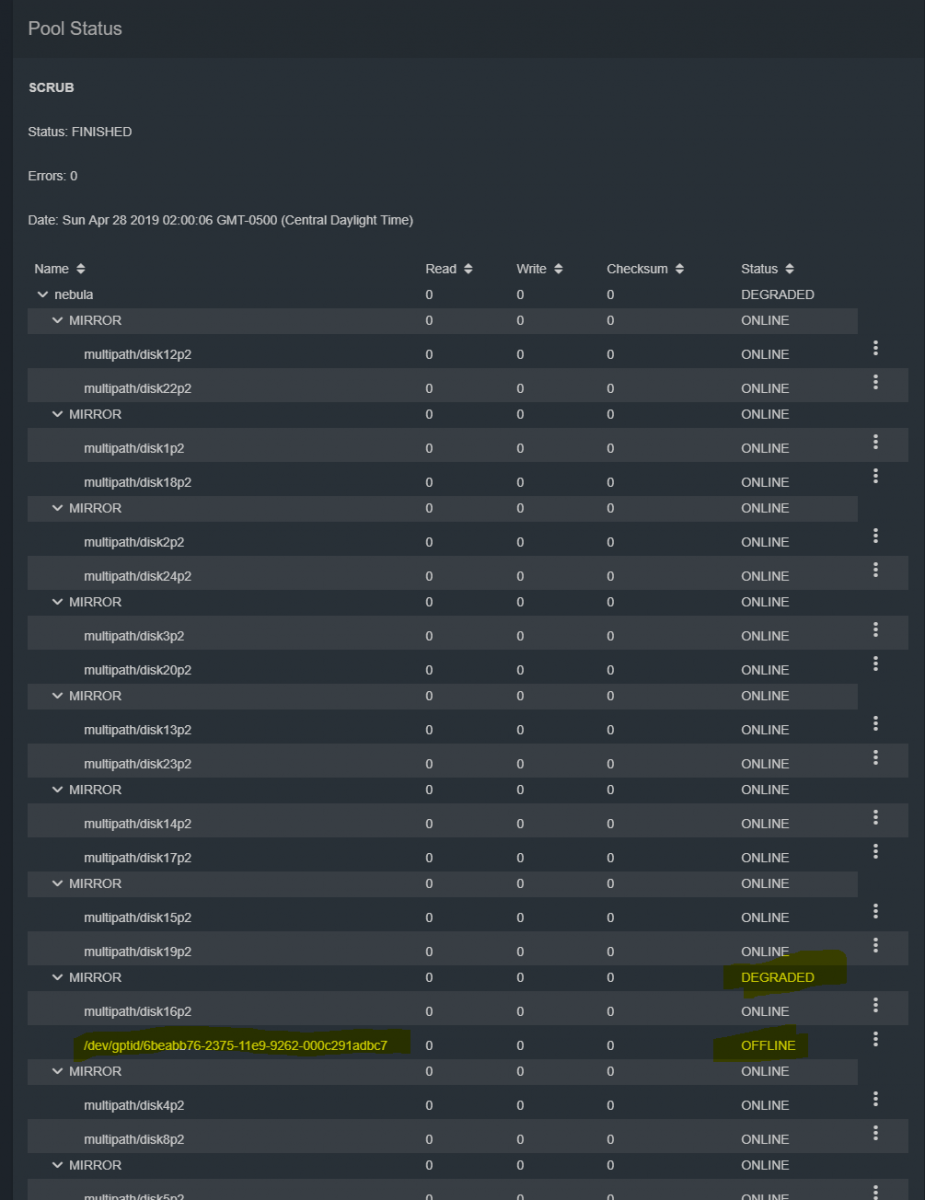

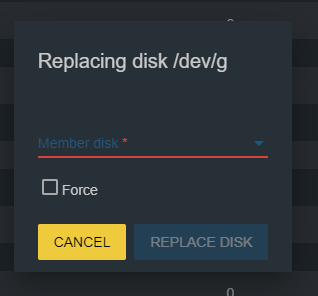
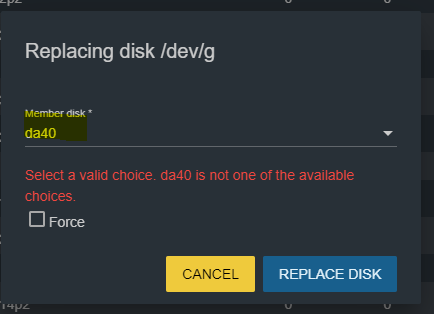
My pool consists of 24 SAS disks, multipath, in mirrored vdevs.

One of my drives was dying, reporting:

Elements in grown defect list: 18532

My pool is healthy, but the drive is on its last legs. This is bad news, of course, so I decided to replace it before it died completely.
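The defect count above comes from SMART data on a SAS drive. With smartmontools, `smartctl -a` on a SAS/SCSI disk includes an "Elements in grown defect list" line. Below is a minimal sketch for pulling out just that number, assuming that output format. `grown_defects` is a hypothetical helper name, not a FreeNAS command.

```shell
#!/bin/sh
# Hypothetical helper: print the "Elements in grown defect list" count from
# smartctl output read on stdin. Assumes the smartmontools SAS/SCSI format,
# where the line reads e.g. "Elements in grown defect list: 18532".
# Returns non-zero if no such line is found.
grown_defects() {
    awk -F': *' '
        /^Elements in grown defect list/ { print $2; found = 1 }
        END { if (!found) exit 1 }
    '
}
```

For example: `smartctl -a /dev/daXX | grown_defects`, where daXX is either da path to the disk. A count that keeps climbing between runs is the usual sign that a drive is remapping sectors faster than it should.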

I followed the procedure described in the FreeNAS docs: I marked the failing drive as "OFFLINE" via the WebUI, removed the old drive, and installed a new one in its place. Then I went back to the WebUI to complete the drive replacement, which is where I am now.

The issue I'm seeing is that I cannot complete the step to replace the OFFLINE drive. I go into the WebUI, navigate to Pools, view the status of the pool and find my "OFFLINE" drive, and attempt to Replace it.

The WebUI pops up a dialog for selecting the replacement drive, but my multipath device is NOT listed. The /dev/daXXX device is there, but not its multipath counterpart. Thinking I might need to use the non-multipath device name instead, I selected it, but the WebUI throws an error.

Note that in the screenshots below, the now "OFFLINE" drive appears with a different path than before. Before I marked it OFFLINE, it showed /dev/multipath/disk21p2. Now, after removing and replacing the physical drive, the WebUI shows a /dev/gptid/xxxxxx device instead. I'm not sure why this changed or what it signifies.
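FreeNAS references pool members by gptid labels, and `glabel status` is the usual way to see which provider (for example multipath/disk21p2) a given gptid sits on. Once the old disk is pulled, its gptid label no longer exists. There is then nothing to resolve it to, which is likely why the WebUI falls back to showing the raw /dev/gptid path.

The sketch below assumes the usual three-column `glabel status` layout (Name, Status, Components). `gptid_map` and `gptid_lookup` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helper: read `glabel status` output on stdin and print
# "gptid-label provider" pairs. Assumes the three-column layout
# (Name, Status, Components); the header line does not match and is skipped.
gptid_map() {
    awk '$1 ~ /^gptid\// { print $1, $3 }'
}

# Hypothetical helper: look up one gptid (given without the "gptid/" prefix)
# and print the provider it lives on. Returns non-zero if the label is absent,
# e.g. because the disk carrying it has been removed.
gptid_lookup() {
    gptid_map | awk -v id="gptid/$1" '
        $1 == id { print $2; found = 1 }
        END { if (!found) exit 1 }
    '
}
```

For example, `glabel status | gptid_lookup 6beabb76-2375-11e9-9262-000c291adbc7` (the former path `zpool status` reports for the OFFLINE member) would be expected to print nothing and fail now that the old disk is gone.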

What should I do? This is a production pool with backups, but I'm VERY concerned: if the remaining disk in that now single-disk vdev dies, my pool is toast.

My pool (cut off to save space):

What I see when I attempt to Replace the drive:

The multipath info for the replacement drive:

```
root@omega[~]# gmultipath list disk21
Geom name: disk21
Type: AUTOMATIC
Mode: Active/Passive
UUID: b20155e9-83db-11e9-9319-000c291adbc7
State: OPTIMAL
Providers:
1. Name: multipath/disk21
   Mediasize: 3000592981504 (2.7T)
   Sectorsize: 512
   Mode: r0w0e0
   State: OPTIMAL
Consumers:
1. Name: da40
   Mediasize: 3000592982016 (2.7T)
   Sectorsize: 512
   Mode: r1w1e1
   State: ACTIVE
2. Name: da47
   Mediasize: 3000592982016 (2.7T)
   Sectorsize: 512
   Mode: r1w1e1
   State: PASSIVE
```
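This output shows three things. First, both paths (da40 ACTIVE, da47 PASSIVE) belong to disk21. Second, each path is held open r1w1e1 (read, write, exclusive) by the multipath GEOM, which would plausibly explain why selecting da40 directly is refused. Third, multipath/disk21 itself is r0w0e0, so nothing has opened it yet. The provider is also exactly 512 bytes (one sector) smaller than each path. That matches Type: AUTOMATIC, where gmultipath keeps its label in the last sector.

Below is a minimal sketch that summarizes such output, assuming the field layout shown above. `mp_summary` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: summarize `gmultipath list <name>` output read on stdin.
# Prints one line per consumer path ("da40 ACTIVE r1w1e1"), then how many bytes
# smaller the multipath provider is than its consumers. Assumes the layout
# shown above (Providers:/Consumers: sections with Name/Mediasize/Mode/State).
mp_summary() {
    awk '
        /^Providers:/ { section = "prov"; next }
        /^Consumers:/ { section = "cons"; next }
        $1 ~ /^[0-9]+\.$/ && $2 == "Name:" { name = $3; next }
        $1 == "Mediasize:" {
            if (section == "prov") psize = $2
            else if (section == "cons") csize = $2
        }
        $1 == "Mode:" && section == "cons" { mode = $2 }
        $1 == "State:" && section == "cons" { print name, $2, mode }
        END {
            if (psize != "" && csize != "")
                print "provider smaller by:", csize - psize
        }
    '
}
```

Usage: `gmultipath list disk21 | mp_summary`.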

Disks in the drop-down (da40 is the new disk, or rather one of the two paths to it):

The error I receive after selecting da40 and clicking the "Replace" button. Note that I DID NOT choose to "Force" the replacement, as I don't know what that might do.

```
root@omega[~]# zpool status nebula
  pool: nebula
 state: DEGRADED
status: One or more devices has been taken offline by the administrator.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Online the device using 'zpool online' or replace the device with
        'zpool replace'.
  scan: scrub repaired 0 in 0 days 09:16:35 with 0 errors on Sun Apr 28 11:16:41 2019
config:

        NAME                                            STATE     READ WRITE CKSUM
        nebula                                          DEGRADED     0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/53e3fce7-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/55826cdf-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/572020ca-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/58ae54e8-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-2                                      ONLINE       0     0     0
            gptid/5a7bbab2-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/5c261ec6-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-3                                      ONLINE       0     0     0
            gptid/5dc3d734-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/5f4d5da1-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-4                                      ONLINE       0     0     0
            gptid/60ea8cf2-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/6285471e-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-5                                      ONLINE       0     0     0
            gptid/640f25b2-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/65a25361-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-6                                      ONLINE       0     0     0
            gptid/6746b749-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/68d4c0d6-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-7                                      DEGRADED     0     0     0
            gptid/6a5cf4a3-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            15207924617641425081                        OFFLINE      0     0     0  was /dev/gptid/6beabb76-2375-11e9-9262-000c291adbc7
          mirror-8                                      ONLINE       0     0     0
            gptid/6d9629ce-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/6f39c052-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-9                                      ONLINE       0     0     0
            gptid/70d34ea0-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/72626e7f-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-10                                     ONLINE       0     0     0
            gptid/7419ee0e-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/75b80698-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
          mirror-11                                     ONLINE       0     0     0
            gptid/7749cc47-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0
            gptid/78e75b73-2375-11e9-9262-000c291adbc7  ONLINE       0     0     0

errors: No known data errors
```
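With the old disk gone, `zpool status` no longer names the member by a device path. It lists the member by its vdev GUID (15207924617641425081), followed by the gptid it used to have. `zpool replace` accepts that GUID as the old-device argument, which is what the status's action line is pointing at.

Below is a minimal sketch for pulling OFFLINE members out of the status text, assuming the column layout shown above. `offline_members` is a hypothetical helper name, and it only reads text; it does not modify the pool.

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and print every
# OFFLINE leaf vdev as "<name-or-guid> <former path>". A member whose disk is
# gone is listed by its numeric GUID, followed by "was <old path>"; if there
# is no "was" clause, only the name is printed.
offline_members() {
    awk '$2 == "OFFLINE" {
        was = ""
        for (i = 3; i < NF; i++)
            if ($i == "was") was = $(i + 1)
        print $1, was
    }'
}
```

Usage: `zpool status nebula | offline_members`.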

dmesg content prior to and after replacing the failing drive:

```
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 30 c0 00 00 20 00 length 16384 SMID 901 Aborting command 0xfffffe0001274e90
mps0: Sending reset from mpssas_send_abort for target ID 79
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 30 a0 00 00 20 00 length 16384 SMID 378 Aborting command 0xfffffe000124a020
mps0: Sending reset from mpssas_send_abort for target ID 79
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 30 c0 00 00 20 00
(da40:mps0:0:79:0): CAM status: Command timeout
(da40:mps0:0:79:0): Retrying command
mps0: mpssas_action_scsiio: Freezing devq for target ID 79
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 30 c0 00 00 20 00
(da40:mps0:0:79:0): CAM status: CAM subsystem is busy
(da40:mps0:0:79:0): Retrying command
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 31 00 00 00 20 00 length 16384 SMID 165 Aborting command 0xfffffe0001238890
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 30 a0 00 00 20 00
mps0: (da40:mps0:0:79:0): CAM status: Command timeout
(da40:mps0:0:79:0): Retrying command
Sending reset from mpssas_send_abort for target ID 79
mps0: Unfreezing devq for target ID 79
(da40:mps0:0:79:0): READ(10). CDB: 28 00 0d 57 31 00 00 00 20 00
(da40:mps0:0:79:0): CAM status: Command timeout
(da40:mps0:0:79:0): Retrying command
(da40:mps0:0:79:0): WRITE(10). CDB: 2a 00 2b 53 99 58 00 00 08 00 length 4096 SMID 69 Aborting command 0xfffffe0001230a90
mps0: Sending reset from mpssas_send_abort for target ID 79
mps0: Unfreezing devq for target ID 79
(da40:mps0:0:79:0): WRITE(10). CDB: 2a 00 2b 53 99 58 00 00 08 00
(da40:mps0:0:79:0): CAM status: Command timeout
(da40:mps0:0:79:0): Retrying command
(da40:mps0:0:79:0): READ(10). CDB: 28 00 03 93 fb e8 00 00 20 00
(da40:mps0:0:79:0): CAM status: SCSI Status Error
(da40:mps0:0:79:0): SCSI status: Check Condition
(da40:mps0:0:79:0): SCSI sense: Deferred error: MEDIUM ERROR asc:3,0 (Peripheral device write fault)
(da40:mps0:0:79:0):
(da40:mps0:0:79:0): Field Replaceable Unit: 0
(da40:mps0:0:79:0): Command Specific Info: 0
(da40:mps0:0:79:0):
(da40:mps0:0:79:0): Descriptor 0x80: f7 eb
(da40:mps0:0:79:0): Descriptor 0x81: 00 2b c1 01 08 75
(da40:mps0:0:79:0): Retrying command (per sense data)
ctl_datamove: tag 0x3252b9e on (0:3:0) aborted
mps0: mpssas_prepare_remove: Sending reset for target ID 88
da47 at mps0 bus 0 scbus34 target 88 lun 0
da47: <HITACHI HUS723030ALS640 A222> s/n YVK6U9TK detached
GEOM_MULTIPATH: da47 in disk21 was disconnected
GEOM_MULTIPATH: da47 removed from disk21
(da47:mps0:0:88:0): Periph destroyed
mps0: Unfreezing devq for target ID 88
mps0: mpssas_prepare_remove: Sending reset for target ID 79
da40 at mps0 bus 0 scbus34 target 79 lun 0
da40: <HITACHI HUS723030ALS640 A222> s/n YVK6U9TK detached
GEOM_MULTIPATH: da40 in disk21 was disconnected
GEOM_MULTIPATH: out of providers for disk21
GEOM_MULTIPATH: da40 removed from disk21
GEOM_MULTIPATH: destroying disk21
GEOM_MULTIPATH: disk21 destroyed
(da40:mps0:0:79:0): Periph destroyed
mps0: Unfreezing devq for target ID 79
da40 at mps0 bus 0 scbus34 target 93 lun 0
da40: <HITACHI HUS723030ALS640 A222> Fixed Direct Access SPC-4 SCSI device
da40: Serial Number YVK42BRK
da40: 600.000MB/s transfers
da40: Command Queueing enabled
da40: 2861588MB (5860533168 512 byte sectors)
da47 at mps0 bus 0 scbus34 target 94 lun 0
da47: <HITACHI HUS723030ALS640 A222> Fixed Direct Access SPC-4 SCSI device
da47: Serial Number YVK42BRK
da47: 600.000MB/s transfers
da47: Command Queueing enabled
da47: 2861588MB (5860533168 512 byte sectors)
GEOM_MULTIPATH: disk21 created
GEOM_MULTIPATH: da40 added to disk21
GEOM_MULTIPATH: da40 is now active path in disk21
GEOM_MULTIPATH: da47 added to disk21
ses0: da47,pass50: SAS Device Slot Element: 1 Phys at Slot 7
ses0: phy 0: SAS device type 1 id 0
ses0: phy 0: protocols: Initiator( None ) Target( SSP )
ses0: phy 0: parent 500a098004347dbf addr 5000cca03eb083b1
ses1: da40,pass43: SAS Device Slot Element: 1 Phys at Slot 7
ses1: phy 0: SAS device type 1 id 1
ses1: phy 0: protocols: Initiator( None ) Target( SSP )
ses1: phy 0: parent 500a098004346bff addr 5000cca03eb083b2
```
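In this log, both da47 and da40 detach with serial YVK6U9TK (the old disk). They then reattach with serial YVK42BRK, after which GEOM_MULTIPATH re-creates disk21 from the two new paths. Grouping da devices by serial number is a quick way to confirm that two daN nodes are the same physical disk.

Below is a minimal sketch, assuming the FreeBSD da(4) message formats seen above. `serials_from_dmesg` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: scan dmesg text on stdin and report which da devices
# detached with which serial number, and which da devices attached with each
# serial. Two daN nodes sharing one serial are two paths to one disk.
# Assumes the message formats seen in the post:
#   "da47: <...> s/n YVK6U9TK detached"
#   "da40: Serial Number YVK42BRK"
serials_from_dmesg() {
    awk '
        NF >= 3 && $NF == "detached" && $(NF - 2) == "s/n" {
            dev = $1; sub(/:$/, "", dev)
            print "detached", dev, $(NF - 1)
        }
        $2 == "Serial" && $3 == "Number" {
            dev = $1; sub(/:$/, "", dev)
            if ($4 in attached) attached[$4] = attached[$4] " " dev
            else attached[$4] = dev
        }
        END { for (s in attached) print "attached", s, attached[s] }
    '
}
```

Usage: `dmesg | serials_from_dmesg`. If several serials attach, the order of the "attached" lines is not guaranteed, because awk's `for (s in ...)` loop is unordered.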
