Hello all, I have a failed drive in one of my raidz2 pools. Normally this is a trivial replacement (I've done it a dozen times already), but this time I can't get the GUI to replace the failed disk with the new one I installed. I also tried from the command line and couldn't get the replace to take either. Anyone have any ideas? Appreciate the help!

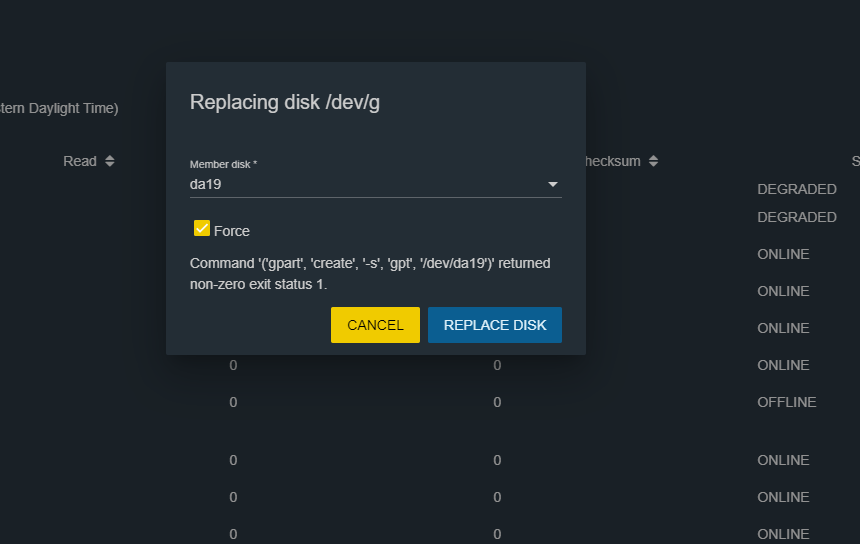

Replacing via the GUI gives me this error:

I have no idea how to get around this. It's a brand-new disk, straight out of the anti-static bag, so I don't think it's DOA...

```
  pool: media
 state: DEGRADED
status: One or more devices has experienced an unrecoverable error.  An
        attempt was made to correct the error.  Applications are unaffected.
action: Determine if the device needs to be replaced, and clear the errors
        using 'zpool clear' or replace the device with 'zpool replace'.
   see: http://illumos.org/msg/ZFS-8000-9P
  scan: scrub repaired 0 in 0 days 13:28:58 with 0 errors on Sun Jun 21 13:28:59 2020
config:

        NAME                                            STATE     READ WRITE CKSUM
        media                                           DEGRADED     0     0     0
          raidz2-0                                      DEGRADED     0     0     0
            gptid/6861e259-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
            gptid/6994874f-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
            gptid/6bd32ca7-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
            gptid/6e20a0df-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
            4779586579116178481                         OFFLINE      6     0     0  was /dev/gptid/6f54e2db-1068-11e9-918e-0c9d9264b1d5
            gptid/718e607b-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
            gptid/73fe3fea-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
            gptid/762e78ac-1068-11e9-918e-0c9d9264b1d5  ONLINE       0     0     0
```
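A minimal sketch of the usual command-line route, assuming the stock `zpool status` column order (NAME STATE READ WRITE CKSUM): once a member drops out, ZFS refers to it by a numeric GUID (4779586579116178481 above), and that GUID is what gets passed to `zpool replace`. The `missing_members` function is a hypothetical awk helper, not a FreeNAS tool, and `<new-disk>` is a placeholder for whatever device the new drive shows up as.

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` text on stdin and print the first
# column of every line whose STATE column is OFFLINE, UNAVAIL, FAULTED or
# REMOVED. For a pool like the one above, that is the dropped disk's GUID.
# The list of states is an assumption covering the common "not healthy" ones.
missing_members() {
    awk '$2 == "OFFLINE" || $2 == "UNAVAIL" || $2 == "FAULTED" || $2 == "REMOVED" { print $1 }'
}

# Usage on the pool from this post (run as root on the NAS):
#   zpool status media | missing_members
#
# The printed GUID then goes to `zpool replace pool device [new_device]`;
# <new-disk> is a placeholder, not a real device on this system:
#   zpool replace media 4779586579116178481 <new-disk>
```

Keep in mind that the FreeNAS GUI normally partitions a new disk before it runs the replace, so a replace against the raw device from the shell may not match the gptid partition layout the other members use.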