HillTopsGM (Cadet; joined Jan 27, 2020; 4 messages)

Hello Everyone.

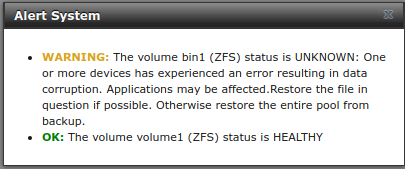

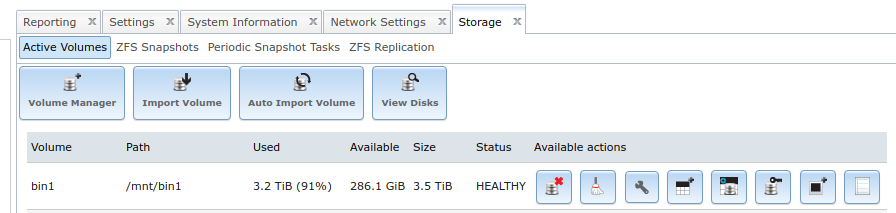

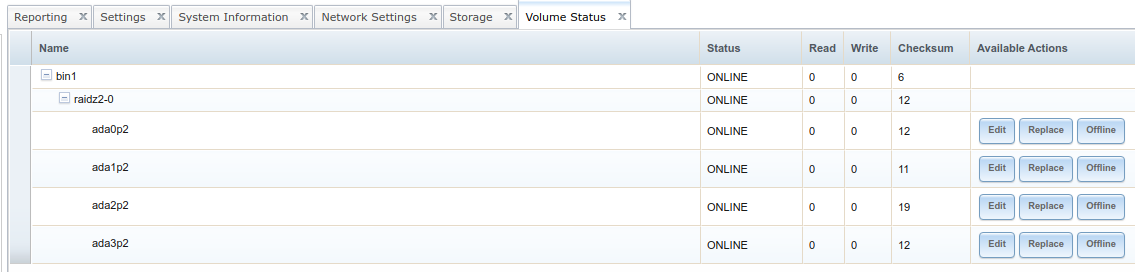

I've had FreeNAS set up for at least 5 years (if not more).

I set it up and let it go . . . never really had to touch it, so I am very much a novice.

Here is what I have:

Build FreeNAS-8.3.1-RELEASE-p2-x64 (r12686+b770da6_dirty)

Platform AMD E-350 Processor

Memory 7772MB

4x2TB Hitachi HDDs set up with RAIDZ2

Generally I don't leave the NAS running ALL the time (it may be off for a couple of weeks before I turn it on to access something).

When I do turn it on, I usually leave it on so it can do a Scrub if it has been off for a while.

Well, for the first time ever, after a scrub, I was emailed this message showing an error:

    Removing stale files from /var/preserve:

    Cleaning out old system announcements:

    Backup passwd and group files:

    Verifying group file syntax:
    /etc/group is fine

    Backing up package db directory:

    Disk status:
    Filesystem             Size    Used   Avail Capacity  Mounted on
    /dev/ufs/FreeNASs2a    926M    381M    470M    45%    /
    devfs                  1.0k    1.0k      0B   100%    /dev
    /dev/md0               4.6M    3.2M    981k    77%    /etc
    /dev/md1               823k    2.5k    755k     0%    /mnt
    /dev/md2               149M     16M    121M    12%    /var
    /dev/ufs/FreeNASs4      19M    1.9M     16M    10%    /data
    bin1                   3.5T    3.2T    286G    92%    /mnt/bin1
    volume1                129G    943M    128G     1%    /mnt/volume1
    volume1/admin          1.8T    1.7T    128G    93%    /mnt/volume1/admin
    volume1/user2          128G     77k    128G     0%    /mnt/volume1/user2

    Last dump(s) done (Dump '>' file systems):

    Checking status of zfs pools:
    NAME      SIZE  ALLOC   FREE    CAP  DEDUP  HEALTH  ALTROOT
    bin1     7.25T  6.56T   707G    90%  1.00x  ONLINE  /mnt
    volume1  1.81T  1.66T   158G    91%  1.00x  ONLINE  /mnt

      pool: bin1
     state: ONLINE
    status: One or more devices has experienced an error resulting in data
            corruption. Applications may be affected.
    action: Restore the file in question if possible. Otherwise restore the
            entire pool from backup.
       see: http://www.sun.com/msg/ZFS-8000-8A
      scan: scrub in progress since Sun Jan 26 22:09:56 2020
            4.61T scanned out of 6.56T at 277M/s, 2h3m to go
            0 repaired, 70.25% done
    config:

            NAME                                            STATE     READ WRITE CKSUM
            bin1                                            ONLINE       0     0     6
              raidz2-0                                      ONLINE       0     0    12
                gptid/74271bb8-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    12
                gptid/74d1a118-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    11
                gptid/75c1c7f5-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    19
                gptid/766b7256-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    12

    errors: 6 data errors, use '-v' for a list

    Checking status of ATA raid partitions:

    Checking status of gmirror(8) devices:

    Checking status of graid3(8) devices:

    Checking status of gstripe(8) devices:

    Network interface status:
    Name    Mtu Network       Address               Ipkts Ierrs Idrop     Opkts Oerrs  Coll
    usbus     0 <Link#1>                                0     0     0         0     0     0
    usbus     0 <Link#2>                                0     0     0         0     0     0
    usbus     0 <Link#3>                                0     0     0         0     0     0
    usbus     0 <Link#4>                                0     0     0         0     0     0
    usbus     0 <Link#5>                                0     0     0         0     0     0
    re0    1500 <Link#6>      f4:6d:04:d9:8f:51 195965454     0     0 245839538     0     0
    re0    1500 192.168.1.0   192.168.1.7       195442833     -     - 245838294     -     -
    usbus     0 <Link#7>                                0     0     0         0     0     0
    usbus     0 <Link#8>                                0     0     0         0     0     0
    lo0   16384 <Link#9>                            42768     0     0     42768     0     0
    lo0   16384 fe80::1%lo0   fe80::1                   0     -     -         0     -     -
    lo0   16384 localhost     ::1                       2     -     -         2     -     -
    lo0   16384 your-net      localhost             42766     -     -     42766     -     -

    Security check:
    (output mailed separately)

    Checking status of 3ware RAID controllers:
    Alarms (most recent first):
    No new alarms.

    -- End of daily output --

I wasn't sure what that meant exactly.

I logged in to the admin section via a browser, and everything SEEMED to be OK there. I was able to access all my files over the network normally, so I opened the Shell and ran zpool status -v.

Here is the output for that:

      pool: bin1
     state: ONLINE
    status: One or more devices has experienced an error resulting in data
            corruption. Applications may be affected.
    action: Restore the file in question if possible. Otherwise restore the
            entire pool from backup.
       see: http://www.sun.com/msg/ZFS-8000-8A
      scan: scrub repaired 0 in 6h56m with 3 errors on Mon Jan 27 05:06:02 2020
    config:

            NAME                                            STATE     READ WRITE CKSUM
            bin1                                            ONLINE       0     0     6
              raidz2-0                                      ONLINE       0     0    12
                gptid/74271bb8-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    12
                gptid/74d1a118-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    11
                gptid/75c1c7f5-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    19
                gptid/766b7256-5554-11e2-9a32-f46d04d98f51  ONLINE       0     0    12

    errors: Permanent errors have been detected in the following files:

            /mnt/bin1/001_My_Operational_VMs/abc.vdi
            /mnt/bin1/Styles/def.vdi
            /mnt/bin1/Videos/xyz.mp4

      pool: volume1
     state: ONLINE
      scan: scrub repaired 0 in 5h41m with 0 errors on Sun Jan 26 05:41:41 2020
    config:

            NAME                                          STATE     READ WRITE CKSUM
            volume1                                       ONLINE       0     0     0
              gptid/004b94fb-0755-11e1-be94-f46d04d98f51  ONLINE       0     0     0

    errors: No known data errors
    [root@freenas ~]#
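For anyone who wants to pull the per-device checksum counts out of output like the above, here is a minimal Python sketch. It is not part of FreeNAS or ZFS: the function name `cksum_by_device`, the regular expression, and the list of device states are assumptions made for illustration, and it only handles plain integer counters (not abbreviated ones such as `1.2K`). The sample text is the bin1 config table from this post.

```python
import re

# Config table for pool bin1, copied from the zpool status -v output in this post.
SAMPLE = """\
NAME STATE READ WRITE CKSUM
bin1 ONLINE 0 0 6
raidz2-0 ONLINE 0 0 12
gptid/74271bb8-5554-11e2-9a32-f46d04d98f51 ONLINE 0 0 12
gptid/74d1a118-5554-11e2-9a32-f46d04d98f51 ONLINE 0 0 11
gptid/75c1c7f5-5554-11e2-9a32-f46d04d98f51 ONLINE 0 0 19
gptid/766b7256-5554-11e2-9a32-f46d04d98f51 ONLINE 0 0 12
"""

# Assumed row shape: name, state, then READ / WRITE / CKSUM as plain integers.
ROW = re.compile(
    r"^\s*(\S+)\s+(ONLINE|DEGRADED|FAULTED|OFFLINE|UNAVAIL|REMOVED)"
    r"\s+(\d+)\s+(\d+)\s+(\d+)\s*$"
)


def cksum_by_device(status_text):
    """Return {name: CKSUM count} for every row of a zpool status config table."""
    counts = {}
    for line in status_text.splitlines():
        match = ROW.match(line)
        if match:  # the header row and free text do not match and are skipped
            counts[match.group(1)] = int(match.group(5))
    return counts


counts = cksum_by_device(SAMPLE)
for name, cksum in counts.items():
    print(name, cksum)
```

Run against the table above, this shows a non-zero CKSUM count on all four gptid devices, not just one of them.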

That made me feel a little better as I have those files backed up elsewhere.

So far the experience has me wondering about what to expect from FreeNAS.

I realise that there have been SIGNIFICANT updates to the OS (I hope to get around to upgrading sometime soon).

For now, here are my questions:

1. I was under the impression that, with a raidz2 setup, a scrub should detect a problem with one of the copies and then repair the corrupted one. Did both copies get corrupted here?

2. The emailed report mentioned 6 data errors, yet zpool status -v listed 3 files . . . is that because both copies that were created in the raidz2 pool were corrupted?

3. I was under the impression that with raidz2 setups, the chance of corrupted files was supposed to be very low (almost non-existent) because of the scrubs and file checking. Could there be something else going on here?

Here are a couple of images from my admin GUI (not sure if this helps):

Thanks for your consideration.