Hi,

I'm running 6 drives in RAID 10 on TrueNAS CORE 13.0-U6.1, and I'm seeing constantly increasing checksum errors on both drives of one of the 3 mirrors.

I listed the corrupted files with `zpool status -v`, deleted them, and re-ran a scrub. I did that a few times, but each scrub comes back with more corrupted files and the checksum error counts keep increasing.

I checked both drives with smartctl and they both pass the overall health self-assessment. I also checked the cables, and the two drives are on different controllers (one on the onboard SATA, the other on a PCIe SAS HBA). Each controller has other drives attached that aren't experiencing any errors.

What could be the problem, and how can I resolve it?

Ultimately I would like to switch to raidz2 instead of 3 striped mirrors of 2 drives, for better robustness against multi-drive failures, but I don't have any spare drives to do that right now.
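To put a number on the robustness difference, here is a minimal sketch that counts which combinations of failed drives each layout survives. It assumes 6 identical drives, the usual rule that a striped-mirror pool is lost once any single mirror loses both drives, and that raidz2 tolerates any 2 failures. The drive labels are placeholders, not the real device names.

```python
from itertools import combinations

# Layout from this post: 3 mirrors of 2 drives (labels are placeholders).
MIRRORS = [("a0", "a1"), ("b0", "b1"), ("c0", "c1")]


def mirrors_survive(failed, mirrors):
    """Striped mirrors survive as long as no mirror has lost all its drives."""
    failed = set(failed)
    return all(not set(m) <= failed for m in mirrors)


def survival_mirrors(mirrors, n_failures):
    """Return (surviving combinations, total combinations) for n failed drives."""
    drives = [d for m in mirrors for d in m]
    combos = list(combinations(drives, n_failures))
    return sum(mirrors_survive(c, mirrors) for c in combos), len(combos)


def survival_raidz(n_drives, parity, n_failures):
    """A single raidz vdev survives any failure set no larger than its parity."""
    total = len(list(combinations(range(n_drives), n_failures)))
    return (total if n_failures <= parity else 0), total


if __name__ == "__main__":
    for k in (1, 2, 3):
        m_ok, m_total = survival_mirrors(MIRRORS, k)
        z_ok, z_total = survival_raidz(6, 2, k)
        print(f"{k} failed: mirrors {m_ok}/{m_total}, raidz2 {z_ok}/{z_total}")
```

With two failed drives, the mirror layout survives 12 of the 15 possible pairs (the 3 fatal pairs are the ones that take out a whole mirror), while raidz2 survives all 15. With three failures raidz2 is always lost, whereas the mirrors still survive the cases where each mirror loses exactly one drive.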

Before deleting the corrupted files and re-running a scrub:

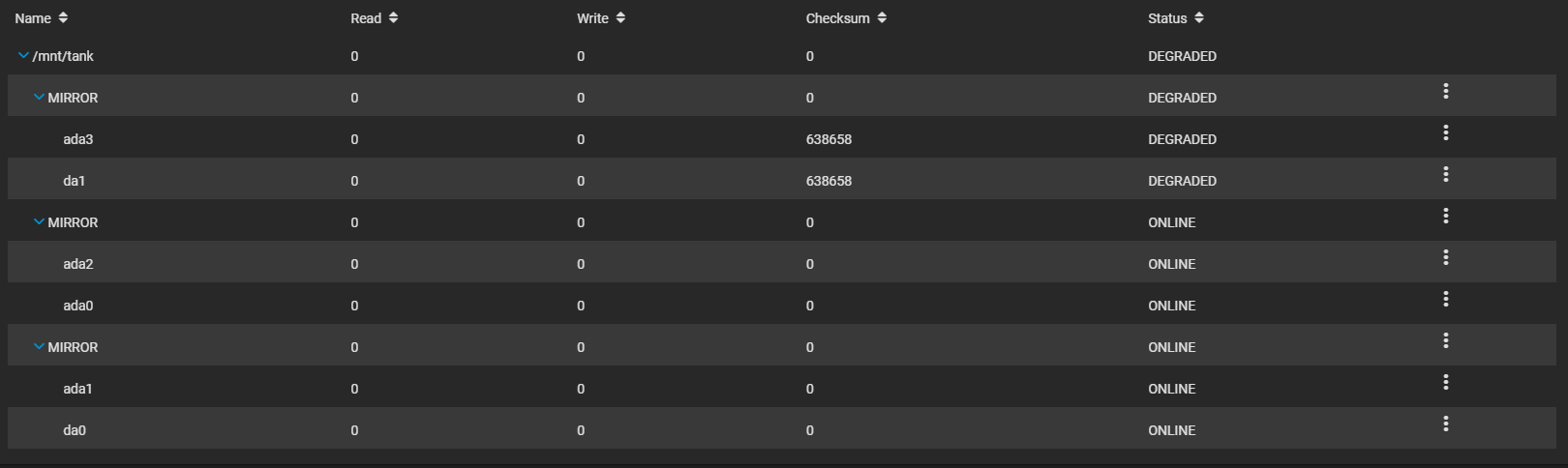

Code:

root@truenas:~ # zpool status -v

pool: boot-pool

state: ONLINE

scan: scrub repaired 0B in 00:00:04 with 0 errors on Mon Dec 11 03:45:04 2023

config:

NAME STATE READ WRITE CKSUM

boot-pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

nvd0p2 ONLINE 0 0 0

nvd1p2 ONLINE 0 0 0

errors: No known data errors

pool: tank

state: DEGRADED

status: One or more devices has experienced an error resulting in data

corruption. Applications may be affected.

action: Restore the file in question if possible. Otherwise restore the

entire pool from backup.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-8A

scan: scrub repaired 0B in 03:37:46 with 13 errors on Thu Dec 14 03:37:58 2023

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

gptid/0d082ece-199a-11ec-9d77-fcaa141f8c79 DEGRADED 0 0 620K too many errors

gptid/9cf66b69-978d-11ee-b5ee-a8a159b72230 DEGRADED 0 0 620K too many errors

mirror-1 ONLINE 0 0 0

gptid/34c0bd67-9791-11ee-9358-a8a159b72230 ONLINE 0 0 0

gptid/2bb7ec6b-6f44-11e7-9d69-fcaa141f8c79 ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gptid/2cb7cdb2-7c66-11e7-9193-fcaa141f8c79 ONLINE 0 0 0

gptid/2ff8d1fa-7c66-11e7-9193-fcaa141f8c79 ONLINE 0 0 0

errors: Permanent errors have been detected in the following files:

tank/iocage/jails/NextCloud/root:<0x50085>

/mnt/tank/iocage/jails/NextCloud/root/var/log/maillog

tank/iocage/jails/Web/root@auto-2023-12-13_00-00:/var/db/mysql/ib_buffer_pool

tank/iocage/jails/Web/root@auto-2023-12-13_00-00:<0x54c93>

tank/iocage/jails/NextCloud/root@auto-2023-12-13_00-00:/var/log/php-fpm.log

tank/iocage/jails/NextCloud/root@auto-2023-12-13_00-00:/var/spool/clientmqueue/df3B895086011949

tank/iocage/jails/NextCloud/root@auto-2023-12-13_00-00:/var/log/maillog

tank/iocage/jails/BrewMonitor/root@auto-2023-12-13_00-00:/var/log/httpd-error.log

tank/iocage/jails/Web/root:<0x54c93>

tank/iocage:<0x0>

After deleting the corrupted files and re-running a scrub:

Code:

root@truenas:~ # zpool status -v

pool: boot-pool

state: ONLINE

scan: scrub repaired 0B in 00:00:04 with 0 errors on Mon Dec 11 03:45:04 2023

config:

NAME STATE READ WRITE CKSUM

boot-pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

nvd0p2 ONLINE 0 0 0

nvd1p2 ONLINE 0 0 0

errors: No known data errors

pool: tank

state: DEGRADED

status: One or more devices has experienced an error resulting in data

corruption. Applications may be affected.

action: Restore the file in question if possible. Otherwise restore the

entire pool from backup.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-8A

scan: scrub in progress since Thu Dec 14 15:11:03 2023

1.17T scanned at 978M/s, 277G issued at 226M/s, 1.77T total

0B repaired, 15.29% done, 01:56:06 to go

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

gptid/0d082ece-199a-11ec-9d77-fcaa141f8c79 DEGRADED 0 0 625K too many errors

gptid/9cf66b69-978d-11ee-b5ee-a8a159b72230 DEGRADED 0 0 625K too many errors

mirror-1 ONLINE 0 0 0

gptid/34c0bd67-9791-11ee-9358-a8a159b72230 ONLINE 0 0 0

gptid/2bb7ec6b-6f44-11e7-9d69-fcaa141f8c79 ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

gptid/2cb7cdb2-7c66-11e7-9193-fcaa141f8c79 ONLINE 0 0 0

gptid/2ff8d1fa-7c66-11e7-9193-fcaa141f8c79 ONLINE 0 0 0

errors: Permanent errors have been detected in the following files:

tank/iocage/jails/NextCloud/root:<0x50085>

tank/iocage/jails/NextCloud/root:<0x719c3>

<0x8a47>:<0x80306>

<0x8a47>:<0x54c93>

<0x8a4b>:<0x2ec3f>

<0x8a4b>:<0x50085>

<0x8a4b>:<0x719c3>

<0x8a57>:<0xc358>

tank/iocage/jails/Web/root:<0x54c93>

tank/iocage:<0x0>
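To track how fast the counters grow between scrubs, the CKSUM column of the two outputs above can be compared programmatically. This is only a rough sketch: the function names are made up for illustration, the device rows are copied from the outputs above, and treating the `K` suffix as 1024 is an assumption (the abbreviated value is rounded in any case; `zpool status -p` should print exact numbers if your version supports it).

```python
import re

# Multipliers for abbreviated counters. K = 1024 is an assumption.
_SUFFIX = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3}
_STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}


def parse_count(text):
    """Convert an abbreviated counter such as '620K' to an approximate integer."""
    m = re.fullmatch(r"(\d+(?:\.\d+)?)([KMG]?)", text)
    if m is None:
        raise ValueError(f"unrecognised counter: {text!r}")
    return int(float(m.group(1)) * _SUFFIX[m.group(2)])


def cksum_by_device(status_text):
    """Map each device row (NAME STATE READ WRITE CKSUM ...) to its CKSUM count."""
    counts = {}
    for line in status_text.splitlines():
        fields = line.split()
        if len(fields) >= 5 and fields[1] in _STATES:
            try:
                counts[fields[0]] = parse_count(fields[4])
            except ValueError:
                continue  # not a counter row after all
    return counts


def growth(before, after):
    """Return only the devices whose CKSUM count changed, with the difference."""
    return {
        dev: after[dev] - before.get(dev, 0)
        for dev in after
        if after[dev] != before.get(dev, 0)
    }


# Device rows copied from the two `zpool status -v` outputs above.
BEFORE = """\
gptid/0d082ece-199a-11ec-9d77-fcaa141f8c79 DEGRADED 0 0 620K too many errors
gptid/9cf66b69-978d-11ee-b5ee-a8a159b72230 DEGRADED 0 0 620K too many errors
gptid/34c0bd67-9791-11ee-9358-a8a159b72230 ONLINE 0 0 0
"""
AFTER = BEFORE.replace("620K", "625K")

if __name__ == "__main__":
    for dev, delta in growth(cksum_by_device(BEFORE), cksum_by_device(AFTER)).items():
        print(f"{dev}: +{delta} checksum errors (approximate)")
```

On the rows above, this reports the same increase of roughly 5K on both members of mirror-0 and nothing for the healthy mirror.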

Smartctl status of each drive:

Code:

root@truenas:~ # smartctl -a /dev/ada3

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: HGST Travelstar 7K1000

Device Model: HGST HTS721010A9E630

Serial Number: JG40006PGS378C

LU WWN Device Id: 5 000cca 6acca80ad

Firmware Version: JB0OA3B0

User Capacity: 1,000,204,886,016 bytes [1.00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 2.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS T13/1699-D revision 6

SATA Version is: SATA 2.6, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Thu Dec 14 15:18:02 2023 GMT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x82) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 45) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 194) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 062 Pre-fail Always - 0

2 Throughput_Performance 0x0005 198 198 040 Pre-fail Offline - 98

3 Spin_Up_Time 0x0007 180 180 033 Pre-fail Always - 0

4 Start_Stop_Count 0x0012 100 100 000 Old_age Always - 87

5 Reallocated_Sector_Ct 0x0033 063 063 005 Pre-fail Always - 0

7 Seek_Error_Rate 0x000b 100 100 067 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 110 110 040 Pre-fail Offline - 36

9 Power_On_Hours 0x0012 022 022 000 Old_age Always - 34388

10 Spin_Retry_Count 0x0013 100 100 060 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 80

191 G-Sense_Error_Rate 0x000a 100 100 000 Old_age Always - 0

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 32

193 Load_Cycle_Count 0x0012 099 099 000 Old_age Always - 13062

194 Temperature_Celsius 0x0002 193 193 000 Old_age Always - 31 (Min/Max 14/65)

196 Reallocated_Event_Count 0x0032 063 063 000 Old_age Always - 1052

197 Current_Pending_Sector 0x0022 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0008 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x000a 200 200 000 Old_age Always - 0

223 Load_Retry_Count 0x000a 100 100 000 Old_age Always - 0

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Completed without error 00% 34341 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

Code:

root@truenas:~ # smartctl -a /dev/da1

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: HGST Travelstar 7K1000

Device Model: HGST HTS721010A9E630

Serial Number: JG40006PGS4AMC

LU WWN Device Id: 5 000cca 6acca84d6

Firmware Version: JB0OA3B0

User Capacity: 1,000,204,886,016 bytes [1.00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 2.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS T13/1699-D revision 6

SATA Version is: SATA 2.6, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Thu Dec 14 15:18:51 2023 GMT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x82) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 45) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 175) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 062 Pre-fail Always - 0

2 Throughput_Performance 0x0005 198 198 040 Pre-fail Offline - 98

3 Spin_Up_Time 0x0007 180 180 033 Pre-fail Always - 2

4 Start_Stop_Count 0x0012 100 100 000 Old_age Always - 195

5 Reallocated_Sector_Ct 0x0033 100 100 005 Pre-fail Always - 0

7 Seek_Error_Rate 0x000b 100 100 067 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 110 110 040 Pre-fail Offline - 36

9 Power_On_Hours 0x0012 069 069 000 Old_age Always - 13957

10 Spin_Retry_Count 0x0013 100 100 060 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 83

191 G-Sense_Error_Rate 0x000a 100 100 000 Old_age Always - 0

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 29

193 Load_Cycle_Count 0x0012 071 071 000 Old_age Always - 292502

194 Temperature_Celsius 0x0002 176 176 000 Old_age Always - 34 (Min/Max 14/39)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0

197 Current_Pending_Sector 0x0022 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0008 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x000a 200 200 000 Old_age Always - 8

223 Load_Retry_Count 0x000a 100 100 000 Old_age Always - 0

SMART Error Log Version: 1

ATA Error Count: 8 (device log contains only the most recent five errors)

CR = Command Register [HEX]

FR = Features Register [HEX]

SC = Sector Count Register [HEX]

SN = Sector Number Register [HEX]

CL = Cylinder Low Register [HEX]

CH = Cylinder High Register [HEX]

DH = Device/Head Register [HEX]

DC = Device Command Register [HEX]

ER = Error register [HEX]

ST = Status register [HEX]

Powered_Up_Time is measured from power on, and printed as

DDd+hh:mm:SS.sss where DD=days, hh=hours, mm=minutes,

SS=sec, and sss=millisec. It "wraps" after 49.710 days.

Error 8 occurred at disk power-on lifetime: 9890 hours (412 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

84 51 41 9f 71 16 00 Error: ICRC, ABRT at LBA = 0x0016719f = 1470879

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 80 08 60 71 16 40 00 00:09:01.645 READ FPDMA QUEUED

60 80 00 e0 6f 16 40 00 00:09:01.644 READ FPDMA QUEUED

60 80 08 60 6e 16 40 00 00:09:01.643 READ FPDMA QUEUED

60 80 00 e0 6c 16 40 00 00:09:01.640 READ FPDMA QUEUED

60 80 08 60 6b 16 40 00 00:09:01.639 READ FPDMA QUEUED

Error 7 occurred at disk power-on lifetime: 9890 hours (412 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

84 51 01 5f fe 15 00 Error: ICRC, ABRT at LBA = 0x0015fe5f = 1441375

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 80 00 e0 fd 15 40 00 00:09:01.221 READ FPDMA QUEUED

60 80 08 60 fc 15 40 00 00:09:01.220 READ FPDMA QUEUED

60 80 00 e0 fa 15 40 00 00:09:01.219 READ FPDMA QUEUED

60 80 08 60 f9 15 40 00 00:09:01.217 READ FPDMA QUEUED

60 80 00 e0 f7 15 40 00 00:09:01.216 READ FPDMA QUEUED

Error 6 occurred at disk power-on lifetime: 9890 hours (412 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

84 51 01 5f e3 12 00 Error: ICRC, ABRT at LBA = 0x0012e35f = 1237855

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 80 00 e0 e1 12 40 00 00:09:00.202 READ FPDMA QUEUED

60 80 08 60 e0 12 40 00 00:09:00.201 READ FPDMA QUEUED

60 80 00 e0 de 12 40 00 00:09:00.200 READ FPDMA QUEUED

60 80 08 60 dd 12 40 00 00:09:00.199 READ FPDMA QUEUED

60 80 00 e0 db 12 40 00 00:09:00.197 READ FPDMA QUEUED

Error 5 occurred at disk power-on lifetime: 9890 hours (412 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

84 51 71 ef a3 11 00 Error: ICRC, ABRT at LBA = 0x0011a3ef = 1156079

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 80 00 e0 a3 11 40 00 00:08:59.606 READ FPDMA QUEUED

60 80 08 60 a2 11 40 00 00:08:59.603 READ FPDMA QUEUED

60 80 00 e0 a0 11 40 00 00:08:59.602 READ FPDMA QUEUED

60 80 08 60 9f 11 40 00 00:08:59.601 READ FPDMA QUEUED

60 80 00 e0 9d 11 40 00 00:08:59.600 READ FPDMA QUEUED

Error 4 occurred at disk power-on lifetime: 9889 hours (412 days + 1 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

84 51 91 ef e7 06 00 Error: ICRC, ABRT at LBA = 0x0006e7ef = 452591

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 00 00 80 e6 06 40 00 00:04:21.946 READ FPDMA QUEUED

60 20 00 00 07 4c 40 00 00:04:21.939 READ FPDMA QUEUED

60 20 00 80 06 8c 40 00 00:04:21.938 READ FPDMA QUEUED

60 08 00 80 06 8c 40 00 00:04:21.938 READ FPDMA QUEUED

60 08 08 08 07 4c 40 00 00:04:21.938 READ FPDMA QUEUED

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Completed without error 00% 13910 -

# 2 Short offline Completed without error 00% 13852 -

# 3 Short offline Completed without error 00% 13828 -

# 4 Short offline Completed without error 00% 13804 -

# 5 Short offline Completed without error 00% 13780 -

# 6 Short offline Completed without error 00% 13756 -

# 7 Short offline Completed without error 00% 13732 -

# 8 Extended offline Completed without error 00% 13711 -

# 9 Short offline Completed without error 00% 13684 -

#10 Short offline Completed without error 00% 13660 -

#11 Short offline Completed without error 00% 13636 -

#12 Short offline Completed without error 00% 13612 -

#13 Short offline Completed without error 00% 13588 -

#14 Short offline Completed without error 00% 13564 -

#15 Extended offline Completed without error 00% 13543 -

#16 Short offline Completed without error 00% 13516 -

#17 Short offline Completed without error 00% 13492 -

#18 Short offline Completed without error 00% 13468 -

#19 Short offline Completed without error 00% 13444 -

#20 Short offline Completed without error 00% 13420 -

#21 Short offline Completed without error 00% 13396 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.
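Since these reports are long, here is a small sketch that pulls out a handful of attributes with a non-zero raw value from `smartctl -a` text. The choice of attribute IDs to watch (5, 196, 197, 198, 199) is my own assumption rather than anything smartctl prescribes, and the sample rows are copied from the two reports above.

```python
# Attribute IDs to watch. This selection is an assumption for illustration.
WATCHED = {5, 196, 197, 198, 199}


def flag_attributes(smart_text, watched=WATCHED):
    """Return {attribute_name: raw_value} for watched attributes with raw > 0."""
    flagged = {}
    for line in smart_text.splitlines():
        fields = line.split()
        # Attribute rows: ID NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW
        if len(fields) < 10 or not fields[0].isdigit():
            continue
        if not fields[2].startswith("0x"):
            continue  # skips error-log rows that also start with a number
        raw = fields[9]
        if int(fields[0]) in watched and raw.isdigit() and int(raw) > 0:
            flagged[fields[1]] = int(raw)
    return flagged


# Rows copied from the /dev/ada3 report above.
ADA3 = """\
5 Reallocated_Sector_Ct 0x0033 063 063 005 Pre-fail Always - 0
196 Reallocated_Event_Count 0x0032 063 063 000 Old_age Always - 1052
197 Current_Pending_Sector 0x0022 100 100 000 Old_age Always - 0
199 UDMA_CRC_Error_Count 0x000a 200 200 000 Old_age Always - 0
"""

# Rows copied from the /dev/da1 report above.
DA1 = """\
5 Reallocated_Sector_Ct 0x0033 100 100 005 Pre-fail Always - 0
196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0
199 UDMA_CRC_Error_Count 0x000a 200 200 000 Old_age Always - 8
60 80 08 60 71 16 40 00 00:09:01.645 READ FPDMA QUEUED
"""

if __name__ == "__main__":
    for name, text in (("ada3", ADA3), ("da1", DA1)):
        print(name, flag_attributes(text))
```

On the two reports above it picks out `Reallocated_Event_Count` (1052) on ada3 and `UDMA_CRC_Error_Count` (8) on da1, the latter matching the 8 ICRC entries in that drive's error log. Whether either value is related to the checksum errors is a separate question.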